Nexa AI has released OmniAudio-2.6B, an audio language model optimized for edge devices. It combines automatic speech recognition (ASR) and a language model in a single framework, improving processing speed and efficiency and avoiding the overhead and latency that arise when separate components are chained together in traditional architectures. The model is particularly suited to devices with limited computing resources, such as wearables, automotive systems, and IoT devices.

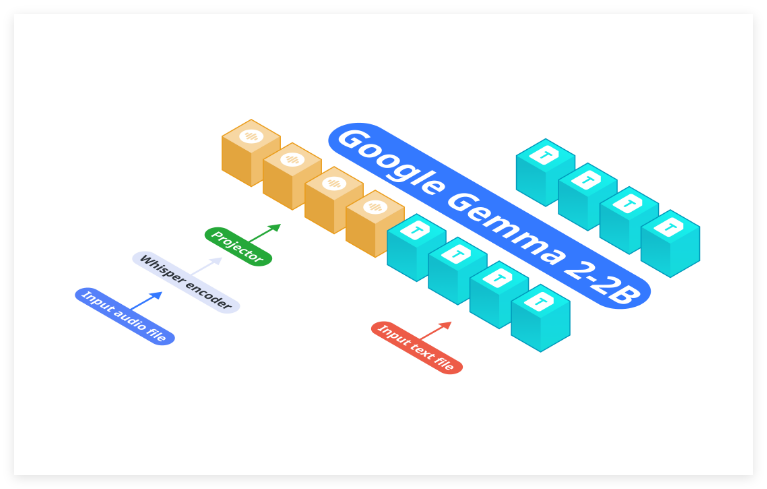

Nexa AI designed OmniAudio-2.6B for efficient deployment on edge devices. Unlike traditional architectures that keep automatic speech recognition (ASR) and the language model separate, OmniAudio-2.6B integrates Gemma-2-2b, Whisper Turbo, and a custom projector into a unified framework. This design eliminates the inefficiency and latency caused by linking separate components in a traditional pipeline, which makes the model particularly suitable for devices with limited computing resources.
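
The role of a projector in a unified design like this can be illustrated with a toy sketch. The article does not describe the projector's internals, so everything here is an assumption made for illustration: a single linear layer, tiny made-up dimensions, random weights in place of learned ones, and hypothetical function names. The idea shown is only that audio-encoder frames are mapped into the language model's embedding width so that audio and text can share one input sequence.

```python
import random

# Toy sizes, chosen for readability only (assumption, not the real model widths).
AUDIO_DIM = 4   # width of one audio-encoder frame
TEXT_DIM = 6    # width of one language-model token embedding


def make_projector(in_dim, out_dim, seed=0):
    """Build a random (out_dim x in_dim) weight matrix.

    A real projector would be learned during training; random values
    are used here only so the sketch runs on its own.
    """
    rng = random.Random(seed)
    return [[rng.uniform(-0.1, 0.1) for _ in range(in_dim)]
            for _ in range(out_dim)]


def project(frames, weights):
    """Apply the linear map to every audio frame (matrix-vector product)."""
    return [[sum(w * x for w, x in zip(row, frame)) for row in weights]
            for frame in frames]


def build_input_sequence(audio_frames, text_embeddings, weights):
    """Project audio frames to the text width and prepend them to the text."""
    return project(audio_frames, weights) + text_embeddings


if __name__ == "__main__":
    weights = make_projector(AUDIO_DIM, TEXT_DIM)
    audio = [[0.5] * AUDIO_DIM for _ in range(3)]   # 3 fake audio frames
    text = [[0.1] * TEXT_DIM for _ in range(2)]     # 2 fake token embeddings
    sequence = build_input_sequence(audio, text, weights)
    print(len(sequence), "vectors of width", len(sequence[0]))
```

Because the projected audio frames and the text embeddings end up with the same width, a single language model can consume them as one sequence, with no separate ASR-to-text hand-off in between.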

Main highlights:

Processing speed: On a 2024 Mac Mini M4 Pro running the Nexa SDK, OmniAudio-2.6B achieved 35.23 tokens per second in the FP16 GGUF format and 66 tokens per second in the Q4_K_M GGUF format. In comparison, Qwen2-Audio-7B handles only 6.38 tokens per second on similar hardware, which makes OmniAudio-2.6B roughly 5.5 to 10 times faster, depending on the format.

Resource efficiency: The model's compact design reduces reliance on cloud resources, making it well suited to power- and bandwidth-constrained wearables, automotive systems, and IoT devices. It can run efficiently on limited hardware.

High accuracy and flexibility: Although OmniAudio-2.6B focuses on speed and efficiency, it also performs well in terms of accuracy and is suitable for a variety of tasks, including transcription, translation, and summarization. It can deliver accurate results in both real-time speech processing and more complex language tasks.
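
The speed comparison above can be checked with a few lines of arithmetic. The sketch below uses only the throughput figures quoted in this article; the 200-token reply length is an arbitrary assumption added to make the difference concrete, and the helper names are hypothetical. The article does not say which format the Qwen2-Audio-7B figure was measured in, so the ratios are only as comparable as the quoted numbers.

```python
# Throughput figures quoted in the article, in tokens per second.
THROUGHPUT = {
    "OmniAudio-2.6B (FP16 GGUF)": 35.23,
    "OmniAudio-2.6B (Q4_K_M GGUF)": 66.0,
    "Qwen2-Audio-7B": 6.38,
}


def speedup(candidate_tps: float, baseline_tps: float) -> float:
    """Return how many times faster the candidate is than the baseline."""
    if baseline_tps <= 0:
        raise ValueError("baseline throughput must be positive")
    return candidate_tps / baseline_tps


def seconds_for(tokens: int, tokens_per_second: float) -> float:
    """Return the time needed to generate `tokens` at a steady rate."""
    if tokens_per_second <= 0:
        raise ValueError("throughput must be positive")
    return tokens / tokens_per_second


if __name__ == "__main__":
    baseline = THROUGHPUT["Qwen2-Audio-7B"]
    reply_tokens = 200  # assumed reply length, for illustration only
    for name, tps in THROUGHPUT.items():
        print(
            f"{name}: {tps:.2f} tok/s, "
            f"{speedup(tps, baseline):.2f}x baseline, "
            f"{seconds_for(reply_tokens, tps):.1f} s for {reply_tokens} tokens"
        )
```

Steady-state generation speed is only one part of perceived latency; the calculation ignores model load time and audio encoding time, which the article does not report.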

The launch of OmniAudio-2.6B marks another step forward for Nexa AI in audio language models. Its optimized architecture improves processing speed and efficiency and opens up more possibilities for edge computing devices. As IoT and wearable devices become more widespread, OmniAudio-2.6B is expected to play an important role in a range of application scenarios.

Model address: https://huggingface.co/NexaAIDev/OmniAudio-2.6B

Product address: https://nexa.ai/blogs/omniaudio-2.6b

All in all, OmniAudio-2.6B's efficient architecture and strong performance bring a significant change to audio processing on edge devices and lay a solid foundation for the wider adoption of AI applications. Nexa AI's future work in this area is worth watching.