The speech team at Alibaba Tongyi Lab has released CosyVoice 2.0, a new version of its open-source large speech generation model. Compared with the previous generation, CosyVoice 2.0 is markedly more accurate, stable and natural, supports bidirectional streaming speech synthesis, and significantly reduces synthesis latency. The upgrade is not only technical: it also brings a clear improvement in user experience, giving users richer and more convenient speech synthesis services.

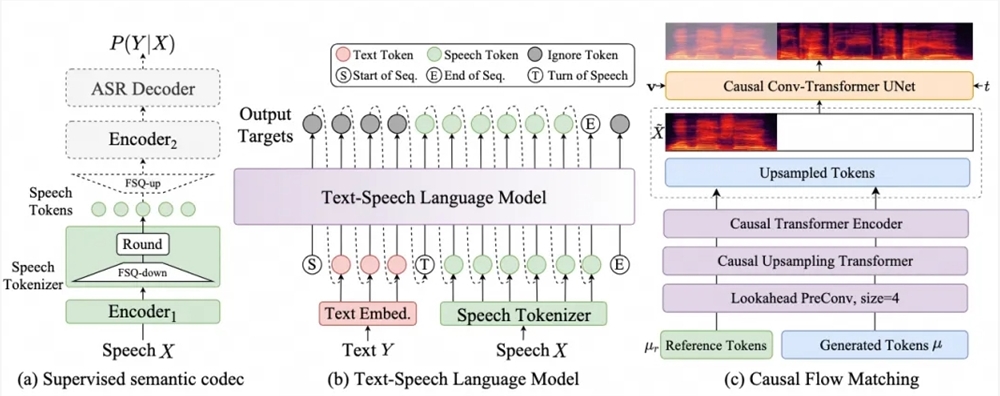

According to the team's announcement, the upgrade to version 2.0 brings a significant improvement in the accuracy, stability and naturalness of generated speech. CosyVoice 2.0 uses a large speech generation model that unifies offline and streaming modeling, which enables bidirectional streaming speech synthesis. First-packet synthesis latency can be as low as 150 ms, which significantly improves the responsiveness of speech synthesis.
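First-packet latency is the time from submitting text until the first audio chunk comes back from the stream. The sketch below shows one way to measure it. It does not use the CosyVoice API: `fake_streaming_tts` is a hypothetical stand-in that sleeps briefly and yields placeholder bytes, and the chunk size and delay are made-up values for illustration only.

```python
import time
from typing import Iterable, Iterator, Tuple


def fake_streaming_tts(text: str, chunk_chars: int = 4,
                       delay_s: float = 0.01) -> Iterator[bytes]:
    """Hypothetical stand-in for a streaming synthesizer (not CosyVoice).

    Yields one placeholder audio chunk per `chunk_chars` characters of text.
    """
    for _ in range(0, len(text), chunk_chars):
        time.sleep(delay_s)      # pretend to synthesize this piece
        yield b"\x00" * 320      # placeholder audio bytes


def measure_first_packet_latency(chunks: Iterable[bytes]) -> Tuple[float, int]:
    """Return (seconds until the first chunk arrived, total chunk count)."""
    start = time.perf_counter()
    first_latency = None
    count = 0
    for _ in chunks:
        if first_latency is None:
            # The first chunk has just arrived: record the elapsed time.
            first_latency = time.perf_counter() - start
        count += 1
    if first_latency is None:
        raise ValueError("stream produced no audio chunks")
    return first_latency, count


if __name__ == "__main__":
    latency, n = measure_first_packet_latency(fake_streaming_tts("hello world!"))
    print(f"first packet after {latency * 1000:.1f} ms, {n} chunks in total")
```

To measure a real system, the stand-in generator would be replaced by whatever iterator of audio chunks the synthesizer's streaming interface returns.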

In terms of pronunciation accuracy, CosyVoice 2.0 reduces the error rate by 30% to 50% compared with the previous version. It achieves the lowest word error rate on the hard subset of the Seed-TTS test set, and performs especially well when synthesizing tongue twisters, polyphonic characters and rare characters. In addition, version 2.0 maintains timbre consistency in zero-shot speech generation and cross-lingual speech synthesis; its cross-lingual synthesis capability in particular is significantly better than that of version 1.0.
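To make the "30% to 50% error rate reduction" figure concrete, the sketch below computes a word error rate with a standard word-level edit distance and then the relative reduction between two error rates. This is a generic illustration, not the evaluation pipeline used for CosyVoice: the function names, the example sentences and the error-rate values are assumptions, and the whitespace tokenization would need to be swapped for per-character tokens to score Chinese text.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] = edit distance between the reference words seen so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1] / len(ref)


def relative_reduction(old_rate: float, new_rate: float) -> float:
    """Fraction by which the error rate dropped, relative to the old rate."""
    return (old_rate - new_rate) / old_rate


if __name__ == "__main__":
    # Illustrative transcripts: one substitution and one deletion.
    wer = word_error_rate("the cat sat on the mat", "the cat sit on mat")
    print(f"WER: {wer:.3f}")
    # Made-up error rates: halving the error rate is a 50% relative reduction.
    print(f"relative reduction: {relative_reduction(0.04, 0.02):.0%}")
```

A relative reduction is measured against the old error rate, so a drop from 4% to 2% counts as 50%, not 2 percentage points.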

CosyVoice 2.0 also improves the prosody, sound quality and emotional fit of synthesized audio. The MOS evaluation score increased from 5.4 to 5.53, which is close to the score of a large commercial speech synthesis model. Version 2.0 also supports finer-grained emotion control and dialect and accent control, giving users a wider choice of languages, including major dialects such as Cantonese, Sichuan dialect, Zhengzhou dialect, Tianjin dialect and Changsha dialect. It also offers role-playing functions, such as imitating a robot or speaking in the style of Peppa Pig.

Beyond improving speech synthesis technology and the user experience, the CosyVoice 2.0 upgrade also supports the growth of the open-source community and encourages more developers to take part in the innovation and application of speech processing technology.

GitHub repository and open-source code (includes the latest CosyVoice 2 update): https://github.com/FunAudioLLM/CosyVoice

Online demo: https://www.modelscope.cn/studios/iic/CosyVoice2-0.5B

Open-source model: https://www.modelscope.cn/models/iic/CosyVoice2-0.5B

The open-source release of CosyVoice 2.0 is expected to further promote the adoption and development of speech synthesis technology, give developers and researchers powerful tools and resources, and encourage more innovative applications. Readers can visit the links above to try the demo and download the model.