Advances in artificial intelligence models have delivered unprecedented computing power, but they have also brought enormous energy consumption and environmental costs. The energy use of OpenAI's latest AI model, o3, and its effect on the environment have attracted widespread attention and discussion. This article looks at the energy consumption, carbon emissions and environmental impact of the o3 model, and at how experts in related fields view the problem and propose to respond to it.

As artificial intelligence continues to advance, the balance between innovation and sustainability becomes an important challenge. Recently, OpenAI launched its latest AI model, o3, which is its most powerful model to date. However, in addition to the cost of running these models, their impact on the environment has also raised widespread concerns.

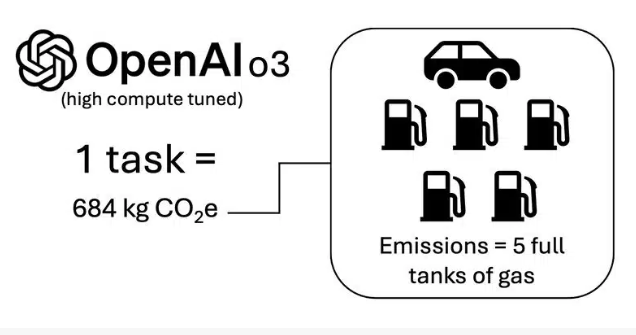

One study showed that each o3 task consumes approximately 1,785 kilowatt-hours of electricity, roughly what an average American household uses in two months. According to analysis by Boris Gamazaychikov, Salesforce's head of AI sustainability, this electricity consumption corresponds to approximately 684 kilograms of carbon dioxide equivalent emissions, comparable to the carbon emissions from burning five full tanks of gasoline.

These figures refer to the high-compute version of o3 as benchmarked under the ARC-AGI framework, and the calculations are based on standard GPU energy consumption and grid emission factors. "As technology continues to expand and integrate, we need to pay more attention to these trade-offs," Gamazaychikov said. He also noted that the calculation does not account for embodied carbon and covers only the energy consumption of the GPUs, so actual emissions may be underestimated.
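The relationship between the two quoted figures can be checked with a little arithmetic. The sketch below is only an illustration: it divides the reported emissions by the reported energy to recover the grid emission factor those numbers imply. The article does not state the factor Gamazaychikov actually used, so the derived value and the function names are assumptions made for this example.

```python
# Figures quoted in the article for one high-compute o3 task.
ENERGY_KWH_PER_TASK = 1785.0    # kilowatt-hours of electricity
EMISSIONS_KG_PER_TASK = 684.0   # kilograms of CO2-equivalent


def implied_emission_factor(energy_kwh: float, emissions_kg: float) -> float:
    """Return the emission factor (kg CO2e per kWh) implied by the two figures."""
    if energy_kwh <= 0:
        raise ValueError("energy_kwh must be positive")
    return emissions_kg / energy_kwh


def emissions_from_energy(energy_kwh: float, factor_kg_per_kwh: float) -> float:
    """Estimate emissions as energy multiplied by a grid emission factor."""
    return energy_kwh * factor_kg_per_kwh


# Derived value, not a number stated in the article.
factor = implied_emission_factor(ENERGY_KWH_PER_TASK, EMISSIONS_KG_PER_TASK)
print(f"Implied emission factor: {factor:.3f} kg CO2e/kWh")

# Monthly household usage implied by the "two months" comparison.
print(f"Implied household usage: {ENERGY_KWH_PER_TASK / 2:.1f} kWh per month")
```

Because the estimate covers GPU energy only, plugging a larger energy figure into `emissions_from_energy` with the same factor gives a rough sense of how the total would grow once other hardware is counted.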

In addition, data scientist Kasper Groes Albin Ludvigsen said that an HGX server equipped with eight Nvidia H100 graphics cards draws between 11 and 12 kilowatts, far more than the 0.7 kilowatts per graphics card would suggest (eight cards at 0.7 kilowatts each come to 5.6 kilowatts).
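The gap Ludvigsen describes can be made concrete by comparing the GPU-only power with the quoted whole-server range. This is a minimal sketch using only the numbers in the paragraph above; the variable names are illustrative, and the ratio is a derived quantity rather than a figure from the source.

```python
GPU_COUNT = 8                           # H100 cards in one HGX server
GPU_POWER_KW = 0.7                      # quoted power per graphics card
SERVER_POWER_KW_RANGE = (11.0, 12.0)    # quoted whole-server power draw

# Power attributable to the GPUs alone.
gpu_only_kw = GPU_COUNT * GPU_POWER_KW

# How many times larger the whole-server draw is than the GPU-only figure.
overhead_ratios = tuple(server_kw / gpu_only_kw for server_kw in SERVER_POWER_KW_RANGE)

print(f"GPU-only power: {gpu_only_kw:.1f} kW")
print(f"Server draw is {overhead_ratios[0]:.2f}x to {overhead_ratios[1]:.2f}x the GPU-only figure")
```

On these numbers the full server draws roughly twice what the GPUs alone account for, which is why an estimate based only on GPU power is likely to understate total consumption.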

On the question of how a task is defined, Pleias co-founder Pierre-Carl Langlais raised concerns about model design, particularly if such designs cannot be scaled down quickly. "There is a lot of drafting, intermediate testing and reasoning required when solving complex math problems," he said.

Earlier this year, research revealed that ChatGPT consumes 10% of the average human’s daily water intake during a single conversation, which is almost half a liter of water. While this number may not seem like much, when millions of people use this chatbot every day, the total water consumption becomes considerable.
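To see how a small per-conversation figure adds up, the sketch below scales the roughly half a liter quoted above by a daily conversation count. The conversation count is purely hypothetical and chosen only for illustration; the article says "millions of people" without giving a number.

```python
WATER_L_PER_CONVERSATION = 0.5   # "almost half a liter", per the article


def total_water_liters(conversations: int,
                       liters_per_conversation: float = WATER_L_PER_CONVERSATION) -> float:
    """Return total water use in liters for a given number of conversations."""
    if conversations < 0:
        raise ValueError("conversations must be non-negative")
    return conversations * liters_per_conversation


# Hypothetical daily volume, NOT a figure from the article.
hypothetical_conversations_per_day = 1_000_000

daily_liters = total_water_liters(hypothetical_conversations_per_day)
print(f"{daily_liters:,.0f} liters per day")
```

Under this assumed volume the total comes to 500,000 liters per day; a different conversation count scales the result linearly.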

Kathy Baxter, chief architect of artificial intelligence technology at Salesforce, warned that AI advancements like OpenAI's o3 model may be subject to the Jevons paradox, in which efficiency gains lead to greater overall resource use. "While the energy required may decrease, water consumption may increase," she said.

AI data centers face challenges such as high energy consumption, complex cooling requirements and extensive physical infrastructure. Companies such as Synaptics and embedUR are trying to address these problems through edge AI, which reduces dependence on data centers, lowers latency and energy consumption, and makes real-time decisions possible at the device level.

Highlights:

Each o3 task consumes about as much electricity as an average American household uses in two months.

Each task emits as much carbon dioxide equivalent as five full tanks of gasoline.

A single ChatGPT conversation consumes about 10% of the average person's daily water intake.

All in all, the high energy consumption and environmental impact of AI models cannot be ignored. While pursuing technological progress, we need to actively explore greener and more sustainable paths for AI development, balancing innovation with environmental protection so that artificial intelligence can develop sustainably.