Smooth human-machine dialogue is an important goal in artificial intelligence. However, AI systems often struggle to judge the "end of turn", which makes conversations frustrating. Users frequently find the AI interrupting them or responding late, which seriously harms the efficiency and naturalness of human-computer interaction. Traditional voice activity detection (VAD) methods are too simple: they are easily thrown off by environmental noise and user pauses, and cannot reliably tell when a speaker has finished their turn.

In human-machine dialogue, the trickiest question of all is: "Have you finished speaking?" It sounds simple, yet it has tripped up countless voice assistants and customer-service bots. You have probably run into this yourself: you pause for a moment to think about what to say next, and the AI can't wait to jump in; or you have clearly finished, but the AI just keeps waiting, until you can't help saying "I'm done", and even then it doesn't react. It is maddening.

This is not the AI being deliberately difficult. When it judges the "End of Turn" (EOT), it is like a listener who can only tell whether there is sound, not whether you have actually finished what you meant to say. The traditional approach relies mainly on voice activity detection (VAD), which works like a "voice-activated switch": it only tracks whether a voice signal is present, and as soon as the audio goes quiet, it concludes that you have finished speaking. No wonder it is so easily confused by pauses and background noise. It is simply too simple!
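To make the "voice-activated switch" idea concrete, here is a minimal Python sketch of a silence-timeout endpointer. It is a toy, not LiveKit's code or any particular VAD library: `is_speech` simply thresholds per-frame energy, and the frame length, energy threshold, and 500 ms timeout are hypothetical values chosen for illustration. It shows how a thinking pause longer than the timeout gets mistaken for the end of a turn.

```python
from typing import List, Optional

# Hypothetical parameters, chosen only for illustration.
FRAME_MS = 20             # duration of one audio frame
ENERGY_THRESHOLD = 0.01   # frames above this energy count as "speech"
SILENCE_TIMEOUT_MS = 500  # silence this long is treated as end of turn


def is_speech(frame_energy: float) -> bool:
    """Toy VAD: a frame is speech if its energy exceeds a fixed threshold."""
    return frame_energy > ENERGY_THRESHOLD


def vad_end_of_turn(energies: List[float]) -> Optional[int]:
    """Return the frame index at which a silence-only endpointer declares
    end of turn, or None if it never does."""
    heard_speech = False
    silent_ms = 0
    for i, energy in enumerate(energies):
        if is_speech(energy):
            heard_speech = True
            silent_ms = 0  # any sound resets the silence timer
        elif heard_speech:
            silent_ms += FRAME_MS
            if silent_ms >= SILENCE_TIMEOUT_MS:
                return i  # only silence matters; meaning is ignored
    return None


if __name__ == "__main__":
    speech = [0.2] * 50  # 1 s of speech (50 frames x 20 ms)
    pause = [0.0] * 30   # a 600 ms pause to think
    print(vad_end_of_turn(speech + pause + speech))  # 74
```

Here the user speaks for one second, pauses for 600 ms, then continues; the endpointer fires at frame 74, partway through the pause and before the user resumes at frame 80. Raising the timeout would avoid this particular cut-off, but would make the agent slower to reply every time the user really is done, which is the trade-off a silence-only approach cannot escape.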

Recently, however, a company called LiveKit decided it had had enough and set out to give AI a smarter "brain". It has released an open-source turn detection model that, like a true "mind reader", aims to determine accurately whether you have finished speaking. Rather than a simple "voice-activated switch", it is an "intelligent assistant" that tries to understand the intent behind your words!

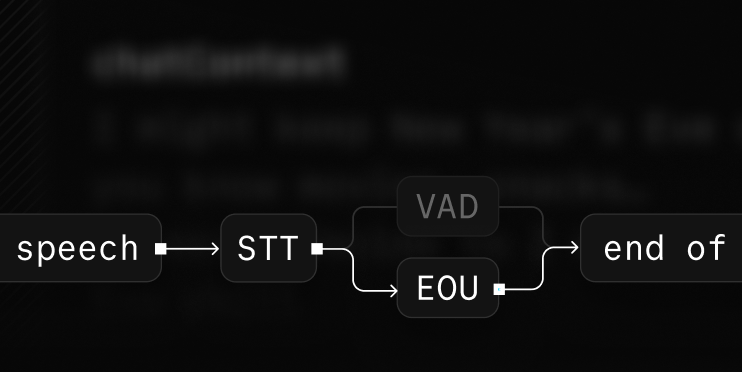

What sets LiveKit's model apart is that it does not rely solely on "is there sound?" Instead, it combines a Transformer model with traditional voice activity detection (VAD). It is like equipping the AI with both a "Shunfeng Ear" (the all-hearing ear of Chinese folklore) and a "super brain": the ear listens for whether anyone is speaking, while the brain analyzes the meaning of what was said to judge whether your sentence is complete or still has something left unsaid. Together, the two make far more accurate end-of-turn detection possible.
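One plausible way to combine the two signals is to let VAD measure how long the user has been silent, and let a semantic end-of-utterance score decide how long that silence must last before the agent replies. The sketch below assumes exactly that design; it is not LiveKit's implementation. In particular, `toy_eou_probability` is a hypothetical keyword heuristic standing in for the Transformer, and `EOU_THRESHOLD`, `MIN_SILENCE_MS`, and `MAX_SILENCE_MS` are illustrative values.

```python
# Hypothetical tuning values, for illustration only.
EOU_THRESHOLD = 0.5     # semantic score at or above which the turn looks complete
MIN_SILENCE_MS = 300    # short wait when the sentence seems finished
MAX_SILENCE_MS = 2000   # long wait when the sentence seems unfinished

# Words that usually signal the speaker has more to say (toy heuristic).
INCOMPLETE_ENDINGS = {"and", "but", "so", "because", "um", "uh", "the", "a", "to"}


def toy_eou_probability(transcript: str) -> float:
    """Hypothetical stand-in for a Transformer end-of-utterance model.

    Returns a rough probability that the transcript is a complete turn.
    """
    text = transcript.strip().lower()
    words = text.rstrip(".?!,").split()
    if not words:
        return 0.0
    if text.endswith(",") or words[-1] in INCOMPLETE_ENDINGS:
        return 0.1  # trailing comma, conjunction, or filler: likely mid-thought
    if text.endswith((".", "?", "!")):
        return 0.9  # sentence-final punctuation: likely complete
    return 0.5      # no strong cue either way


def end_of_turn(silence_ms: int, transcript: str) -> bool:
    """Combine VAD (silence_ms) with the semantic score to decide end of turn."""
    p = toy_eou_probability(transcript)
    # Semantically complete -> reply after a short pause; otherwise wait longer.
    required_ms = MIN_SILENCE_MS if p >= EOU_THRESHOLD else MAX_SILENCE_MS
    return silence_ms >= required_ms


if __name__ == "__main__":
    print(end_of_turn(600, "I'd like to book a flight to"))         # False
    print(end_of_turn(600, "I'd like to book a flight to Paris."))  # True
    print(end_of_turn(2500, "I'd like to book a flight to"))        # True
```

With the same 600 ms of silence, the agent keeps waiting when the transcript ends on a dangling "to" but replies once the sentence is complete. A real model would replace the keyword rules with a learned probability, while the maximum timeout still guarantees that the agent eventually responds.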

What can this model do? It lets voice assistants, customer-service bots, and other AI agents judge more accurately whether you have finished speaking before they reply. This should make human-machine dialogue noticeably smoother and more natural. In the future, you won't have to worry about the AI cutting you off or "playing dumb" when you chat with it!

To back up its claims, LiveKit also shared test results: according to the company, the new model reduces the AI's unintended interruptions by 85%. This means AI agents come across as more natural and less prone to misjudgment, and phone conversations between people and AI become smoother and more pleasant. Imagine calling customer service and no longer being frustrated by mechanical replies, but chatting as comfortably as you would with a real person. That would be quite an experience!

Moreover, the model is especially well suited to scenarios built around human-machine dialogue, such as voice customer service and intelligent question-answering bots. LiveKit also released a demo video in which the AI agent, once the user starts asking a question, waits patiently until the user has given all the information before answering. It behaves like an attentive listener who truly understands your needs: it won't cut in before you have finished, and it won't sit there silent once you have.

Of course, the model has only just been open-sourced and still has plenty of room for improvement. But there is good reason to believe that as the technology develops, human-machine conversations will become more natural, fluid, and intelligent. Perhaps one day we will forget that we are talking to a cold machine at all, and feel instead that we are talking to an "AI partner" that truly understands us.

Project address: https://github.com/livekit/agents/tree/main/livekit-plugins/livekit-plugins-turn-detector

LiveKit's open-source model offers a new approach to the "end of turn" problem in human-computer dialogue and marks a step toward more natural, smoother human-computer interaction. We look forward to seeing it refined and more widely adopted, bringing users a more convenient and intelligent conversational experience.