Answer.AI and LightOn have jointly released ModernBERT, an open-source language model that substantially improves on Google's BERT in speed, efficiency, and quality. ModernBERT runs four times faster than BERT, uses less memory, and handles inputs of up to 8192 tokens, 16 times longer than the typical limit of existing encoder models. It is also the first encoder model trained extensively on programming code, scoring over 80 on the StackOverflow Q&A dataset, a new record for encoder models. Just as importantly, ModernBERT sharply reduces the cost of large-scale text processing and runs on ordinary consumer-grade hardware, which makes it more cost-effective than models such as GPT-4.

ModernBERT's design lets it handle inputs of up to 8192 tokens, a 16-fold increase over the typical 512-token limit of existing encoder models. In addition, ModernBERT is the first encoder model trained extensively on programming code, and its score of over 80 on the StackOverflow Q&A dataset sets a new record for encoder models.
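To make the 16-fold figure concrete, the sketch below counts how many windows a long document must be split into at each context length. The function name `chunks_needed` and the optional `overlap` parameter are illustrative choices, not part of ModernBERT or any library; only the 512 and 8192 limits come from the article.

```python
import math

BERT_MAX_TOKENS = 512          # typical limit of older encoder models
MODERNBERT_MAX_TOKENS = 8192   # ModernBERT's limit, as reported above


def chunks_needed(doc_tokens: int, window: int, overlap: int = 0) -> int:
    """Return how many windows of `window` tokens cover `doc_tokens` tokens.

    Consecutive windows share `overlap` tokens (hypothetical parameter,
    added only to show the effect of overlapping chunks).
    """
    if not 0 <= overlap < window:
        raise ValueError("overlap must be in the range [0, window)")
    if doc_tokens <= 0:
        return 0
    if doc_tokens <= window:
        return 1
    stride = window - overlap
    # One full window, plus enough strides to reach the end of the document.
    return 1 + math.ceil((doc_tokens - window) / stride)


doc = 8192  # a document that exactly fills ModernBERT's context
print(chunks_needed(doc, BERT_MAX_TOKENS))        # 16 separate passes
print(chunks_needed(doc, MODERNBERT_MAX_TOKENS))  # 1 pass
```

With overlapping chunks, which are common in retrieval pipelines, the gap widens further because the shorter window repeats part of its input on every pass.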

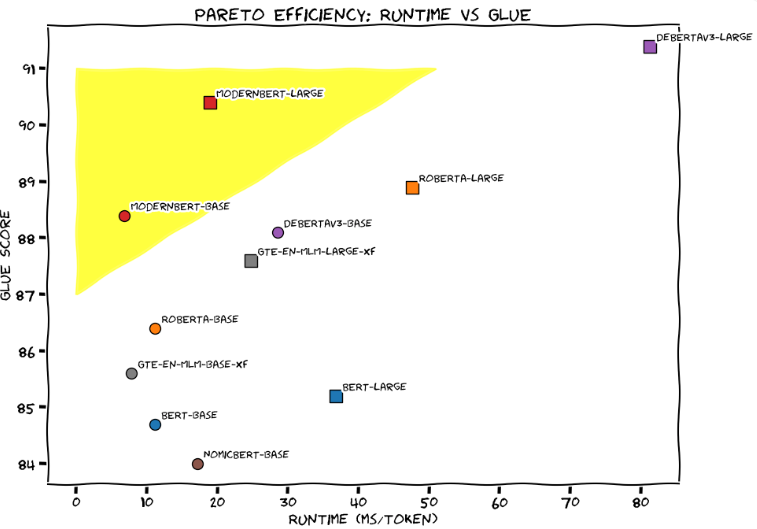

On the General Language Understanding Evaluation (GLUE) benchmark, ModernBERT-Large achieved the best balance of processing speed and accuracy, at approximately 20 milliseconds per token with a score of 90. The development team likened ModernBERT to a tuned Honda Civic, emphasizing its reliability and efficiency in everyday applications.

Compared with large language models such as GPT-4, ModernBERT significantly reduces the cost of large-scale text processing. GPT-4 costs several cents per query, while ModernBERT runs locally and is both faster and cheaper. For example, when the FineWeb Edu project filtered 15 trillion tokens, using a BERT-based model cost US$60,000, whereas the same job would have cost more than US$1 million even with Google's Gemini Flash, a low-cost decoder model.
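The two cost figures can be reproduced with simple arithmetic. The sketch below is illustrative only: the GPU hours, the hourly GPU rate, and the per-million-token API price are assumptions that this article does not state, chosen to match the totals quoted above. The corpus is taken to be 15 trillion tokens, the scale at which a per-token API price produces a seven-figure bill.

```python
def encoder_filter_cost(gpu_hours: float, usd_per_gpu_hour: float) -> float:
    """Cost of running a self-hosted encoder for a fixed number of GPU hours."""
    return gpu_hours * usd_per_gpu_hour


def api_decoder_cost(total_tokens: int, usd_per_million_tokens: float) -> float:
    """Cost of sending every token through a pay-per-token decoder API."""
    return total_tokens / 1_000_000 * usd_per_million_tokens


# Assumed inputs (not given in the article).
GPU_HOURS = 6_000                    # assumed H100 hours for the filtering run
USD_PER_GPU_HOUR = 10.0              # assumed hourly rental price
TOTAL_TOKENS = 15_000_000_000_000    # 15 trillion tokens
USD_PER_MILLION_TOKENS = 0.075       # assumed low-end API input price

encoder_cost = encoder_filter_cost(GPU_HOURS, USD_PER_GPU_HOUR)
decoder_cost = api_decoder_cost(TOTAL_TOKENS, USD_PER_MILLION_TOKENS)

print(f"encoder: ${encoder_cost:,.0f}")
print(f"decoder API: ${decoder_cost:,.0f}")
print(f"ratio: {decoder_cost / encoder_cost:.1f}x")
```

Under these assumptions the encoder route comes to US$60,000 and the API route to a little over US$1.1 million, roughly a 19-fold difference.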

The development team says ModernBERT is well suited to a range of practical applications, including retrieval-augmented generation (RAG) systems, code search, and content moderation. Unlike GPT-4, which requires specialized hardware, ModernBERT runs effectively on ordinary consumer gaming GPUs.

ModernBERT is currently available in two versions: a base model with 149 million parameters and a large model with 395 million parameters. Both have been released on Hugging Face, and users can swap them in directly for existing BERT models. The development team plans to release a larger version next year but has no plans for multimodal capabilities. To encourage new applications, the team is also running a contest that will award $100 and a six-month Hugging Face Pro subscription to each of the five best demos.
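As a rough illustration of what a direct replacement can look like, the sketch below loads a checkpoint through the `transformers` Auto classes and fills in a masked token. The Hub IDs `answerdotai/ModernBERT-base` and `answerdotai/ModernBERT-large` are assumptions not stated in this article, the helper names are hypothetical, and a recent `transformers` release plus `torch` and network access are assumed.

```python
from typing import Dict

# Assumed Hugging Face Hub IDs; confirm them on the model pages before use.
MODERNBERT_CHECKPOINTS: Dict[str, str] = {
    "base": "answerdotai/ModernBERT-base",
    "large": "answerdotai/ModernBERT-large",
}


def load_encoder(size: str = "base"):
    """Load tokenizer and masked-LM model for the chosen checkpoint."""
    model_id = MODERNBERT_CHECKPOINTS[size]
    # Imported lazily so this module can be imported without the heavy deps.
    from transformers import AutoModelForMaskedLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForMaskedLM.from_pretrained(model_id)
    return tokenizer, model


def fill_mask(text: str, size: str = "base") -> str:
    """Return the model's top prediction for the first mask token in `text`."""
    import torch

    tokenizer, model = load_encoder(size)
    inputs = tokenizer(text, return_tensors="pt")
    is_mask = inputs["input_ids"][0] == tokenizer.mask_token_id
    mask_positions = is_mask.nonzero(as_tuple=True)[0]
    if len(mask_positions) == 0:
        raise ValueError("text must contain the tokenizer's mask token")
    with torch.no_grad():
        logits = model(**inputs).logits
    predicted_id = logits[0, mask_positions[0]].argmax(dim=-1).item()
    return tokenizer.decode([predicted_id])


if __name__ == "__main__":
    # Downloads the checkpoint on first run.
    print(fill_mask("The capital of France is [MASK]."))
```

In code that already uses the Auto classes with a BERT checkpoint, the change usually amounts to editing the model ID string, although fine-tuned task heads still need to be retrained on the new backbone.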

Since Google introduced BERT in 2018, it has remained one of the most popular language models, with more than 68 million monthly downloads on Hugging Face.

Project page: https://huggingface.co/blog/modernbert

Highlights:

ModernBERT is four times faster than BERT and can handle text up to 8192 tokens long.

Compared with GPT-4, ModernBERT is significantly cheaper and more efficient for large-scale text processing.

The model is particularly strong at encoding programming code, scoring over 80 on the StackOverflow Q&A dataset, a new record for encoder models.

In short, the open-source release of ModernBERT gives developers an efficient, economical, and capable language model. Its advantages in speed, efficiency, and long-text handling are expected to encourage the development of more AI applications, and future updates should extend its reach further.