Zhiyuan Research Institute and Tencent jointly released LongBench v2, a benchmark for evaluating the long-text understanding and reasoning capabilities of large language models (LLMs). Compared with its predecessor, LongBench v2 substantially increases both context length and difficulty. It contains 503 challenging four-option multiple-choice questions, hard enough that even human experts struggle to answer them accurately under time pressure. The benchmark covers six major task categories and refines the evaluation methodology to make results more reliable and accurate. The release aims to drive progress in long-text processing by LLMs and to give researchers a more effective evaluation tool.

At a press conference on December 19, 2024, Zhiyuan Research Institute and Tencent announced LongBench v2, a benchmark designed to evaluate how deeply LLMs understand and reason over long texts in realistic multitask settings. The benchmark is intended to advance long-context understanding and reasoning and to address the challenges that currently arise when LLMs are applied to long texts.

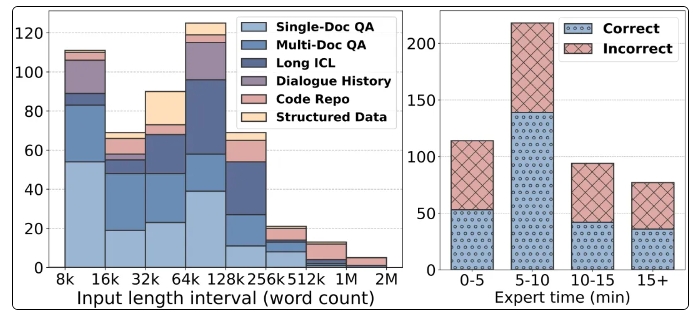

Notable features of LongBench v2 include much longer contexts, ranging from 8k to 2M words, and 503 difficult four-option multiple-choice questions. Even human experts working under a 15-minute time limit reach an average accuracy of only 53.7%. The benchmark also spans six main task categories (single-document QA, multi-document QA, long in-context learning, long-dialogue history understanding, code repository understanding, and long structured data understanding), covering a wide range of application scenarios.
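Because every question has exactly four options, scoring reduces to exact-match accuracy against the gold letter, and uniform random guessing gives a 25% baseline against which the 53.7% expert figure can be read. The Python sketch below illustrates that scoring step. It is not the official evaluation script: the answer phrase "The correct answer is (X)", the record layout, and the function names `extract_choice` and `accuracy_by_category` are hypothetical choices made for illustration.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, Optional, Tuple

# Four answer options, so uniform random guessing scores 1/4 on average.
CHOICES = ("A", "B", "C", "D")
RANDOM_BASELINE = 1 / len(CHOICES)

# Assumption (hypothetical): the prompt asks the model to end its reply
# with "The correct answer is (X)"; parentheses are optional here.
ANSWER_PATTERN = re.compile(r"The correct answer is \(?([ABCD])\)?")


def extract_choice(model_output: str) -> Optional[str]:
    """Return the predicted letter, or None if no answer can be parsed."""
    match = ANSWER_PATTERN.search(model_output)
    return match.group(1) if match else None


def accuracy_by_category(
    records: Iterable[Tuple[str, str, str]],
) -> Tuple[Dict[str, float], float]:
    """records: (task category, raw model output, gold letter) triples.

    An unparseable output counts as wrong rather than being skipped,
    so a model cannot raise its score by declining to answer.
    """
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for category, output, gold in records:
        total[category] += 1
        if extract_choice(output) == gold:
            correct[category] += 1
    if not total:
        raise ValueError("no records to score")
    per_category = {c: correct[c] / total[c] for c in total}
    overall = sum(correct.values()) / sum(total.values())
    return per_category, overall


if __name__ == "__main__":
    # Hypothetical model outputs, for illustration only.
    sample = [
        ("single-document QA", "The correct answer is (B)", "B"),
        ("multi-document QA", "I am not sure.", "D"),
    ]
    per_cat, overall = accuracy_by_category(sample)
    print(per_cat, overall, "random baseline:", RANDOM_BASELINE)
```

Reporting per-category accuracy alongside the overall figure makes it easier to see which of the six task types a model struggles with.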

To keep the evaluation reliable, every question in LongBench v2 is multiple-choice and went through a rigorous manual annotation and review process. During data collection, annotators were recruited from top universities to ensure the quality and difficulty of the questions. In addition, LongBench v2 extends the standard Bradley-Terry ranking model with control variables, reducing the influence of confounding factors and making model rankings more rigorous and accurate.
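The article does not say how the control variables enter the ranking model. One common formulation (used, for example, for style control on pairwise-comparison leaderboards) adds covariate terms to the Bradley-Terry logit, so that P(i beats j) = sigmoid(s_i - s_j + gamma · (x_i - x_j)), where s are model strengths and x are confounders such as response length. The Python sketch below fits that generic model by gradient ascent. It is an illustration under these assumptions, not LongBench v2's actual implementation; the function name `fit_controlled_bt`, the choice of confounder, and the toy data are all hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# One pairwise outcome: (winner index, loser index, confounders of winner minus loser).
Comparison = Tuple[int, int, Sequence[float]]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_controlled_bt(
    n_models: int,
    n_features: int,
    comparisons: Sequence[Comparison],
    lr: float = 0.1,
    epochs: int = 2000,
    l2: float = 1e-3,
) -> Tuple[List[float], List[float]]:
    """Fit P(i beats j) = sigmoid(s_i - s_j + gamma . (x_i - x_j)).

    Full-batch gradient ascent on the mean log-likelihood with a small
    L2 penalty. Returns (strengths s, confounder coefficients gamma).
    """
    s = [0.0] * n_models
    gamma = [0.0] * n_features
    n = len(comparisons)
    for _ in range(epochs):
        grad_s = [0.0] * n_models
        grad_g = [0.0] * n_features
        for winner, loser, dx in comparisons:
            z = s[winner] - s[loser] + sum(g * d for g, d in zip(gamma, dx))
            resid = 1.0 - sigmoid(z)  # derivative of log(sigmoid(z)) w.r.t. z
            grad_s[winner] += resid
            grad_s[loser] -= resid
            for k, d in enumerate(dx):
                grad_g[k] += resid * d
        s = [v + lr * (gs / n - l2 * v) for v, gs in zip(s, grad_s)]
        gamma = [v + lr * (gg / n - l2 * v) for v, gg in zip(gamma, grad_g)]
        # Strengths are only defined up to a shared offset; keep them centered at 0.
        mean = sum(s) / n_models
        s = [v - mean for v in s]
    return s, gamma


if __name__ == "__main__":
    # Hypothetical data: two equally strong models where the longer
    # response usually wins, regardless of which model produced it.
    comps = (
        [(0, 1, [1.0])] * 4 + [(1, 0, [-1.0])]
        + [(1, 0, [1.0])] * 4 + [(0, 1, [-1.0])]
    )
    strengths, length_effect = fit_controlled_bt(2, 1, comps)
    print("strengths:", strengths, "length effect:", length_effect)
```

Because gamma absorbs the share of wins explained by the confounder, the fitted strengths reflect what remains; with every confounder difference set to zero, the model reduces to a plain Bradley-Terry fit.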

On the evaluation side, the research team tested 10 open-source and 6 closed-source LLMs and found that model performance improved significantly once control variables were introduced. In particular, GPT-4o performed well on tasks such as multi-document question answering and long in-context learning when given more reasoning steps, highlighting the importance of reasoning ability.

Beyond providing a new tool for evaluating large language models, LongBench v2 points to directions for future research, emphasizing the need to strengthen models' own understanding and reasoning capabilities. The collaboration between Zhiyuan Research Institute and Tencent is a further step forward for the AI field, and the benchmark is expected to advance long-text understanding and reasoning technology.

Home page: https://longbench2.github.io

Paper: https://arxiv.org/abs/2412.15204

Data and code: https://github.com/THUDM/LongBench

The release of LongBench v2 marks a new stage in large language model evaluation. Its more stringent evaluation standards and broader test coverage should help drive continued improvement in LLMs' long-text understanding and reasoning. We look forward to future research built on LongBench v2 that further advances AI technology.