Apple has announced a significant speed-up in large language model (LLM) text generation. Working with NVIDIA, it used its open-source Recurrent Drafter (ReDrafter) technique to make generation nearly three times faster. ReDrafter is a speculative decoding method that significantly improves inference efficiency, and it is now integrated into NVIDIA's TensorRT-LLM inference acceleration framework, which further reduces cost and latency. The collaboration makes development more efficient and gives users a faster service experience, and it signals Apple's intent to keep innovating in AI. This article covers the Apple and NVIDIA collaboration and the advantages of ReDrafter.

Apple's latest machine learning research shows that, in cooperation with NVIDIA, it has increased the generation speed of large language models (LLMs) by nearly three times. The key to this progress is Apple’s open-source technique “Recurrent Drafter” (ReDrafter), a speculative decoding method that significantly improves the efficiency of model inference.

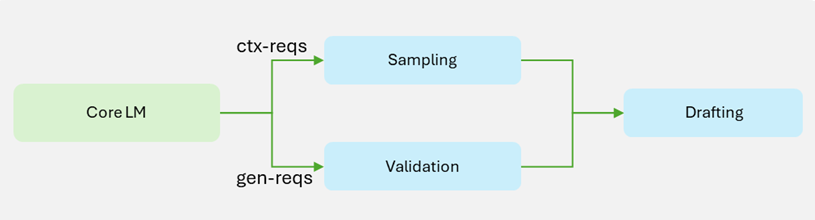

Running large language models has traditionally been slow and resource-intensive, and companies often have to buy large amounts of hardware, which raises operating costs. Earlier in 2024, Apple released ReDrafter, a technique that combines a recurrent neural network (RNN) draft model with beam search and dynamic tree attention to quickly propose and verify tokens. Apple reports that it reaches up to 3.5 tokens per generation step, compared with one token per step for conventional autoregressive decoding.
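The draft-then-verify idea behind speculative decoding can be illustrated with a minimal sketch. This is not ReDrafter itself: it omits the RNN draft head, beam search, and dynamic tree attention, and it uses two hypothetical toy "models" (`toy_target` and `toy_draft`) that are plain Python functions. It only shows why accepted draft tokens let the large model emit several tokens per verification step while producing the same output as ordinary greedy decoding.

```python
from typing import Callable, List, Tuple

Token = int
NextToken = Callable[[List[Token]], Token]  # maps a context to its greedy next token


def greedy_decode(model: NextToken, prompt: List[Token], max_new_tokens: int) -> List[Token]:
    """Ordinary autoregressive decoding: one model call per generated token."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        tokens.append(model(tokens))
    return tokens


def speculative_decode(
    target: NextToken,
    draft: NextToken,
    prompt: List[Token],
    max_new_tokens: int,
    draft_len: int = 4,
) -> Tuple[List[Token], int]:
    """Greedy speculative decoding; returns (tokens, number of verification steps)."""
    tokens = list(prompt)
    verify_steps = 0
    while len(tokens) - len(prompt) < max_new_tokens:
        # 1. The cheap draft model proposes draft_len tokens autoregressively.
        ctx = list(tokens)
        proposal = []
        for _ in range(draft_len):
            t = draft(ctx)
            proposal.append(t)
            ctx.append(t)

        # 2. The target model checks the proposal. A real system scores all
        #    positions in one batched forward pass; here it is simulated
        #    with per-position calls and counted as a single step.
        verify_steps += 1
        ctx = list(tokens)
        accepted = []
        for t in proposal:
            expected = target(ctx)
            if expected != t:
                accepted.append(expected)  # replace the first wrong token and stop
                break
            accepted.append(t)
            ctx.append(t)
        else:
            accepted.append(target(ctx))  # every draft token matched: one bonus token
        tokens.extend(accepted)
    return tokens[: len(prompt) + max_new_tokens], verify_steps


# Hypothetical toy models: the target counts upward modulo 10;
# the draft agrees except that it wrongly predicts 0 after a 4.
def toy_target(ctx: List[Token]) -> Token:
    return (ctx[-1] + 1) % 10


def toy_draft(ctx: List[Token]) -> Token:
    return 0 if ctx[-1] % 5 == 4 else (ctx[-1] + 1) % 10


baseline = greedy_decode(toy_target, [2], 12)
output, steps = speculative_decode(toy_target, toy_draft, [2], 12)
print(output == baseline, steps)
```

Because every emitted token is either confirmed or supplied by the target model, the result matches plain greedy decoding; the gain comes from needing fewer target-model steps when the draft is usually right.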

This week, Apple announced that, together with NVIDIA, it has integrated ReDrafter into NVIDIA's TensorRT-LLM inference acceleration framework. Machine learning developers using NVIDIA GPUs can now take advantage of ReDrafter's acceleration in production environments. High-performance multi-GPU servers are usually expensive, so reducing latency while also reducing the amount of hardware required makes for a more economical solution.

In benchmarks run with NVIDIA, ReDrafter significantly improved generation throughput, with a 2.7x increase in tokens generated per second under greedy decoding. This means developers can get more results in less time and provide users with a faster service experience.
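To make the 2.7x figure concrete, the short calculation below shows what it would mean for response time. The baseline throughput and response length are hypothetical numbers chosen only for illustration; only the 2.7x factor comes from the reported benchmark.

```python
def generation_time(num_tokens: int, tokens_per_second: float) -> float:
    """Seconds needed to generate num_tokens at a steady throughput."""
    return num_tokens / tokens_per_second


baseline_tps = 100.0   # hypothetical baseline throughput (tokens per second)
speedup = 2.7          # reported tokens-per-second gain under greedy decoding
response_tokens = 1000 # hypothetical response length

before = generation_time(response_tokens, baseline_tps)
after = generation_time(response_tokens, baseline_tps * speedup)
print(f"before: {before:.2f} s, after: {after:.2f} s")
```

At a fixed response length, a 2.7x throughput gain cuts generation time to about 37% of the original (1 / 2.7), regardless of the baseline chosen.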

Apple has also said it is evaluating Amazon's Trainium2 chip to improve model training efficiency. It expects pre-training on Trainium2 to be up to 50% more efficient than on existing hardware.

Official blog: https://developer.nvidia.com/blog/nvidia-tensorrt-llm-now-supports-recurrent-drafting-for-optimizing-llm-inference/

Highlights:

Apple partners with NVIDIA to nearly triple the speed of large language model generation.

The open-source ReDrafter technique uses a recurrent neural network draft model to significantly improve model inference efficiency.

This collaboration helps reduce costs and provide more efficient solutions for machine learning developers.

All in all, the cooperation between Apple and NVIDIA and the integration of ReDrafter into TensorRT-LLM bring significant efficiency gains and cost reductions to deploying large language models. Developers get a more economical way to serve models, and users get faster responses.