NVIDIA launched the GB300 and B300 GPUs just six months after the GB200 and B200, delivering significant performance gains, especially for reasoning-model inference. This is more than a routine hardware refresh: it reflects a shift in NVIDIA's AI-acceleration strategy that will be felt across the industry. The core of the upgrade is a large jump in inference performance, together with memory and architectural optimizations that directly affect the efficiency and cost of serving large language models.

Only six months after the release of the GB200 and B200, NVIDIA has launched two new GPUs, the GB300 and B300. This may look like a minor upgrade, but the changes are substantial, above all the large improvement in reasoning-model inference performance.

B300/GB300: A huge leap in inference performance

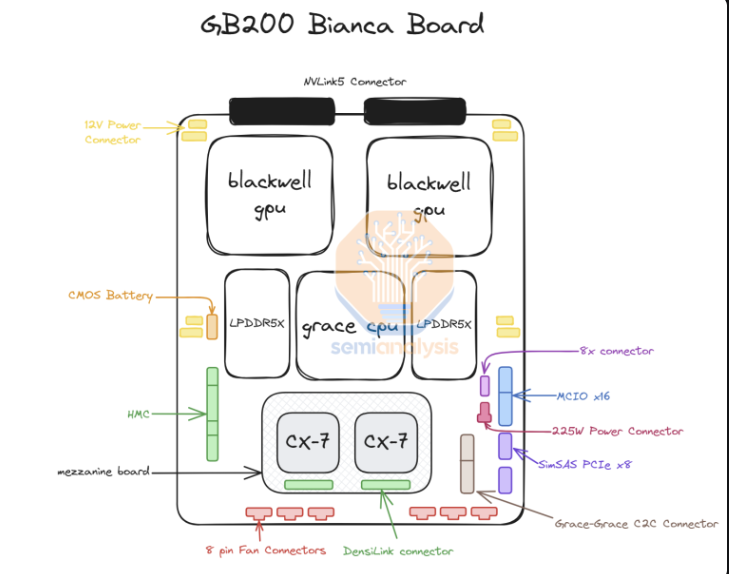

The B300 GPU is built on TSMC's 4NP process node with an optimized compute die, which gives the B300 50% more FLOPS than the B200. Part of that gain comes from a higher TDP: the GB300 and B300 HGX reach 1.4 kW and 1.2 kW respectively, compared with 1.2 kW and 1 kW for the GB200 and B200. The rest comes from architectural enhancements and system-level optimizations, such as dynamic power allocation between the CPU and GPU.
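To see how much of the FLOPS gain the higher power budget could account for, the quoted figures can be put side by side. The following is a minimal sketch, not a measurement: it assumes the 50% FLOPS figure applies to the HGX configuration and that the TDP numbers are directly comparable. The function and variable names are illustrative.

```python
# TDP figures (kW) as quoted in the text.
TDP_KW = {
    "B200 HGX": 1.0,
    "B300 HGX": 1.2,
    "GB200": 1.2,
    "GB300": 1.4,
}
FLOPS_GAIN = 1.50  # B300 vs. B200, as quoted in the text


def relative_increase(new: float, old: float) -> float:
    """Fractional increase of `new` over `old` (0.2 means +20%)."""
    return new / old - 1.0


def perf_per_watt_gain(flops_gain: float, tdp_new: float, tdp_old: float) -> float:
    """FLOPS-per-watt ratio, assuming FLOPS and TDP are measured the same way."""
    return flops_gain / (tdp_new / tdp_old)


hgx_tdp_increase = relative_increase(TDP_KW["B300 HGX"], TDP_KW["B200 HGX"])
gb_tdp_increase = relative_increase(TDP_KW["GB300"], TDP_KW["GB200"])
# Assumption: the 50% FLOPS gain is applied to the HGX pairing only.
hgx_ppw = perf_per_watt_gain(FLOPS_GAIN, TDP_KW["B300 HGX"], TDP_KW["B200 HGX"])

print(f"HGX TDP increase:   {hgx_tdp_increase:.1%}")
print(f"GB TDP increase:    {gb_tdp_increase:.1%}")
print(f"HGX FLOPS per watt: {hgx_ppw:.2f}x")
```

Under these assumptions, power rises by about 20% on HGX while FLOPS rise by 50%. That leaves roughly a 1.25x gain in FLOPS per watt to be explained by the architectural and system-level changes.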

Beyond the FLOPS increase, the memory has been upgraded to 12-Hi HBM3E, raising HBM capacity to 288 GB per GPU. The pin speed is unchanged, however, so memory bandwidth per GPU remains 8 TB/s. It is worth noting that Samsung did not make it into the supply chain for either the GB200 or the GB300.
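The capacity figure follows from simple stack arithmetic. The sketch below assumes 8 HBM stacks per GPU and 24 Gb (3 GB) DRAM dies, with the B200 using 8-Hi stacks. None of these three values is stated in the text, so treat them as illustrative assumptions. It also shows one consequence of capacity growing while bandwidth stays flat: a full pass over memory takes longer.

```python
def hbm_capacity_gb(stacks: int, dies_per_stack: int, gb_per_die: int) -> int:
    """Total HBM capacity in GB for a GPU package."""
    return stacks * dies_per_stack * gb_per_die


def full_sweep_ms(capacity_gb: float, bandwidth_tb_per_s: float) -> float:
    """Time to read all of HBM once, in ms (GB divided by TB/s yields ms)."""
    return capacity_gb / bandwidth_tb_per_s


STACKS = 8         # assumption: 8 HBM3E stacks per GPU
GB_PER_DIE = 3     # assumption: 24 Gb DRAM dies
BANDWIDTH_TB = 8.0  # per-GPU bandwidth quoted in the text

b200_gb = hbm_capacity_gb(STACKS, 8, GB_PER_DIE)   # assumption: 8-Hi stacks
b300_gb = hbm_capacity_gb(STACKS, 12, GB_PER_DIE)  # 12-Hi stacks, per the text

print(f"B200 capacity: {b200_gb} GB, full sweep {full_sweep_ms(b200_gb, BANDWIDTH_TB):.0f} ms")
print(f"B300 capacity: {b300_gb} GB, full sweep {full_sweep_ms(b300_gb, BANDWIDTH_TB):.0f} ms")
```

With these assumed values the 12-Hi configuration reproduces the 288 GB quoted above, a 50% increase over the assumed 8-Hi baseline at the same 8 TB/s.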

NVIDIA has also adjusted its pricing, which will affect Blackwell profit margins to some extent. More importantly, the performance gains of the B300/GB300 show up mainly in reasoning-model inference.

Tailored for reasoning-model inference

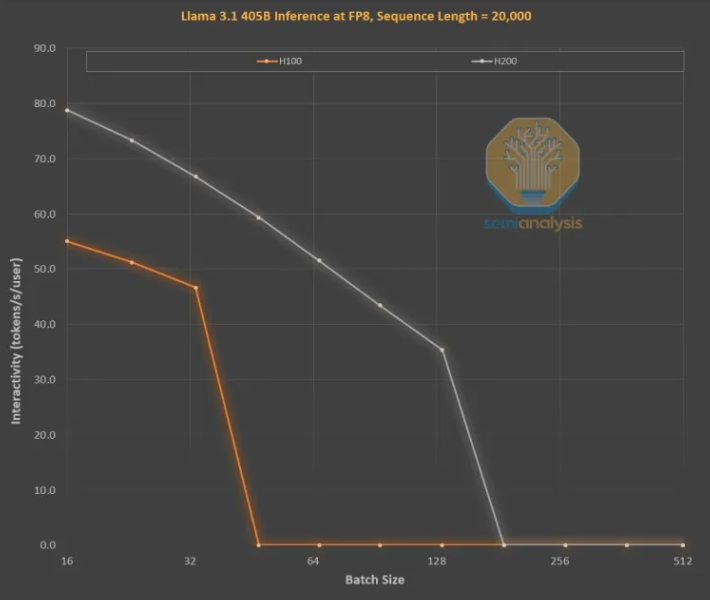

Memory improvements are critical for OpenAI o3-style reasoning LLMs, in both training and inference, because long sequences enlarge the KV cache, which limits the achievable batch size and increases latency. The upgrade from the H100 to the H200, which is mainly a memory upgrade, illustrates this with improvements in two respects:

Higher memory bandwidth (4.8 TB/s on the H200 versus 3.35 TB/s on the H100) yields roughly a 43% improvement in interactivity across all comparable batch sizes.

Because the H200 can run a larger batch size than the H100, it generates about 3 times as many tokens per second, which cuts the cost per token to roughly one third. This difference arises mainly because the KV cache limits the total batch size.
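Both effects can be approximated with back-of-the-envelope arithmetic. The sketch below is illustrative only. The bandwidth figures come from the text, and the 80 GB and 141 GB capacities are the published H100 and H200 specifications. The model shape (126 layers, 8 KV heads, head dimension 128), the FP8 weights, the FP16 KV cache, the 20,000-token sequence length, and the 8-GPU node are hypothetical assumptions chosen for the example. Activations and runtime overhead are ignored.

```python
GB = 1e9


def kv_bytes_per_token(layers: int, kv_heads: int, head_dim: int, bytes_per_elem: int) -> int:
    """KV-cache bytes per token: one key and one value vector per layer and KV head."""
    return 2 * layers * kv_heads * head_dim * bytes_per_elem


def max_batch(hbm_gb_per_gpu: int, gpus: int, weight_gb: int,
              seq_len: int, kv_per_token: int) -> int:
    """Largest number of full-length sequences whose KV cache fits beside the weights."""
    free_bytes = (hbm_gb_per_gpu * gpus - weight_gb) * GB
    return int(free_bytes // (seq_len * kv_per_token))


# Aspect 1: bandwidth ratio, using the figures quoted in the text.
bw_gain = 4.8 / 3.35 - 1.0

# Aspect 2: KV-cache-limited batch size (all model values are hypothetical).
LAYERS, KV_HEADS, HEAD_DIM = 126, 8, 128
KV_BYTES_PER_ELEM = 2   # assumption: FP16 KV cache
WEIGHT_GB = 405         # assumption: 405B parameters stored in FP8
SEQ_LEN = 20_000        # assumption: long reasoning-style sequences
GPUS = 8                # assumption: one 8-GPU node

per_token = kv_bytes_per_token(LAYERS, KV_HEADS, HEAD_DIM, KV_BYTES_PER_ELEM)
h100_batch = max_batch(80, GPUS, WEIGHT_GB, SEQ_LEN, per_token)
h200_batch = max_batch(141, GPUS, WEIGHT_GB, SEQ_LEN, per_token)

print(f"Bandwidth gain: {bw_gain:.0%}")
print(f"KV cache per token: {per_token / 1e6:.2f} MB")
print(f"Max batch, 8x H100: {h100_batch}")
print(f"Max batch, 8x H200: {h200_batch}")
print(f"Batch ratio: {h200_batch / h100_batch:.1f}x")
```

Under these assumptions the weights consume most of the H100 node's memory, so the extra capacity on the H200 goes almost entirely to KV cache. The achievable batch grows by about 3x even though capacity per GPU grows by less than 2x.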

The gain from larger memory capacity is therefore very large, and the performance and economic gap between the two GPUs is much wider than their spec sheets suggest:

The user experience with reasoning models can be poor because of the long delay between request and response. If inference time can be cut significantly, users will be more willing to use these models and to pay for them.

A 3x improvement in hardware performance from a mid-generation memory upgrade is staggering, far outpacing Moore's Law, Huang's Law, or any other hardware improvement we've seen.

All in all, the launch of the NVIDIA B300/GB300 is not just another step forward in GPU technology but also a strong push for the adoption of AI reasoning models. It should substantially improve user experience and reduce costs, moving the AI industry into a new stage of development.