Training large AI models is expensive. Their heavy resource requirements limit widespread adoption and raise concerns about energy efficiency and environmental impact. Traditional training methods are inefficient because they rely on dense weight matrices that demand large amounts of memory and compute. Existing methods that try to ease these problems still have practical limitations. An approach that reduces memory usage, computational cost, and training time at the same time, without sacrificing performance, is therefore crucial.

Training large-scale AI models such as Transformers and large language models has become central to the AI field, but it comes with high computing costs, memory consumption, and energy requirements. OpenAI's GPT-3, for example, has 175 billion parameters and takes weeks to train on GPUs. Such resource demands restrict the technology to large organizations with abundant computing resources and heighten concerns about energy efficiency and environmental impact. Addressing these challenges is critical to making AI development more accessible and sustainable.

Traditional training methods are inefficient, and innovative solutions are urgently needed

CoMERA framework: Efficient training through adaptive tensor optimization

CoMERA is built on an adaptive tensor representation that lets model layers adjust their tensor ranks dynamically according to resource constraints. Lowering a layer's tensor rank reduces the number of parameters needed to represent it, so the framework can compress the model while the network continues to train and run correctly. This dynamic optimization is achieved through a two-stage training process:

Early stages: focus on stable convergence.

Later stages: fine-tune the ranks to meet specific compression targets.
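To make the link between rank and compression concrete, here is a minimal parameter count for a single tensorized linear layer. It assumes a tensor-train matrix (TT-matrix) format, in which a weight of shape (m1·m2·m3) × (n1·n2·n3) is stored as small cores of shape r_{k-1} × m_k × n_k × r_k. The article does not say which tensor format CoMERA uses, and the function names and the 768 × 768 layer factorization are hypothetical.

```python
from math import prod


def tt_matrix_params(in_modes, out_modes, ranks):
    """Count parameters of a TT-matrix layer (assumed layout, for illustration).

    Core k is assumed to have shape (ranks[k], in_modes[k], out_modes[k], ranks[k + 1]),
    with boundary ranks ranks[0] == ranks[-1] == 1.
    """
    d = len(in_modes)
    if len(out_modes) != d or len(ranks) != d + 1:
        raise ValueError("expected d input modes, d output modes and d + 1 ranks")
    if ranks[0] != 1 or ranks[-1] != 1:
        raise ValueError("boundary ranks must be 1")
    return sum(ranks[k] * in_modes[k] * out_modes[k] * ranks[k + 1] for k in range(d))


def compression_ratio(in_modes, out_modes, ranks):
    """Dense parameter count divided by the TT-matrix parameter count."""
    dense = prod(in_modes) * prod(out_modes)
    return dense / tt_matrix_params(in_modes, out_modes, ranks)


# A hypothetical 768 x 768 layer, factorized as 768 = 8 * 8 * 12 on both sides.
modes = (8, 8, 12)
for ranks in [(1, 16, 16, 1), (1, 8, 8, 1)]:
    print(ranks, tt_matrix_params(modes, modes, ranks),
          round(compression_ratio(modes, modes, ranks), 1))
```

Halving the internal ranks from 16 to 8 shrinks this layer from 19,712 to 5,760 parameters, moving it from roughly 30x to 102.4x compression against the 589,824-parameter dense matrix. Rank is the knob a rank-adaptive method turns.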

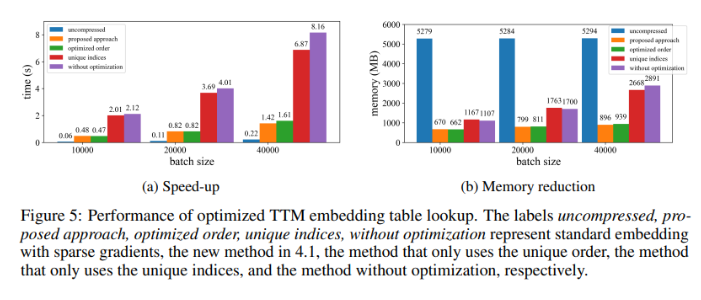

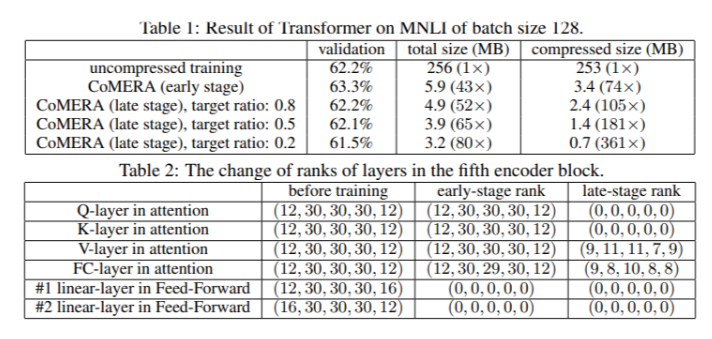

In a six-encoder Transformer model, CoMERA reached compression ratios of up to 43x in the early stage and up to 361x in the later optimization stage. Compared with GaLore, it also used 9x less memory and trained 2-3x faster per epoch.
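The gap between the early-stage and later-stage ratios comes from shrinking ranks once training has stabilized. The sketch below illustrates that two-stage idea with a simple stand-in. Each internal TT rank carries one hypothetical learnable scale per rank slice. All slices are kept during a warm-up stage, and afterwards slices whose scale magnitude is at or below a threshold are pruned. The magnitude-threshold rule, the names `two_stage_ranks` and `warmup_epochs`, and the TT-matrix layout are assumptions for illustration, not CoMERA's published rank-selection procedure.

```python
def tt_matrix_params(in_modes, out_modes, ranks):
    # Assumed TT-matrix layout: core k has shape (ranks[k], in_modes[k], out_modes[k], ranks[k + 1]).
    return sum(ranks[k] * in_modes[k] * out_modes[k] * ranks[k + 1]
               for k in range(len(in_modes)))


def prune_internal_ranks(scales, threshold):
    # scales[k] holds one hypothetical learnable scale per slice of ranks[k + 1].
    # Keep slices with |scale| > threshold, but never let a rank drop below 1.
    return [max(1, sum(1 for s in slice_scales if abs(s) > threshold))
            for slice_scales in scales]


def two_stage_ranks(scales, epoch, warmup_epochs, threshold):
    if epoch < warmup_epochs:
        # Early stage: keep every rank slice so training can converge stably.
        internal = [len(slice_scales) for slice_scales in scales]
    else:
        # Later stage: drop low-magnitude slices to move toward a compression target.
        internal = prune_internal_ranks(scales, threshold)
    return [1, *internal, 1]  # boundary ranks are always 1


modes = (8, 8, 12)  # hypothetical 768 x 768 layer
scales = [[0.9] * 8 + [0.01] * 8,    # internal rank 1: 16 slices, 8 of them large
          [0.5] * 4 + [0.001] * 12]  # internal rank 2: 16 slices, 4 of them large
for epoch in (0, 10):
    ranks = two_stage_ranks(scales, epoch, warmup_epochs=5, threshold=0.05)
    print(epoch, ranks, tt_matrix_params(modes, modes, ranks))
```

With these made-up scales the layer drops from 19,712 parameters (ranks [1, 16, 16, 1]) to 3,136 (ranks [1, 8, 4, 1]) after the warm-up stage. A real implementation would also have to train the scales, for example under a sparsity-inducing penalty, and slice the cores to match; both steps are omitted here.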

Test results across multiple tasks show strong performance

Applied to a Transformer model trained on the MNLI dataset, CoMERA reduced the model size from 256 MB to as little as 3.2 MB while maintaining accuracy. In large-scale recommendation systems such as DLRM, it compressed the model by 99x and cut peak memory usage by 7x. It also performed well when pre-training CodeBERT, a domain-specific large language model, reaching an overall compression ratio of 4.23x and a 2x speedup in some training stages. Together, these results show that the framework handles a variety of tasks and architectures, which broadens its applicability across domains.
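The MNLI size figures translate directly into a compression ratio and, under an extra assumption, into approximate parameter counts. The helper names below are hypothetical. The parameter estimate assumes decimal megabytes and 4-byte float32 weights, neither of which the article states.

```python
BYTES_PER_MB = 1_000_000  # assumption: decimal megabytes
BYTES_PER_PARAM = 4       # assumption: float32 weights


def size_compression(before_mb, after_mb):
    """Compression ratio implied by two model sizes."""
    return before_mb / after_mb


def approx_param_count(size_mb):
    """Rough parameter count if every stored value is a float32 weight."""
    return round(size_mb * BYTES_PER_MB / BYTES_PER_PARAM)


print(round(size_compression(256, 3.2), 2))  # 80.0
print(approx_param_count(256), approx_param_count(3.2))  # 64000000 800000
```

The reported sizes therefore amount to an 80x reduction, roughly 64 million stored values shrinking to about 800,000 if the float32 assumption holds.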

Summary of key benefits of the CoMERA framework

The main conclusions of this study are as follows:

CoMERA achieves compression ratios of up to 361x for specific layers and 99x for an entire model (DLRM), significantly reducing storage and memory requirements.

The framework cuts per-epoch training time for Transformers and recommendation systems by 2-3x, saving both compute and time.

By combining tensorized representations with CUDA Graphs, CoMERA reduces peak memory consumption by 7x, making it possible to train on smaller GPUs.

CoMERA supports multiple architectures, including Transformers, recommendation models, and large language models, while maintaining or improving accuracy.

By reducing the energy and resources required for training, CoMERA helps enable more sustainable AI practices and makes cutting-edge models available to a wider audience.
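The first point above pairs a layer-level ratio (361x) with a whole-model ratio (99x). A whole-model ratio is usually lower because any part of a network that stays uncompressed, for example a layer left in dense form, is stored at full size. The sketch below shows that effect with hypothetical parameter counts and a hypothetical helper name. Because the 361x and 99x figures come from different models, it only illustrates the arithmetic and does not reproduce either result.

```python
def overall_compression_ratio(compressed_layers, uncompressed_params):
    """Whole-model compression ratio.

    compressed_layers: list of (dense_param_count, per_layer_ratio) pairs.
    uncompressed_params: parameters stored at full size.
    """
    dense_total = sum(n for n, _ in compressed_layers) + uncompressed_params
    stored_total = sum(n / r for n, r in compressed_layers) + uncompressed_params
    return dense_total / stored_total


# Hypothetical model: 3.61M parameters compressed 361x, 10k parameters left dense.
print(overall_compression_ratio([(3_610_000, 361.0)], uncompressed_params=10_000))  # 181.0
```

Leaving only 10,000 of 3.62 million parameters uncompressed halves the effective ratio, from 361x to 181x, because those few dense parameters now occupy as much storage as all the compressed ones.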

In summary, CoMERA offers an efficient way to train large AI models: adaptive tensor optimization substantially lowers computational cost and memory requirements while preserving model accuracy. The work contributes to the continued development of AI and to making it more broadly accessible.