Researchers from Google DeepMind and several universities recently released a new system called "MegaSaM", which efficiently estimates camera parameters and depth maps from dynamic videos. Traditional methods have many limitations when dealing with dynamic scenes. MegaSaM addresses these limitations and offers a new approach to dynamic video analysis, with potential applications across video processing and computer vision.

MegaSaM works on ordinary, casually recorded dynamic videos, estimating camera parameters and depth maps quickly and accurately. This opens up more possibilities for the videos people record in daily life, especially for capturing and analyzing dynamic scenes.

Traditional Structure from Motion (SfM) and monocular Simultaneous Localization and Mapping (SLAM) techniques usually require videos of static scenes with substantial parallax. On dynamic scenes these methods often perform poorly, because without enough static background the algorithms are prone to errors. Some neural network-based methods have tried to address this problem in recent years, but they tend to be computationally expensive and unstable on dynamic videos, especially when the camera movement is uncontrolled or the field of view is unknown.
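To see why parallax matters, here is a minimal Python sketch. It assumes a simplified pinhole camera that translates sideways past a static point; it is a textbook approximation, not MegaSaM's method. The function names and the numbers (1000-pixel focal length, a point 5 m away) are hypothetical and chosen only for illustration.

```python
def parallax_px(focal_px: float, baseline_m: float, depth_m: float) -> float:
    """Image shift (pixels) of a static point when the camera moves sideways.

    Simplified pinhole model: shift = focal length * baseline / depth.
    """
    return focal_px * baseline_m / depth_m


def depth_error_m(focal_px: float, baseline_m: float, depth_m: float,
                  match_error_px: float = 1.0) -> float:
    """First-order depth error caused by a feature-matching error in pixels.

    Differentiating depth = focal * baseline / shift gives
    error ~= depth^2 / (focal * baseline) * match_error.
    """
    return depth_m ** 2 / (focal_px * baseline_m) * match_error_px


if __name__ == "__main__":
    # Hypothetical setup: 1000 px focal length, point 5 m from the camera.
    for baseline in (0.5, 0.05, 0.005):
        shift = parallax_px(1000.0, baseline, 5.0)
        error = depth_error_m(1000.0, baseline, 5.0)
        print(f"baseline {baseline} m: parallax {shift:.0f} px, "
              f"depth error per pixel of mismatch {error:.2f} m")
```

Under these assumptions, shrinking the camera translation from 0.5 m to 0.005 m cuts the parallax from about 100 pixels to about 1 pixel, while a one-pixel matching error grows from roughly 0.05 m to roughly 5 m of depth error. Casual footage with little camera translation falls into the second regime.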

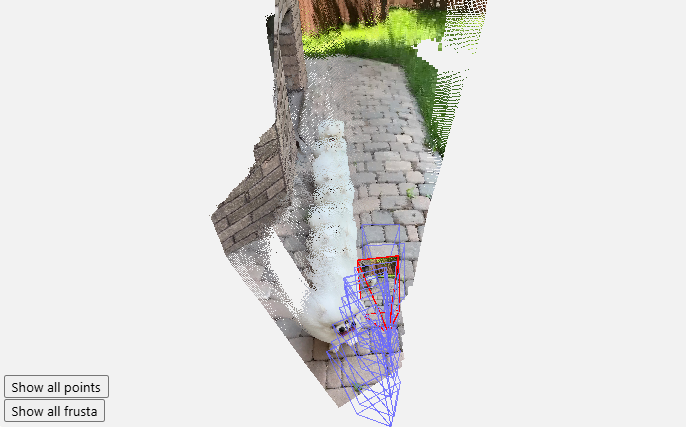

MegaSaM changes this situation. The research team carefully modified a deep visual SLAM framework so that it adapts to complex dynamic scenes, including videos where the camera path is unconstrained. In a series of experiments, the researchers found that MegaSaM significantly outperformed previous related methods in camera pose and depth estimation, and that its running time was competitive, comparable to that of some existing methods.

The system can handle almost any video, including casual footage with intense camera movement or scene dynamics. MegaSaM processes the source video at approximately 0.7 frames per second. The research team also presents more results in an online gallery to demonstrate its effectiveness on real-world footage.
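To put the 0.7 frames per second figure in perspective, the following Python sketch estimates how long a clip would take to process. It assumes that every captured frame is processed and that throughput stays constant; the function name and the example clip (10 seconds at 30 fps) are hypothetical.

```python
def processing_time_s(duration_s: float, capture_fps: float,
                      throughput_fps: float = 0.7) -> float:
    """Estimate wall-clock seconds needed to process a clip.

    throughput_fps defaults to the approximate 0.7 frames per second
    quoted in the article; every frame is assumed to be processed.
    """
    if duration_s < 0 or capture_fps <= 0 or throughput_fps <= 0:
        raise ValueError("duration must be >= 0 and frame rates must be > 0")
    num_frames = round(duration_s * capture_fps)
    return num_frames / throughput_fps


if __name__ == "__main__":
    seconds = processing_time_s(duration_s=10, capture_fps=30)
    print(f"Estimated processing time: {seconds / 60:.1f} minutes")
```

Under these assumptions, a 10-second clip recorded at 30 fps contains 300 frames and would take roughly 7 minutes to process, so the system is suited to offline processing rather than live use.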

This research not only brings new ideas to the field of computer vision but also opens up new possibilities for everyday video processing. We look forward to seeing MegaSaM applied in more scenarios in the future.

Project page: https://mega-sam.github.io/#demo

Highlights:

The MegaSaM system is able to quickly and accurately estimate camera parameters and depth maps from ordinary dynamic videos.

This technology overcomes the shortcomings of traditional methods in dynamic scenes and adapts to complex environments with unconstrained camera motion.

Experimental results show that MegaSaM outperforms previous methods in accuracy while remaining competitive in running time.

MegaSaM's efficient and accurate handling of dynamic video opens the door to more application scenarios. As the technology continues to develop and improve, MegaSaM may come to play an important role in more fields.