OpenAI’s latest model, o3, has achieved impressive results on the ARC-AGI benchmark, scoring 75.7% under standard compute settings and 87.5% in a high-compute configuration. These scores far exceed those of all previous models and have drawn wide attention from AI researchers. ARC-AGI is designed to evaluate how well AI systems adapt to novel tasks and demonstrate fluid intelligence, and it is considered one of the most challenging benchmarks in AI evaluation. o3's breakthrough opens up new directions for AI development, but it does not mean that AGI has been achieved.


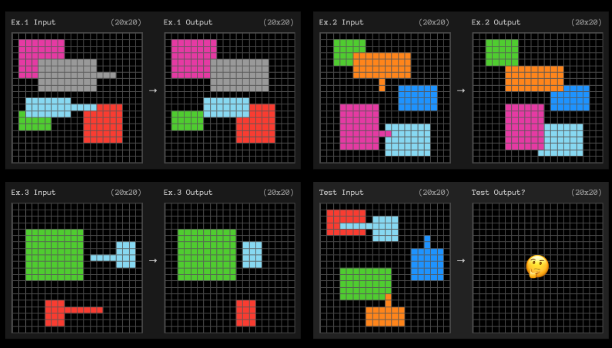

The ARC-AGI benchmark is based on the Abstraction and Reasoning Corpus (ARC), a test designed to evaluate an AI system's ability to adapt to novel tasks and demonstrate fluid intelligence. ARC consists of visual puzzles whose solutions require an understanding of basic concepts such as objects, boundaries, and spatial relationships. Humans solve these puzzles easily, but current AI systems struggle with them, which is why ARC is considered one of the most challenging benchmarks in AI evaluation.
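To make the puzzle format concrete, the sketch below assumes the JSON layout used by the public ARC dataset: each task has a few "train" demonstration pairs and one or more "test" inputs, and every grid is a list of rows of integers 0 to 9 (colors). The toy task, the `flip_horizontal` rule, and the helper `fits_all_demos` are hypothetical and written only for illustration. A solver must infer the rule from the demonstrations alone and reproduce the test output exactly.

```python
from typing import Callable, List

Grid = List[List[int]]  # rows of color codes 0-9

# Hypothetical toy task in the ARC JSON layout (test output omitted).
toy_task = {
    "train": [
        {"input": [[1, 0], [0, 0]], "output": [[0, 1], [0, 0]]},
        {"input": [[2, 3, 0], [0, 0, 4]], "output": [[0, 3, 2], [4, 0, 0]]},
    ],
    "test": [{"input": [[5, 0, 0], [0, 6, 0]]}],
}

def flip_horizontal(grid: Grid) -> Grid:
    """Candidate rule: mirror each row left to right."""
    return [list(reversed(row)) for row in grid]

def fits_all_demos(rule: Callable[[Grid], Grid], task: dict) -> bool:
    """Accept a rule only if it reproduces every demonstration output exactly."""
    return all(rule(pair["input"]) == pair["output"] for pair in task["train"])

if fits_all_demos(flip_horizontal, toy_task):
    prediction = flip_horizontal(toy_task["test"][0]["input"])
    print(prediction)  # prints [[0, 0, 5], [0, 6, 0]]
```

The exact-match check reflects why ARC is hard for pattern matchers: a rule that is almost right on the demonstrations earns no credit.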

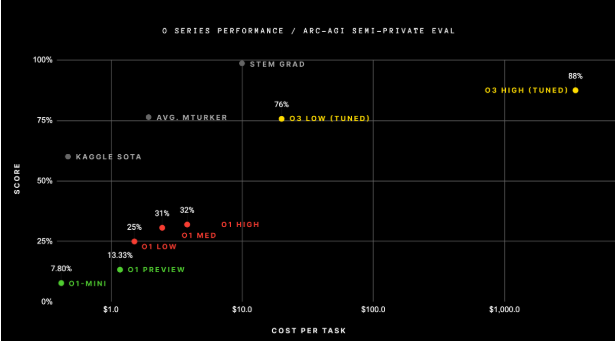

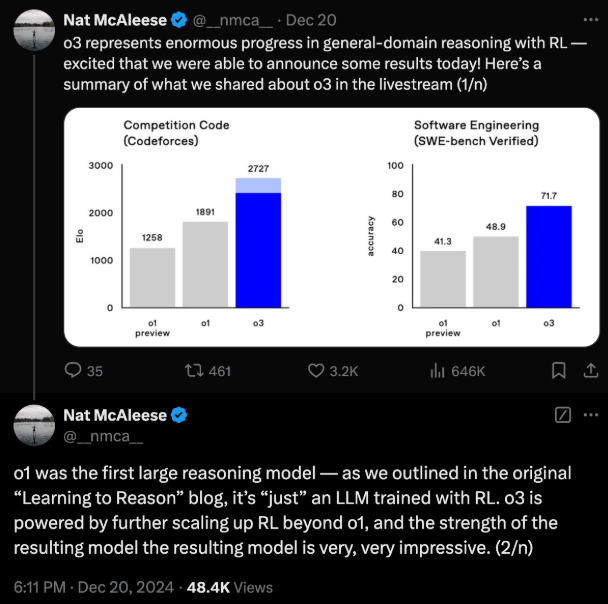

o3 performs significantly better than its predecessors: the best score achieved by o1-preview and o1 on ARC-AGI was 32%. Before o3, researcher Jeremy Berman reached 53% with a hybrid approach that combined Claude 3.5 Sonnet with a genetic algorithm. Against these results, the arrival of o3 is regarded as a leap in AI capabilities.

François Chollet, the creator of ARC, praised o3 as a qualitative shift in AI capabilities, saying that its ability to adapt to novel tasks has reached an unprecedented level.

Although o3 performs well, it is also expensive to run. In the low-compute configuration, solving each puzzle costs between $17 and $20, and the evaluation consumed 33 million tokens in total; the high-compute configuration uses 172 times as much compute and billions of tokens. However, as inference costs continue to fall, these expenses may become more acceptable.
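A rough sense of what the 172× figure implies per puzzle can be obtained with back-of-envelope arithmetic. The sketch below makes one loud assumption that is not a reported figure: dollar cost scales linearly with compute. Under that assumption, a $17 to $20 low-compute puzzle would cost roughly $2,900 to $3,400 in the high-compute configuration. The names `scaled_cost` and the constants are hypothetical.

```python
# Reported inputs: $17-$20 per puzzle (low compute), 172x compute (high compute).
LOW_COMPUTE_COST_PER_PUZZLE = (17.0, 20.0)  # USD per puzzle, as reported
HIGH_COMPUTE_MULTIPLIER = 172                # reported compute ratio

def scaled_cost(low_cost: float, multiplier: float = HIGH_COMPUTE_MULTIPLIER) -> float:
    """Estimate high-compute cost per puzzle.

    ASSUMPTION: dollar cost grows linearly with compute; real pricing may differ.
    """
    return low_cost * multiplier

est_min, est_max = (scaled_cost(c) for c in LOW_COMPUTE_COST_PER_PUZZLE)
print(f"Estimated high-compute cost per puzzle: ${est_min:,.0f} to ${est_max:,.0f}")
# prints: Estimated high-compute cost per puzzle: $2,924 to $3,440
```

Because the multiplier describes compute rather than price, treat these figures as an order-of-magnitude estimate only.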

OpenAI has not disclosed how o3 achieved this breakthrough. Some scientists speculate that o3 uses a form of program synthesis that combines chain-of-thought reasoning with a search mechanism, while others believe its gains may simply come from further scaling up reinforcement learning.
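o3's internals are undisclosed, so the sketch below does not describe o3. It only illustrates the generic idea of program synthesis via search, in its simplest classic form: enumerate compositions of primitives from a small domain-specific language (here a hypothetical set of three grid operations) in order of length, and return the first program consistent with every demonstration pair. The primitives, the toy demos, and the helpers `run` and `synthesize` are all illustrative assumptions.

```python
from itertools import product
from typing import Callable, Dict, List, Optional, Tuple

Grid = List[List[int]]

# Hypothetical DSL of grid primitives.
PRIMITIVES: Dict[str, Callable[[Grid], Grid]] = {
    "flip_h": lambda g: [row[::-1] for row in g],           # mirror left-right
    "flip_v": lambda g: [row[:] for row in g[::-1]],        # mirror top-bottom
    "transpose": lambda g: [list(col) for col in zip(*g)],  # swap rows/columns
}

def run(program: Tuple[str, ...], grid: Grid) -> Grid:
    """Apply each primitive in the program to the grid, left to right."""
    for name in program:
        grid = PRIMITIVES[name](grid)
    return grid

def synthesize(train: List[dict], max_depth: int = 3) -> Optional[Tuple[str, ...]]:
    """Search programs by increasing length; return the shortest one that fits all demos."""
    for depth in range(1, max_depth + 1):
        for program in product(PRIMITIVES, repeat=depth):
            if all(run(program, p["input"]) == p["output"] for p in train):
                return program
    return None  # no consistent program within the depth limit

# Toy demos whose hidden rule is a 180-degree rotation.
train = [
    {"input": [[1, 2], [3, 4]], "output": [[4, 3], [2, 1]]},
    {"input": [[5, 0, 0]], "output": [[0, 0, 5]]},
]
print(synthesize(train))  # prints ('flip_h', 'flip_v')
```

The search space grows exponentially with program length (3, 9, then 27 candidates here), which is one reason approaches that guide search with a learned model, rather than enumerating blindly, attract so much interest.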

Despite o3's significant progress on ARC-AGI, Chollet emphasized that ARC-AGI is not a test for AGI and that o3 has not yet reached AGI. It still fails on some simple tasks, revealing fundamental differences from human intelligence. In addition, o3 relies on external verification during reasoning, which falls far short of the autonomous learning ability that AGI would require.

Chollet's team is developing new, more challenging benchmarks to test o3 and expects them to push its score below 30%. He points out that true AGI will have arrived when it becomes nearly impossible to create tasks that are easy for humans but hard for AI.

Highlights:

- o3 scored 75.7% on the ARC-AGI benchmark, outperforming all previous models.
- Solving each puzzle costs o3 $17 to $20, reflecting a very large amount of computation.
- Although o3 performs well, experts emphasize that it has not yet reached AGI.

Overall, o3's strong performance on ARC-AGI demonstrates significant progress in AI's abstract reasoning capabilities, but it is only a small step on the road to true AGI. Future research must still address both the high computational cost and the core open problems of AGI.