The Qwen team has open-sourced QVQ, its latest multimodal reasoning model. Built on Qwen2-VL-72B, QVQ is designed to significantly improve visual understanding and reasoning. It scored 70.3 on the MMMU benchmark and outperformed its predecessor on several mathematical benchmarks. This article covers the model's characteristics, strengths, limitations, and usage, and provides links for readers who want to explore it further.

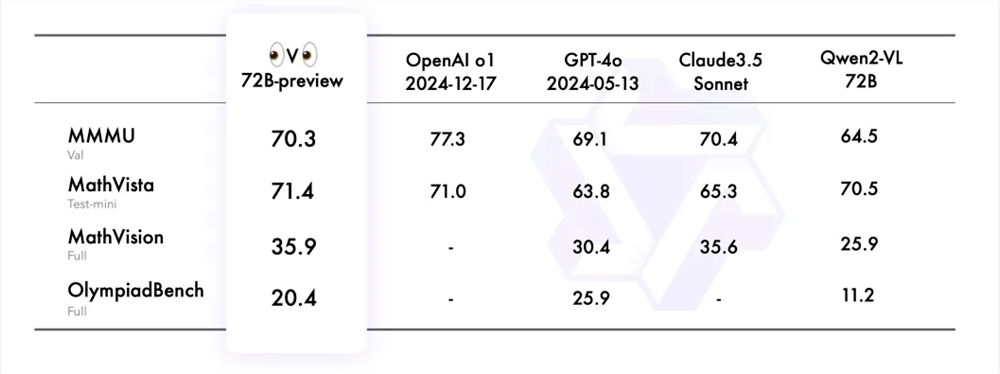

The Qwen team recently open-sourced QVQ, a newly developed multimodal reasoning model, marking an important step forward in visual understanding and complex problem solving for AI. Built on Qwen2-VL-72B, the model aims to improve reasoning by combining language and visual information. QVQ scored 70.3 on the MMMU benchmark and shows significant gains over Qwen2-VL-72B-Instruct on several mathematics-related benchmarks.

QVQ is particularly strong at visual reasoning tasks, especially those that require complex analytical thinking. Despite the excellent performance of QVQ-72B-Preview, the team also pointed out several limitations: the model may mix languages or switch between them unexpectedly, it can get stuck in circular reasoning patterns, it raises safety and ethical considerations, and its overall performance has limits. On the last point, the team emphasized that although visual reasoning has improved, QVQ does not fully replace the capabilities of Qwen2-VL-72B. During multi-step visual reasoning, the model may also gradually lose focus on the image content, which leads to hallucinations.

The Qwen team evaluated QVQ-72B-Preview on four datasets: MMMU, MathVista, MathVision, and OlympiadBench. These datasets are designed to test a model's comprehensive vision-related understanding and reasoning capabilities. QVQ-72B-Preview performed well on all four benchmarks, narrowing the gap with the leading model.

To further demonstrate how QVQ handles visual reasoning tasks, the Qwen team provided several examples and shared a link to its technical blog. The team also provided code examples for model inference. In addition, ModelScope's API-Inference service supports QVQ-72B-Preview, so users can call the model directly through an API.
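As a rough illustration of what such an API call might look like, the sketch below builds a single image-plus-question request and sends it over HTTP. It is not the team's official example. It assumes the service accepts OpenAI-style chat-completion requests; the endpoint URL in `API_URL`, the helper names (`image_to_data_url`, `build_payload`, `ask_qvq`), and the `max_tokens` default are all illustrative assumptions that should be checked against the platform's documentation. Only the model ID is taken from the model page linked below.

```python
import base64
import json
import urllib.request

# Assumption: an OpenAI-compatible chat-completions endpoint.
# This URL is illustrative; confirm it in the platform documentation.
API_URL = "https://api-inference.modelscope.cn/v1/chat/completions"
MODEL_ID = "Qwen/QVQ-72B-Preview"  # model ID as shown on the model page


def image_to_data_url(image_bytes: bytes, mime: str = "image/png") -> str:
    """Encode raw image bytes as a data URL so a local image can be sent inline."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime};base64,{encoded}"


def build_payload(question: str, image_url: str, max_tokens: int = 1024) -> dict:
    """Build one user turn that pairs an image with a text question."""
    return {
        "model": MODEL_ID,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "image_url", "image_url": {"url": image_url}},
                    {"type": "text", "text": question},
                ],
            }
        ],
        "max_tokens": max_tokens,
    }


def ask_qvq(question: str, image_url: str, api_key: str) -> str:
    """Send the request and return the model's text reply (hypothetical helper)."""
    body = json.dumps(build_payload(question, image_url)).encode("utf-8")
    request = urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        reply = json.load(response)
    # Assumes the OpenAI-style response layout.
    return reply["choices"][0]["message"]["content"]
```

Calling `ask_qvq("What is the value of x in this figure?", image_url, api_key)` with a valid key would return the model's answer as text. Because the model can lose focus on the image during long reasoning chains, it may help to keep each question tied to a single image.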

Model link:

https://modelscope.cn/models/Qwen/QVQ-72B-Preview

Demo link:

https://modelscope.cn/studios/Qwen/QVQ-72B-preview

Chinese blog:

https://qwenlm.github.io/zh/blog/qvq-72b-preview

The open-source release of QVQ provides a valuable resource for multimodal AI research and points to further progress in visual reasoning. Although the model has limitations, its strong results on multiple benchmarks are impressive. We look forward to further optimization and improvement of QVQ in the future.