Joint research by Harvard University, Stanford University, and other leading institutions shows that OpenAI's o1-preview model performs strongly on medical reasoning tasks, in some of them surpassing human physicians. The study evaluated o1-preview on differential diagnosis generation, presentation of diagnostic reasoning, triage differential diagnosis, probabilistic reasoning, and management reasoning, and compared it with human physicians and earlier large language models. The results mark a notable advance for artificial intelligence in medicine and suggest where medical AI may develop next.

Artificial intelligence in medicine has taken another major step forward. A study by Harvard University, Stanford University, and other institutions found that OpenAI's o1-preview model performed strongly on several medical reasoning tasks, in some cases outperforming human physicians. Beyond multiple-choice medical benchmarks, the study focused on the model's diagnostic and management abilities in simulated real-world clinical scenarios.

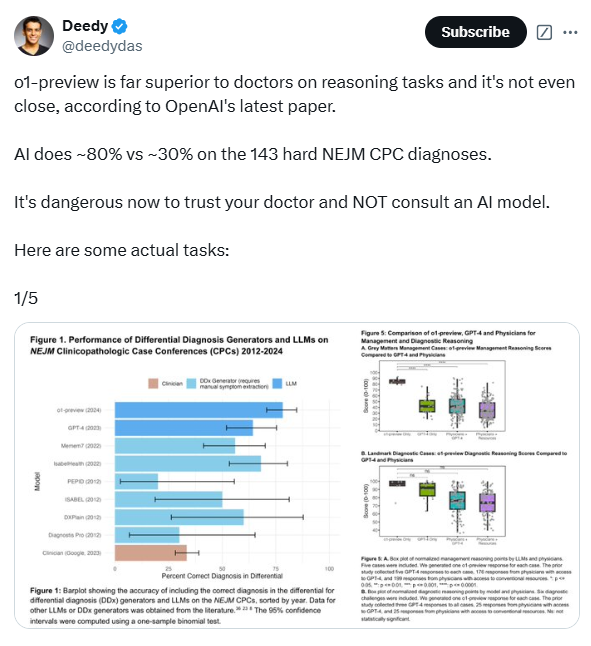

The researchers evaluated o1-preview in five experiments: differential diagnosis generation, presentation of diagnostic reasoning, triage differential diagnosis, probabilistic reasoning, and management reasoning. Medical experts graded the outputs using validated psychometric methods, and the experiments were designed to compare o1-preview with earlier human control groups and earlier large language model benchmarks. o1-preview showed significant improvements in generating differential diagnoses and in the quality of its diagnostic and management reasoning.

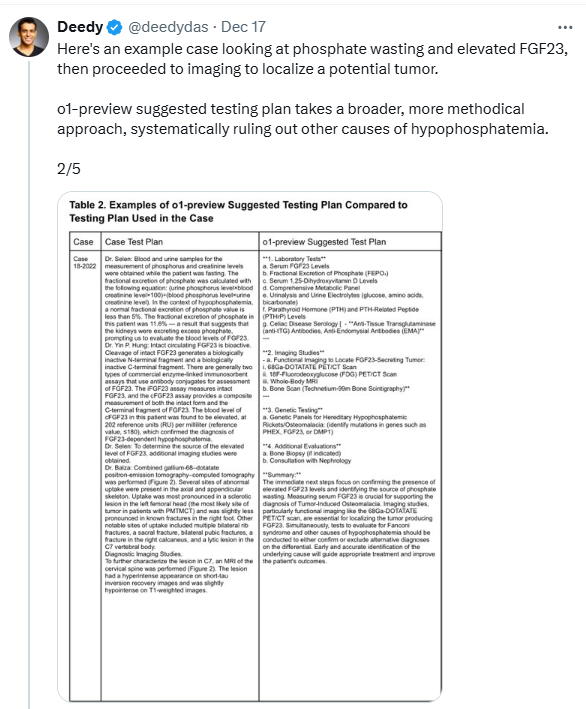

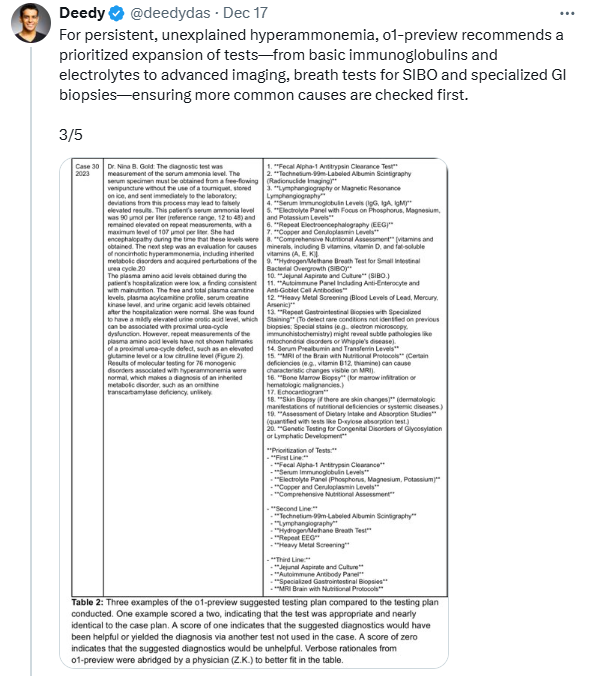

To assess o1-preview's ability to generate differential diagnoses, the researchers used clinicopathological conference (CPC) cases published in the New England Journal of Medicine (NEJM). The model's differential diagnosis included the correct diagnosis in 78.3% of cases, and its first-ranked diagnosis was correct in 52% of cases. On the same set of cases used to evaluate the earlier GPT-4 model, o1-preview gave an exact or very close diagnosis in 88.6% of cases, compared with 72.9% for GPT-4. o1-preview also did well at choosing the next diagnostic test: it selected the correct test in 87.5% of cases, and in another 11% of cases it chose a test regimen that was judged helpful.
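The two headline figures measure different things: whether the correct diagnosis appears anywhere in the model's ranked differential, and whether it is ranked first. A minimal sketch of how such rates could be tallied, assuming exact string matching against a reference diagnosis (the study relied on expert graders, not string matching); the `CaseResult` type, the function names, and the case data are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CaseResult:
    """One case: a ranked differential and the reference diagnosis (hypothetical data)."""
    differential: list[str]  # ranked, most likely diagnosis first
    final_diagnosis: str


def inclusion_rate(results: list[CaseResult]) -> float:
    """Fraction of cases whose differential contains the reference diagnosis at any rank."""
    hits = sum(r.final_diagnosis in r.differential for r in results)
    return hits / len(results)


def top1_accuracy(results: list[CaseResult]) -> float:
    """Fraction of cases whose first-ranked diagnosis is the reference diagnosis."""
    hits = sum(
        bool(r.differential) and r.differential[0] == r.final_diagnosis
        for r in results
    )
    return hits / len(results)


cases = [
    CaseResult(["sarcoidosis", "lymphoma", "tuberculosis"], "sarcoidosis"),  # included, ranked first
    CaseResult(["endocarditis", "lupus"], "lupus"),                          # included, not first
    CaseResult(["amyloidosis", "myeloma"], "Whipple disease"),               # not included
    CaseResult(["Whipple disease"], "Whipple disease"),                      # included, ranked first
]

print(f"inclusion: {inclusion_rate(cases):.1%}")  # → inclusion: 75.0%
print(f"top-1:     {top1_accuracy(cases):.1%}")   # → top-1:     50.0%
```

Because top-1 accuracy only counts first-ranked hits, it can never exceed the inclusion rate, which is why the 52% figure sits below 78.3%.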

To further evaluate its clinical reasoning, the researchers used 20 clinical cases from the NEJM Healer curriculum. o1-preview performed significantly better than GPT-4, attending physicians, and residents on these cases, achieving a perfect R-IDEA score in 78/80 cases. R-IDEA is a 10-point scale for assessing the quality of documented clinical reasoning. The researchers also evaluated o1-preview's management and diagnostic reasoning with the "Grey Matters" management case and the "Landmark" diagnostic case. In the "Grey Matters" case, o1-preview scored significantly higher than GPT-4, physicians using GPT-4, and physicians using conventional resources. In the "Landmark" case, o1-preview performed on par with GPT-4 but better than physicians using either GPT-4 or conventional resources.

However, the study also found that o1-preview's probabilistic reasoning showed no significant improvement over earlier models, and in some cases the model estimated disease probabilities less accurately than humans. The researchers also noted that o1-preview tends to be verbose, which may have contributed to its scores in some experiments. Finally, the study measured model performance alone and did not examine human-computer interaction, so further research is needed on how o1-preview can support clinicians in practice in order to build more effective clinical decision support tools.
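Probabilistic reasoning of this kind typically means estimating a pretest probability of disease and revising it after a test result. A minimal sketch of that update, assuming the standard odds form of Bayes' theorem with likelihood ratios; the function names and the numbers are illustrative assumptions, not the study's cases or scoring method.

```python
def likelihood_ratios(sensitivity: float, specificity: float) -> tuple[float, float]:
    """Return (LR+, LR-) for a test with the given sensitivity and specificity."""
    lr_pos = sensitivity / (1.0 - specificity)
    lr_neg = (1.0 - sensitivity) / specificity
    return lr_pos, lr_neg


def posttest_probability(pretest: float, likelihood_ratio: float) -> float:
    """Update a pretest probability with a likelihood ratio (odds form of Bayes' theorem)."""
    if not 0.0 < pretest < 1.0:
        raise ValueError("pretest probability must be strictly between 0 and 1")
    pretest_odds = pretest / (1.0 - pretest)          # probability -> odds
    posttest_odds = pretest_odds * likelihood_ratio   # Bayes update on the odds scale
    return posttest_odds / (1.0 + posttest_odds)      # odds -> probability


# Hypothetical example: 20% pretest probability, test with 90% sensitivity and 80% specificity.
lr_pos, lr_neg = likelihood_ratios(0.90, 0.80)
print(f"after positive result: {posttest_probability(0.20, lr_pos):.1%}")  # → 52.9%
print(f"after negative result: {posttest_probability(0.20, lr_neg):.1%}")  # → 3.0%
```

Working on the odds scale keeps the update a single multiplication, which is why likelihood ratios are a common way to frame this kind of bedside estimate.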

Still, the study shows that o1-preview performs well on tasks that require complex critical thinking, such as diagnosis and management. The researchers stress that diagnostic reasoning benchmarks in medicine are rapidly becoming saturated, so more challenging and realistic evaluation methods are needed. They call for trials of these technologies in real clinical settings and for preparing clinicians and AI systems to work together. They add that a robust oversight framework is needed to monitor the broad deployment of AI clinical decision support systems.

Paper: https://www.arxiv.org/pdf/2412.10849

Overall, the study provides strong evidence for applying artificial intelligence in medicine and points to directions for future research. o1-preview's performance is encouraging, but its limitations call for careful scrutiny to ensure safety and reliability in clinical use. Human-AI collaboration is likely to become an important trend in medicine.