High-Flyer Quant recently released DeepSeek-V3, a new-generation large language model. With 671 billion parameters and a Mixture-of-Experts (MoE) architecture, it performs on par with top closed-source models while remaining cheap and efficient, which has drawn widespread attention across the industry. DeepSeek-V3 scored well on many benchmarks, reportedly surpassing all existing models on mathematical ability tests, and its API is priced well below models such as GPT-4, giving developers and enterprises a cost-effective AI solution. This article analyzes the performance, cost, and commercialization strategy of DeepSeek-V3 and discusses its impact on the AI industry.

High-Flyer Quant released DeepSeek-V3 on the evening of December 26, marking a notable technical step forward. Built on a Mixture-of-Experts (MoE) architecture, the model is comparable in performance to top closed-source models, and its low cost and high efficiency have drawn industry attention.

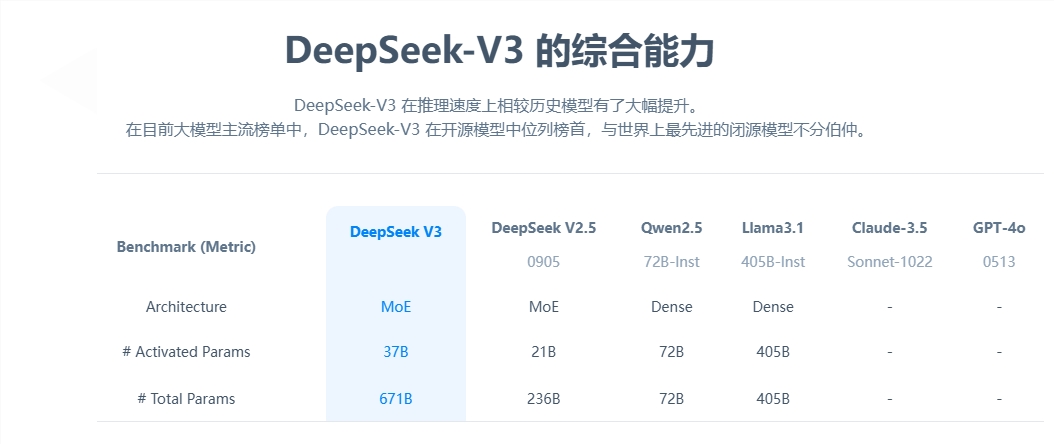

In terms of core specifications, DeepSeek-V3 has 671 billion total parameters, of which 37 billion are activated per token, and was pre-trained on 14.8 trillion tokens. Generation speed is three times that of the previous generation, reaching 60 tokens per second, which significantly improves efficiency in practical applications.
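To put these figures in proportion, the short Python sketch below works out what share of the parameters is active per token and what the quoted speedup implies for the previous generation. It uses only the numbers stated above; the variable and function names are illustrative, and the previous-generation speed is derived from the stated threefold increase rather than quoted directly.

```python
# Figures taken from the article; names are illustrative.
TOTAL_PARAMS = 671e9          # total parameters
ACTIVATED_PARAMS = 37e9       # parameters activated per token
NEW_TOKENS_PER_SECOND = 60    # generation speed of DeepSeek-V3
SPEEDUP = 3                   # stated improvement over the previous generation

# Share of the model that is active for any single token.
active_fraction = ACTIVATED_PARAMS / TOTAL_PARAMS

# Previous-generation speed implied by the stated speedup (derived, not quoted).
previous_tokens_per_second = NEW_TOKENS_PER_SECOND / SPEEDUP


def seconds_to_generate(num_tokens: int, tokens_per_second: float) -> float:
    """Time needed to generate num_tokens at a constant generation speed."""
    return num_tokens / tokens_per_second


print(f"Active share of parameters: {active_fraction:.1%}")      # 5.5%
print(f"Implied previous speed: {previous_tokens_per_second} tokens/s")  # 20.0
print(f"1,200 tokens now: {seconds_to_generate(1200, NEW_TOKENS_PER_SECOND)} s")        # 20.0
print(f"1,200 tokens before: {seconds_to_generate(1200, previous_tokens_per_second)} s")  # 60.0
```

Only about one parameter in eighteen takes part in each token's computation, which is the usual explanation for how an MoE model of this size can stay fast at inference time.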

In performance evaluations, DeepSeek-V3 delivers strong results. It surpasses well-known open-source models such as Qwen2.5-72B and Llama-3.1-405B and is on par with GPT-4 and Claude-3.5-Sonnet on multiple benchmarks. On mathematical ability tests in particular, it reportedly outperformed all existing open-source and closed-source models.

The most striking feature of DeepSeek-V3 is its low cost. According to the published paper, at an assumed price of US$2 per GPU hour, the total training cost of the model is only US$5.576 million. The result is attributed to the co-optimization of algorithms, frameworks, and hardware. OpenAI co-founder Andrej Karpathy praised the work, pointing out that DeepSeek-V3 surpasses Llama 3 in performance with only 2.8 million GPU hours of training, about 11 times less compute.
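The cost figures above can be cross-checked with simple arithmetic. The sketch below, using illustrative names, recovers the GPU-hour count implied by the stated price and total cost, and the baseline compute implied by the "about 11 times" comparison. The baseline is derived from the article's own ratio and is not an independently sourced figure.

```python
# Figures taken from the article; names are illustrative.
PRICE_PER_GPU_HOUR_USD = 2.0
TOTAL_TRAINING_COST_USD = 5_576_000
ROUNDED_GPU_HOURS = 2_800_000   # the "2.8 million GPU hours" quoted from Karpathy
EFFICIENCY_RATIO = 11           # the "about 11 times" comparison

# GPU hours implied by the total cost and the hourly price.
implied_gpu_hours = TOTAL_TRAINING_COST_USD / PRICE_PER_GPU_HOUR_USD

# Baseline compute implied by the stated ratio (derived, not independently sourced).
implied_baseline_gpu_hours = ROUNDED_GPU_HOURS * EFFICIENCY_RATIO

print(f"Implied GPU hours: {implied_gpu_hours:,.0f}")                    # 2,788,000
print(f"Implied baseline GPU hours: {implied_baseline_gpu_hours:,}")     # 30,800,000
```

The implied 2.788 million GPU hours rounds to the 2.8 million figure in the quote, so the two numbers in the paragraph are consistent with each other.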

On the commercial side, API pricing for DeepSeek-V3 is higher than for the previous generation but remains highly cost-effective. The new version costs 0.5 to 2 yuan per million input tokens (the lower price applies on a cache hit, the higher on a cache miss) and 8 yuan per million output tokens, so one million input tokens plus one million output tokens cost approximately 10 yuan in total. By comparison, the equivalent GPT-4 service costs about 140 yuan, a significant gap.
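As a rough illustration of how these prices translate into a bill, the sketch below computes the cost of a workload at both ends of the input-price range. The function name `request_cost_cny` is hypothetical, and the GPT-4 figure is simply the 140 yuan quoted above for the same one-million-in, one-million-out workload.

```python
# Prices taken from the article, in yuan per million tokens; names are illustrative.
INPUT_PRICE_LOW_CNY = 0.5     # low end of the input price range
INPUT_PRICE_HIGH_CNY = 2.0    # high end of the input price range
OUTPUT_PRICE_CNY = 8.0
GPT4_EQUIVALENT_CNY = 140.0   # article's figure for the same workload on GPT-4


def request_cost_cny(input_tokens: int, output_tokens: int,
                     input_price_per_million: float) -> float:
    """Cost in yuan for a workload, given the per-million input price that applies."""
    total = input_tokens * input_price_per_million + output_tokens * OUTPUT_PRICE_CNY
    return total / 1_000_000


# One million input tokens plus one million output tokens.
low = request_cost_cny(1_000_000, 1_000_000, INPUT_PRICE_LOW_CNY)
high = request_cost_cny(1_000_000, 1_000_000, INPUT_PRICE_HIGH_CNY)

print(f"DeepSeek-V3 cost: {low} to {high} yuan")                           # 8.5 to 10.0
print(f"GPT-4 is about {GPT4_EQUIVALENT_CNY / high:.0f}x more expensive")  # 14x
```

At the high end of the input range the total is the 10 yuan cited above, which puts the quoted GPT-4 price at roughly 14 times higher for the same workload.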

As a fully open-source large model, DeepSeek-V3 not only demonstrates the progress of China's AI technology but also gives developers and enterprises a high-performance, low-cost AI solution.

The emergence of DeepSeek-V3 marks a major advance for China's AI technology in the field of large language models. Its combination of low cost and high performance makes it highly competitive in commercial applications, and its future development is worth watching. Open-sourcing the model also contributes a valuable resource to the global AI community and promotes the sharing and development of AI technology.