VideoLLaMA2 is a multimodal large language model for video understanding, with a focus on spatiotemporal modeling and audio understanding. It can quickly recognize video content and generate captions: in one test, a 31-second video took only 19 seconds to process. The project aims to advance video large language model technology and give users a more convenient, in-depth way to understand video content. This article introduces VideoLLaMA2's features, application scenarios, and trial links.

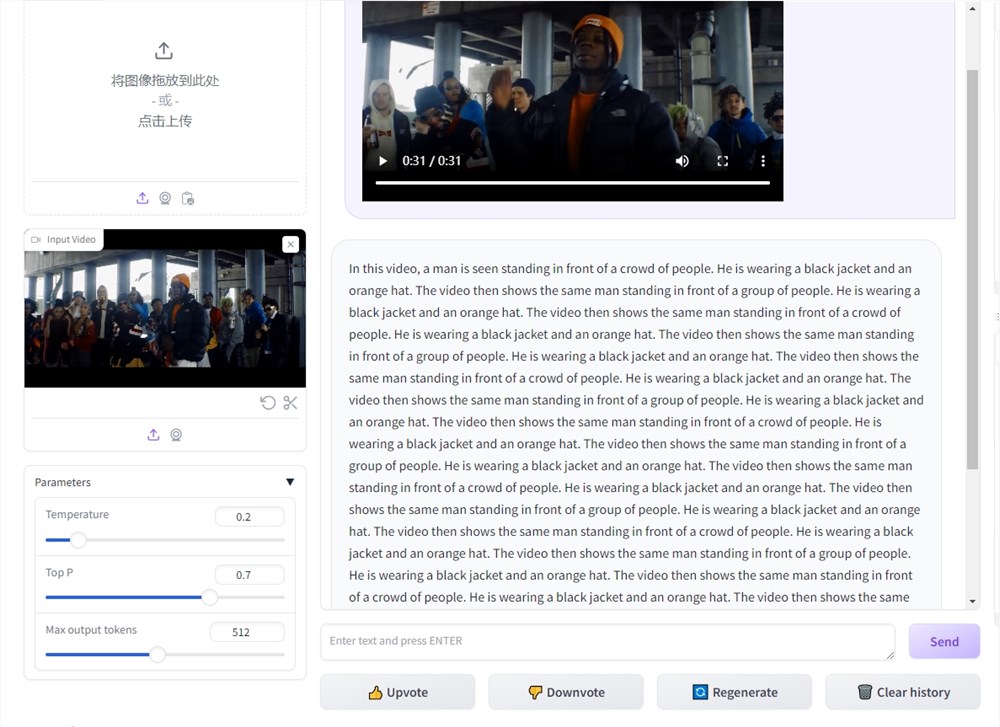

As artificial intelligence technology develops, video understanding is becoming increasingly important. The VideoLLaMA2 project was created against this background to advance the spatiotemporal modeling and audio understanding capabilities of video large language models. In testing, VideoLLaMA2 recognized video content quickly: it took only 19 seconds to process a 31-second video and generate a caption. The caption below is VideoLLaMA2's description of the video, generated in response to an instruction.

Here's what the video caption says: This video captures a vibrant and whimsical scene of a miniature pirate ship sailing amidst turbulent waves of coffee foam. These intricately designed vessels, with their sails raised and flags waving, appear to be on an adventurous journey across a sea of foam. The ship has detailed rigging and masts, adding to the authenticity of the scene. The entire spectacle is a fun and imaginative depiction of maritime adventure, all within the confines of a cup of coffee.
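The timing reported above (19 seconds to caption a 31-second clip) can be expressed as a real-time factor, that is, processing time divided by video duration. The snippet below is only a small arithmetic sketch using the two figures from this article; the function name `real_time_factor` is a hypothetical helper and not part of VideoLLaMA2.

```python
def real_time_factor(processing_seconds: float, video_seconds: float) -> float:
    """Return processing time divided by video duration.

    A value below 1.0 means the clip was processed faster than its playback time.
    """
    if video_seconds <= 0:
        raise ValueError("video_seconds must be positive")
    return processing_seconds / video_seconds


# Figures reported in this article: a 31-second video processed in 19 seconds.
rtf = real_time_factor(19, 31)
speedup = 1 / rtf  # how many seconds of video are handled per second of processing

print(f"real-time factor: {rtf:.2f}")      # prints "real-time factor: 0.61"
print(f"speed vs. playback: {speedup:.2f}x")  # prints "speed vs. playback: 1.63x"
```

This is a single data point from one test, so it should not be read as a general benchmark; processing time will likely vary with hardware, video length, and the instruction given.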

VideoLLaMA2 is now officially available to try online. The links are as follows:

VideoLLaMA2 project page: https://top.aibase.com/tool/videollama-2

Trial URL: https://huggingface.co/spaces/lixin4ever/VideoLLaMA2

VideoLLaMA2 features:

1. Spatiotemporal modeling: VideoLLaMA2 performs spatiotemporal modeling to accurately identify actions and event sequences in videos, which gives users a deeper understanding of what happens in a video.

Spatiotemporal modeling means that the model captures both the temporal and the spatial information in a video and uses it to infer the order of events and actions. This makes its understanding of video content more precise and detailed.

2. Audio understanding: VideoLLaMA2 also has strong audio understanding capabilities and can identify and analyze the sound in videos. This lets users understand video content more comprehensively, beyond visual information alone.

Audio understanding means that the model can recognize and analyze the sounds in a video, including spoken dialogue and music. With it, users can better follow a video's background music and dialogue, and so understand the video as a whole.

VideoLLaMA2 application scenarios:

Based on the capabilities above, VideoLLaMA2 can be used for tasks such as real-time highlight generation and real-time understanding and summarization of live content. Its main application scenarios are as follows:

Video understanding research: In the academic field, VideoLLaMA2 can be used for video understanding research, helping researchers analyze video content and explore the information behind video stories.

Media content analysis: The media industry can use VideoLLaMA2 for video content analysis to better understand user needs, optimize content recommendations, etc.

Education and training: In education, VideoLLaMA2 can be used to produce teaching videos, help learners understand teaching content, and improve learning outcomes.

All in all, VideoLLaMA2 shows great potential in video content understanding thanks to its spatiotemporal modeling and audio understanding capabilities. It has broad application prospects, and its further development is worth watching.