The EmoTalk3D project synthesizes high-fidelity, emotionally expressive 3D talking avatars. It targets two weaknesses of existing methods: poor multi-view consistency and limited emotional expression. The framework predicts 3D geometry sequences from speech, synthesizes the avatar's appearance using a 4D Gaussian representation, and renders free-viewpoint talking-avatar animation in which even subtle expressions and wrinkles are realistically reproduced.

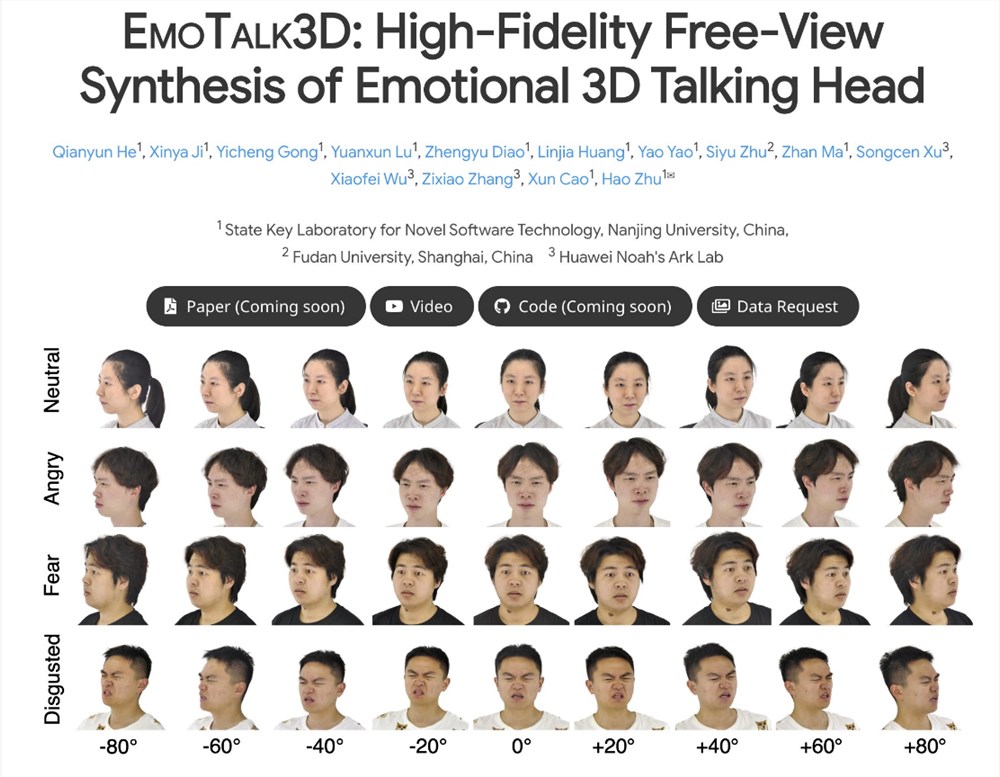

To address these shortcomings in multi-view consistency and emotional expression, the EmoTalk3D research team proposed a new synthesis method. Besides improving lip synchronization and rendering quality, the method makes the emotional expression of the generated talking avatars controllable.

The research team designed a "speech to geometry to appearance" mapping framework. It first predicts faithful 3D geometry sequences from audio features, then synthesizes the appearance of the 3D talking head, represented as 4D Gaussians, from that geometry. The appearance is decomposed into canonical and dynamic Gaussian components; both are learned from multi-view video and fused to render free-viewpoint talking-avatar animation.
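The article does not describe the networks themselves, so the following is only a toy Python sketch of the data flow in a "speech to geometry to appearance" pipeline, under loudly stated assumptions: a fixed linear map stands in for the learned audio-to-geometry predictor, each Gaussian is anchored to a single mesh vertex, and the "dynamic" component is simply that vertex's displacement plus a darkening colour residual as a crude wrinkle proxy. The names `Gaussian`, `audio_to_geometry`, `geometry_to_appearance`, `anchors` and `wrinkle_gain` are hypothetical and are not taken from the EmoTalk3D code release.

```python
import math
from dataclasses import dataclass, replace
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Gaussian:
    # Simplified 3D Gaussian primitive: centre, RGB colour in [0, 1] and opacity.
    # A real Gaussian-splatting renderer would also store scale and rotation.
    position: Vec3
    color: Vec3
    opacity: float


def audio_to_geometry(audio_frames: Sequence[Sequence[float]],
                      neutral: Sequence[Vec3],
                      weights: Sequence[Sequence[Vec3]]) -> List[List[Vec3]]:
    """Stage 1 (hypothetical stand-in): predict one mesh per audio frame.

    weights[v][k] is the 3D displacement of vertex v per unit of audio
    feature k. A learned network would replace this linear map.
    """
    sequence = []
    for features in audio_frames:
        frame = []
        for base, vertex_weights in zip(neutral, weights):
            offset = [0.0, 0.0, 0.0]
            for f, w in zip(features, vertex_weights):
                for axis in range(3):
                    offset[axis] += f * w[axis]
            frame.append(tuple(b + o for b, o in zip(base, offset)))
        sequence.append(frame)
    return sequence


def geometry_to_appearance(frame: Sequence[Vec3],
                           neutral: Sequence[Vec3],
                           canonical: Sequence[Gaussian],
                           anchors: Sequence[int],
                           wrinkle_gain: float = 0.2) -> List[Gaussian]:
    """Stage 2 (hypothetical stand-in): fuse canonical and dynamic components.

    The canonical Gaussians are fixed across frames. The dynamic part is the
    anchor vertex's displacement from the neutral mesh, plus a colour residual
    that darkens the Gaussian in proportion to that displacement.
    """
    fused = []
    for g, v in zip(canonical, anchors):
        delta = tuple(p - n for p, n in zip(frame[v], neutral[v]))
        shade = -wrinkle_gain * math.sqrt(sum(d * d for d in delta))
        position = tuple(p + d for p, d in zip(g.position, delta))
        # Clamp colours to [0, 1] after adding the dynamic residual.
        color = tuple(min(1.0, max(0.0, c + shade)) for c in g.color)
        fused.append(replace(g, position=position, color=color))
    return fused


# Usage: one vertex, one audio feature that moves the vertex along +y.
neutral = [(0.0, 0.0, 0.0)]
weights = [[(0.0, 1.0, 0.0)]]
canonical = [Gaussian((0.0, 0.0, 0.1), (0.8, 0.6, 0.5), 0.9)]
geometry = audio_to_geometry([[0.0], [0.5]], neutral, weights)
# One fused Gaussian set per frame: the time axis is what makes the result "4D".
frames = [geometry_to_appearance(f, neutral, canonical, anchors=[0]) for f in geometry]
# frames[1][0].position is (0.0, 0.5, 0.1); its colour is about (0.7, 0.5, 0.4)
```

Keeping the canonical set fixed and recomputing only the per-frame residual mirrors the canonical/dynamic split described above; in the actual system both parts are learned from multi-view video rather than hand-coded.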

The method also tackles a weakness of previous approaches: capturing dynamic facial details such as wrinkles and subtle expressions. According to the team's experiments, it generates high-fidelity, emotion-controllable 3D talking avatars with better rendering quality and more stable lip motion than prior methods.

The project's code and datasets have been released at the designated HTTPS URL for researchers and developers to use.

The technique is expected to find applications in virtual reality, augmented reality, and film and television production, where high-fidelity, emotionally expressive 3D avatars open new possibilities for producing 3D digital characters and for building more immersive experiences.