The Kimi Open Platform will soon begin internal testing of its Context Caching feature, which is aimed at improving the experience of using long-context large models. By caching repeated token content, Context Caching is intended to lower the cost of requests that share the same content and to speed up API responses. This matters most for applications that send frequent requests and repeatedly reference a large initial context, such as large-scale, highly repetitive prompt scenarios.

ChinaZ.com, June 20: The Kimi Open Platform recently announced that its much-anticipated Context Caching feature will soon enter internal testing. The feature supports long-context large models and aims to improve the user experience through an efficient context caching mechanism.

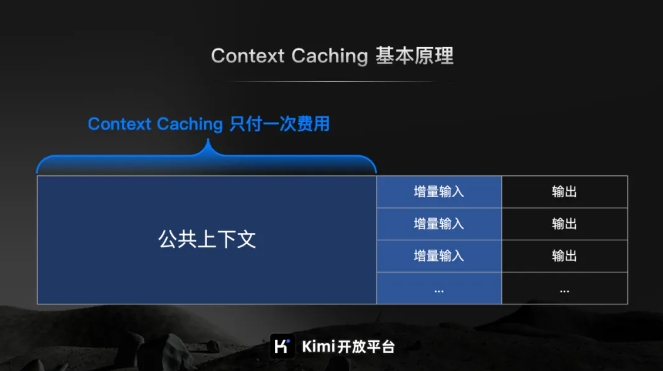

According to the Kimi Open Platform's official introduction, Context Caching is designed to reduce the cost of repeated requests for the same content by caching duplicate token content. It works by identifying and storing text fragments that have already been processed. When a user requests the same content again, the system retrieves it from the cache instead of processing it again, which speeds up API responses.
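The general idea of reusing already-processed context can be illustrated with a toy example. The sketch below is not the Kimi Open Platform's implementation or API; the `ContextCache` class, its `request` method, and the whitespace "tokenizer" are all hypothetical names and simplifications chosen for illustration. It only shows that once a shared context has been processed and stored, later requests that reuse it need to process just their new part.

```python
import hashlib


class ContextCache:
    """Toy in-memory cache keyed by a hash of the shared context text.

    Hypothetical illustration only; not the Kimi Open Platform API.
    """

    def __init__(self):
        self._store = {}           # context hash -> processed context
        self.tokens_processed = 0  # running count of tokens actually processed

    def _process(self, text):
        # Stand-in for the expensive model-side work. Splitting on
        # whitespace and calling each piece a "token" is an assumption
        # made purely to keep the example runnable.
        tokens = text.split()
        self.tokens_processed += len(tokens)
        return tokens

    def request(self, context, question):
        key = hashlib.sha256(context.encode("utf-8")).hexdigest()
        hit = key in self._store
        if not hit:
            # First time this context is seen: process and store it.
            self._store[key] = self._process(context)
        context_tokens = self._store[key]
        # The new part of the request is always processed.
        question_tokens = self._process(question)
        return {
            "cache_hit": hit,
            "prompt_tokens": len(context_tokens) + len(question_tokens),
        }


cache = ContextCache()
shared_context = "word " * 1000  # a large, repeated initial context

first = cache.request(shared_context, "What is the refund policy?")
after_first = cache.tokens_processed

second = cache.request(shared_context, "How do I reset my password?")
after_second = cache.tokens_processed

print(first["cache_hit"], after_first)                   # False 1005
print(second["cache_hit"], after_second - after_first)   # True 6
```

In this toy model the second request processes only its 6 new tokens rather than all 1006 in the prompt. How the real service bills cached tokens or how long it keeps them is not described in the announcement.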

The benefit is greatest in large-scale, highly repetitive prompt scenarios. By reusing cached content, the platform can respond quickly to large volumes of frequent requests, improving processing efficiency while reducing cost.

Context Caching is especially well suited to applications that make frequent requests and repeatedly reference a large initial context. With this feature, users can cache that context once and reuse it, improving efficiency and reducing operating costs.

The upcoming internal test of Context Caching marks an important step for the Kimi Open Platform in improving the efficiency of large models and reducing costs for users.