Peking University and the Kuaishou AI team have jointly developed VideoTetris, a new video generation framework that tackles complex (compositional) video generation. According to the team, it outperforms commercial models such as Pika and Gen-2. The framework introduces a formal definition of the compositional video generation task, generates videos that accurately follow complex instructions, and supports long video generation with progressive multi-object instructions. It addresses weaknesses of existing models in handling complex instructions and fine details, such as placing multiple objects in the correct positions and preserving each object's distinctive features.

News from ChinaZ.com on June 17: Peking University and the Kuaishou AI team have jointly tackled the problem of complex video generation. Their new framework, VideoTetris, combines details like pieces of a puzzle to generate videos from difficult, complex instructions, and it surpasses commercial models such as Pika and Gen-2 on complex video generation tasks.

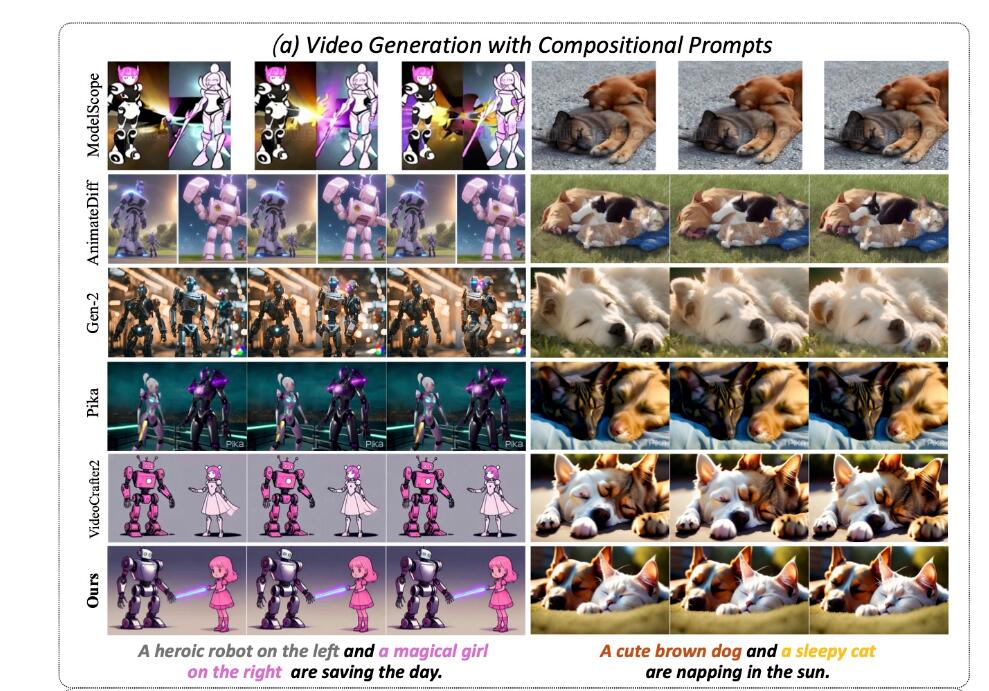

VideoTetris is the first framework to define the compositional video generation task, which comprises two subtasks: 1) video generation that follows complex compositional instructions; and 2) long video generation that follows progressive compositional multi-object instructions. The team found that almost all existing open-source and commercial models failed to generate correct videos for such prompts. For example, given the prompt "a cute brown dog on the left and a cat napping in the sun on the right", the resulting video often blends the features of the two animals together, and the result looks strange.

In contrast, VideoTetris preserves all positional information and detailed features. For long video generation it supports even more complex instructions, such as "Transition from a cute brown squirrel on a pile of hazelnuts to a cute brown squirrel and a cute white squirrel on a pile of hazelnuts." The generated video follows the order given in the instruction, and the two squirrels even exchange food naturally.

VideoTetris uses a spatio-temporal compositional diffusion method. It first decomposes the text prompt along the time axis, assigning different prompt information to different video frames. It then decomposes each frame along the spatial dimension, mapping different objects to different regions of the frame. Finally, it combines these pieces through spatio-temporal cross-attention, so that videos following compositional instructions can be generated efficiently.
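To make these three steps more concrete, here is a minimal NumPy sketch of one way such spatio-temporal composition could work. It is an illustration under stated assumptions, not the VideoTetris implementation: the per-frame schedule (`frame_plan`), the rectangular region masks, the random stand-in prompt embeddings, and the normalized, masked sum of per-region cross-attention outputs are all hypothetical choices, loosely modeled on the squirrel example above.

```python
import numpy as np

rng = np.random.default_rng(0)
D_LATENT, D_TEXT, D_HEAD = 8, 16, 8  # toy feature sizes (hypothetical)
H, W, N_FRAMES = 4, 6, 8             # toy latent frame size and clip length (hypothetical)


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(z, c, wq, wk, wv):
    """Plain cross-attention: pixel features z (N, D_LATENT) attend to prompt tokens c (T, D_TEXT)."""
    q, k, v = z @ wq, c @ wk, c @ wv
    return softmax(q @ k.T / np.sqrt(q.shape[-1])) @ v


def region_mask(x0, x1):
    """Binary mask of shape (H*W,) covering latent columns [x0, x1)."""
    m = np.zeros((H, W))
    m[:, x0:x1] = 1.0
    return m.reshape(-1)


# Hypothetical stand-ins for text-encoder outputs (5 tokens each) of each sub-prompt.
EMBED = {name: rng.normal(size=(5, D_TEXT))
         for name in ("brown squirrel", "white squirrel")}


def frame_plan(t):
    """Temporal + spatial decomposition: (sub-prompt, region mask) pairs active at frame t."""
    if t < N_FRAMES // 2:
        # First half of the clip: a single brown squirrel fills the frame.
        return [("brown squirrel", region_mask(0, W))]
    # Second half: brown squirrel on the left, white squirrel on the right.
    return [("brown squirrel", region_mask(0, W // 2)),
            ("white squirrel", region_mask(W // 2, W))]


def compositional_cross_attention(latents, wq, wk, wv):
    """latents: (N_FRAMES, H*W, D_LATENT) -> composed attention output (N_FRAMES, H*W, D_HEAD)."""
    out = np.zeros((latents.shape[0], latents.shape[1], wv.shape[1]))
    for t, z in enumerate(latents):
        plan = frame_plan(t)
        # Normalize where regions overlap; pixels covered by no region stay zero.
        weight = np.maximum(sum(m for _, m in plan), 1e-8)
        for name, m in plan:
            # Each sub-prompt only conditions the pixels inside its own region.
            out[t] += (m / weight)[:, None] * cross_attention(z, EMBED[name], wq, wk, wv)
    return out


# Usage with random toy projection weights and latents.
wq = rng.normal(size=(D_LATENT, D_HEAD))
wk = rng.normal(size=(D_TEXT, D_HEAD))
wv = rng.normal(size=(D_TEXT, D_HEAD))
latents = rng.normal(size=(N_FRAMES, H * W, D_LATENT))
out = compositional_cross_attention(latents, wq, wk, wv)
print(out.shape)  # (8, 24, 8)
```

Confining each sub-prompt's conditioning to its own region is the intuition for why a compositional approach can avoid the "fused dog and cat" failure described earlier; the hard switch at the midpoint is a simplification, and how VideoTetris actually schedules transitions between prompts is not described in this article.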

To generate higher-quality long videos, the team also proposed an enhanced training-data preprocessing method that makes long video generation more dynamic and stable. In addition, they introduced a reference frame attention mechanism that encodes previous-frame information with the model's native VAE, rather than with a CLIP encoder as other models do, which yields better content consistency.

As a result of these optimizations, long videos no longer show large-area color casts, follow complex instructions more closely, and look more dynamic and natural. The team also introduced two new evaluation metrics, VBLIP-VQA and VUnidet, extending compositional generation evaluation to video for the first time.

Experiments show that in compositional video generation, VideoTetris outperforms all open-source models and even commercial models such as Gen-2 and Pika. The code is reportedly going to be fully open-sourced.

Project address: https://top.aibase.com/tool/videotetris

In summary, VideoTetris marks a significant advance in complex video generation. Its efficient spatio-temporal compositional diffusion method and new evaluation metrics point to new directions for future video generation technology, and open-sourcing the project will give more researchers a valuable resource and help drive further progress in the field. We look forward to seeing VideoTetris applied in more scenarios in the future.