Research teams from institutions including the Chinese University of Hong Kong and the Chinese Academy of Sciences recently introduced a full-modal pre-training paradigm called MiCo, which set 37 state-of-the-art (SOTA) records in multi-modal learning. MiCo aims to build full-modal intelligence that can understand any modality and learn universal representations, and it simulates the multi-modal cognitive process of the human brain by scaling up the number of modalities, the data volume, and the model parameters. At its core, MiCo divides modalities into "knowledge modalities" and "interface modalities", designs a corresponding full-modal learning architecture, and uses multi-modal context to let modalities reinforce one another and to build cross-modal contextual relationships. The result offers new directions and ideas for research in artificial intelligence.

News from ChinaZ.com on June 17: A research team from the Chinese University of Hong Kong, the Chinese Academy of Sciences and other institutions has proposed a full-modal pre-training paradigm called MiCo (Multimodal Context). The method has achieved remarkable results in multi-modal learning, setting 37 state-of-the-art (SOTA) records.

Core features:

Full-modal understanding: MiCo aims to build full-modal intelligence that can understand any modality and learn universal representations.

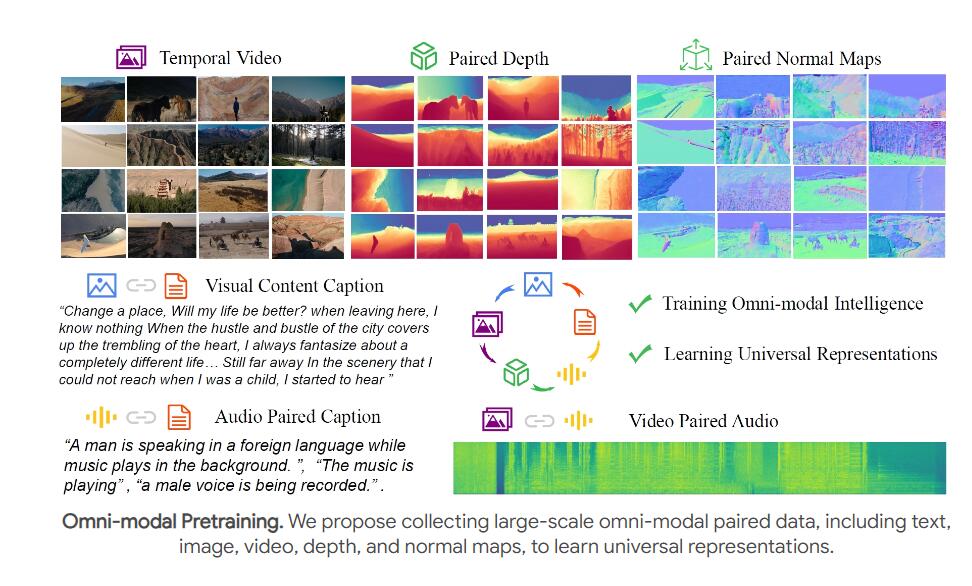

Large-scale pre-training: By scaling up the number of modalities, the data volume and the model parameters, MiCo simulates the multi-modal cognitive process of the human brain during pre-training.

Neural network structure design: MiCo divides modalities into "knowledge modalities" and "interface modalities" and designs a corresponding full-modal learning architecture, whose modalities are aligned through generative reasoning methods.

Multimodal context and scaling law: MiCo uses multimodal context to let modalities reinforce one another and to build cross-modal contextual relationships.

The experimental results show:

On single-modality perception benchmarks covering 10 different modalities, MiCo achieved 7 SOTA results.

On 25 cross-modal understanding tasks, including retrieval, question answering and captioning, MiCo achieved 20 SOTA results.

On 18 multi-modal large language model benchmarks, MiCo achieved 10 SOTA results, bringing the total to 7 + 20 + 10 = 37.

MiCo’s pre-training method:

The team jointly pre-trained on videos together with their paired audio, text descriptions, depth maps and surface normals, to simulate the visual, auditory and spatio-temporal perception capabilities of the human brain.

Multi-modal contextual relationships are constructed by extracting multi-modal features with a shared full-modal encoder (such as ViT) and extracting text features with a separate text encoder.
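
To make the idea more concrete, here is a minimal sketch in plain Python of how features from several modalities might be merged into one context and aligned with text features. It is not the team's implementation: the function names, the per-modality offset vectors, the mean pooling and the contrastive (InfoNCE-style) objective are all illustrative assumptions, and the toy 2-dimensional vectors stand in for the outputs of a real encoder such as ViT.

```python
import math

def build_context(modality_tokens, modality_offsets):
    """Concatenate per-modality token sequences into one context sequence.

    Assumption: a per-modality offset vector is added to each token so a
    shared encoder could tell the modalities apart.
    """
    context = []
    for name, tokens in modality_tokens.items():
        offset = modality_offsets[name]
        for tok in tokens:
            context.append([t + o for t, o in zip(tok, offset)])
    return context

def mean_pool(tokens):
    """Average a token sequence into a single feature vector."""
    dim = len(tokens[0])
    return [sum(tok[i] for tok in tokens) / len(tokens) for i in range(dim)]

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def contrastive_loss(context_vecs, text_vecs, temperature=0.07):
    """Symmetric InfoNCE loss; matching pairs share the same index."""
    n = len(context_vecs)
    sims = [[cosine(c, t) / temperature for t in text_vecs]
            for c in context_vecs]
    loss = 0.0
    for i in range(n):
        row = sims[i]                        # context i against every text
        col = [sims[j][i] for j in range(n)]  # text i against every context
        for logits in (row, col):
            m = max(logits)                  # subtract max for stability
            log_z = m + math.log(sum(math.exp(v - m) for v in logits))
            loss += log_z - logits[i]
    return loss / (2 * n)

# Hypothetical offsets and toy features for two video clips.
modality_offsets = {
    "video": [0.0, 0.0], "audio": [0.1, 0.1],
    "depth": [-0.1, -0.1], "normal": [0.05, 0.05],
}
sample_a = {
    "video": [[1.0, 0.0], [0.8, 0.2]], "audio": [[0.9, 0.1]],
    "depth": [[1.0, 0.1]], "normal": [[0.9, 0.0]],
}
sample_b = {
    "video": [[0.0, 1.0], [0.2, 0.8]], "audio": [[0.1, 0.9]],
    "depth": [[0.1, 1.0]], "normal": [[0.0, 0.9]],
}
contexts = [mean_pool(build_context(s, modality_offsets))
            for s in (sample_a, sample_b)]
texts = [[1.0, 0.0], [0.0, 1.0]]  # stand-in text-encoder outputs

aligned = contrastive_loss(contexts, texts)
shuffled = contrastive_loss(contexts, texts[::-1])
print("aligned loss:", aligned)
print("shuffled loss:", shuffled)
```

In this toy setup the loss is lower when each clip's pooled context is paired with its own caption than when the captions are swapped, which is the kind of signal a joint pre-training objective would exploit.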

Conclusion and future work:

The MiCo project is an important attempt in artificial intelligence to simulate the multi-modal cognition of the human brain. The team hopes it will inspire future research and lead to more powerful full-modal foundation models.

Planned future work includes incorporating more modalities, such as optical flow, IMU data and event files, to further strengthen full-modal joint pre-training.

MiCo's strong performance has set a new benchmark in multi-modal learning, and its potential for further development deserves continued attention. The team's future research is also worth watching, and I believe MiCo will continue to advance artificial intelligence technology.