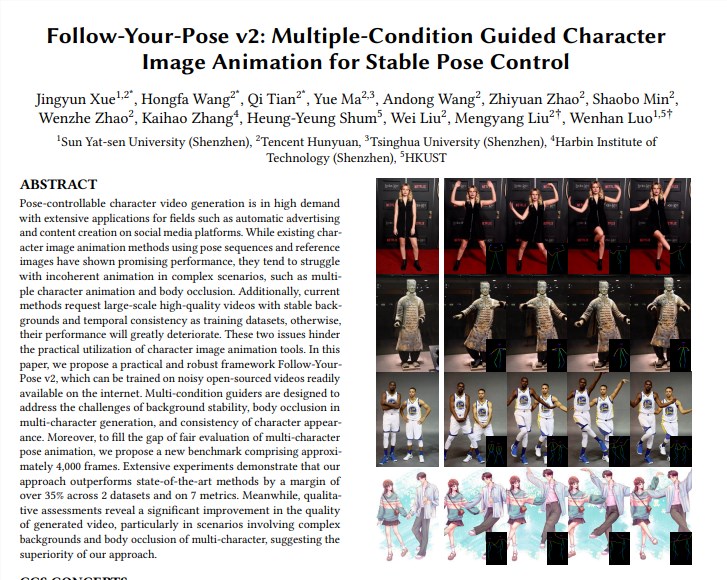

Tencent's Hunyuan team, together with Sun Yat-sen University and the Hong Kong University of Science and Technology, has released a new image-to-video model, "Follow-Your-Pose-v2", which extends pose-driven video generation from a single person to multiple people. The model can take a group photo and animate everyone in it at the same time, improving both the efficiency and the quality of video generation. Its main technical highlights are multi-person motion generation, strong generalization, the ability to train and generate from everyday photos and videos, and correct handling of occlusion between characters. It outperforms existing methods on multiple datasets, demonstrating strong performance and broad application potential.

Multi-person motion generation: the model animates several people in one video while keeping inference time low.

Strong generalization: the model generates high-quality video regardless of the subjects' age, clothing, or ethnicity, background clutter, or the complexity of the motion.

Works with everyday photos and videos: both training and generation can use ordinary photos (including casual snapshots) or videos, so there is no need to collect curated, high-quality footage.

Correct handling of occlusion: when several people in a single picture block one another, the model renders the occlusion with the correct front-to-back ordering.

Technical implementation:

The model uses an "Optical Flow Guide" to incorporate background optical flow, which lets it generate a stable background even when the camera shakes or the background itself is unstable.
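The article does not say how the background flow is computed or fed into the model. As a minimal sketch of the idea only, the function below keeps the flow vectors that belong to background pixels, discards those on the characters, and averages the rest as a crude estimate of camera motion. The function name, the grid-of-tuples layout, and the use of a person mask are hypothetical assumptions; a real pipeline would obtain the flow from a dedicated optical-flow estimator.

```python
from typing import List, Tuple

Vec = Tuple[float, float]  # (dx, dy) displacement of one pixel between two frames


def background_flow(
    flow: List[List[Vec]], person_mask: List[List[bool]]
) -> Tuple[List[List[Vec]], Vec]:
    """Hypothetical helper: zero out flow on character pixels and return the
    masked field together with the mean background motion (a rough proxy
    for camera shake)."""
    masked: List[List[Vec]] = []
    sum_dx = sum_dy = 0.0
    count = 0
    for flow_row, mask_row in zip(flow, person_mask):
        out_row: List[Vec] = []
        for (dx, dy), is_person in zip(flow_row, mask_row):
            if is_person:
                # Ignore the characters' own motion; only background flow is kept.
                out_row.append((0.0, 0.0))
            else:
                out_row.append((dx, dy))
                sum_dx += dx
                sum_dy += dy
                count += 1
        masked.append(out_row)
    mean = (sum_dx / count, sum_dy / count) if count else (0.0, 0.0)
    return masked, mean
```

For a one-row frame whose middle pixel belongs to a moving person, `background_flow([[(1.0, 0.0), (5.0, 2.0), (1.0, 0.0)]], [[False, True, False]])` keeps the two background vectors and reports a mean background motion of `(1.0, 0.0)`, so the person's own movement does not distort the camera-motion estimate.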

With an "Inference Map Guide" and a "Depth Map Guide", the model better captures each character's spatial layout in the picture and the relative positions of multiple characters, which addresses the problems of multi-character animation and body occlusion.
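The article does not detail how the depth map guide works inside the network. As a loose analogy only, the classic z-buffer rule below shows why per-pixel depth is enough to settle front-to-back order where two people overlap. The function name and data layout are hypothetical, and Follow-Your-Pose-v2 learns this relationship inside a generative model rather than compositing layers this way.

```python
from typing import List, Optional, Tuple

# One grid per character: a depth value where the character covers the pixel,
# None where it is absent. Smaller depth means closer to the camera.
DepthGrid = List[List[Optional[float]]]


def composite_by_depth(layers: List[Tuple[str, DepthGrid]]) -> List[List[str]]:
    """Hypothetical z-buffer helper: return, for every pixel, the label of the
    closest character covering it, or "bg" if no character covers it."""
    height = len(layers[0][1])
    width = len(layers[0][1][0])
    visible = [["bg"] * width for _ in range(height)]
    nearest = [[float("inf")] * width for _ in range(height)]
    for label, depth in layers:
        for y in range(height):
            for x in range(width):
                d = depth[y][x]
                # The closest character wins the pixel, so occlusion order
                # does not depend on the order in which layers are listed.
                if d is not None and d < nearest[y][x]:
                    nearest[y][x] = d
                    visible[y][x] = label
    return visible
```

For two people "A" (depth 2.0) covering the first three pixels of a 1×4 row and "B" (depth 1.0) covering the last two, the result is `[["A", "A", "B", "B"]]` whichever order the layers are passed in: B is closer, so it hides A on the pixel they share.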

Evaluation and comparison:

The team proposed a new benchmark, Multi-Character, containing about 4,000 frames of multi-character video, to evaluate multi-character generation.

Experimental results show that "Follow-Your-Pose-v2" outperforms state-of-the-art methods by more than 35% on seven metrics across two public datasets (TikTok and TED Talks).
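The article does not say how the 35% margin is computed. Assuming it is the usual relative improvement over a baseline, with the direction flipped for error-style metrics where lower is better, the calculation looks like the sketch below. The function name and the example values are hypothetical and are not figures from the paper.

```python
def relative_improvement(baseline: float, ours: float, lower_is_better: bool) -> float:
    """Hypothetical helper: fractional improvement of `ours` over `baseline`
    (0.35 means 35%). For error-style metrics a lower score is better, so the
    difference is taken the other way round."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    delta = baseline - ours if lower_is_better else ours - baseline
    return delta / abs(baseline)
```

With made-up numbers, an error metric dropping from 100.0 to 65.0 and a quality score rising from 0.60 to 0.81 both give a margin of about 0.35. Dividing by the baseline puts metrics with very different scales on a common footing, which is what allows a single percentage to summarize results across several metrics.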

Application prospects:

Image-to-video generation has broad applications in industries such as film production, augmented reality, game development, and advertising, and it is expected to be one of the most closely watched AI technologies of 2024.

Additional information:

Tencent's Hunyuan team also announced an inference acceleration library for its large open-source text-to-image model, Hunyuan DiT, which greatly improves inference efficiency and cuts image generation time by 75%.
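Reading "cuts generation time by 75%" as a reduction in wall-clock time per image (the announcement does not state the baseline settings or hardware), the arithmetic works out to roughly a fourfold speedup:

$$
t_{\text{new}} = (1 - 0.75)\,t_{\text{old}} = 0.25\,t_{\text{old}}, \qquad
\text{speedup} = \frac{t_{\text{old}}}{t_{\text{new}}} = \frac{1}{0.25} = 4
$$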

The barrier to using Hunyuan DiT has also been lowered: users can call the model from Hugging Face's official model library with three lines of code.

Paper: https://arxiv.org/pdf/2406.03035

Project page: https://top.aibase.com/tool/follow-your-pose

The release of "Follow-Your-Pose-v2" marks a major step forward for image-to-video technology, and its applications across many fields are worth watching. As the technology continues to mature, the model is likely to find use in more scenarios and make video generation more convenient and intelligent.