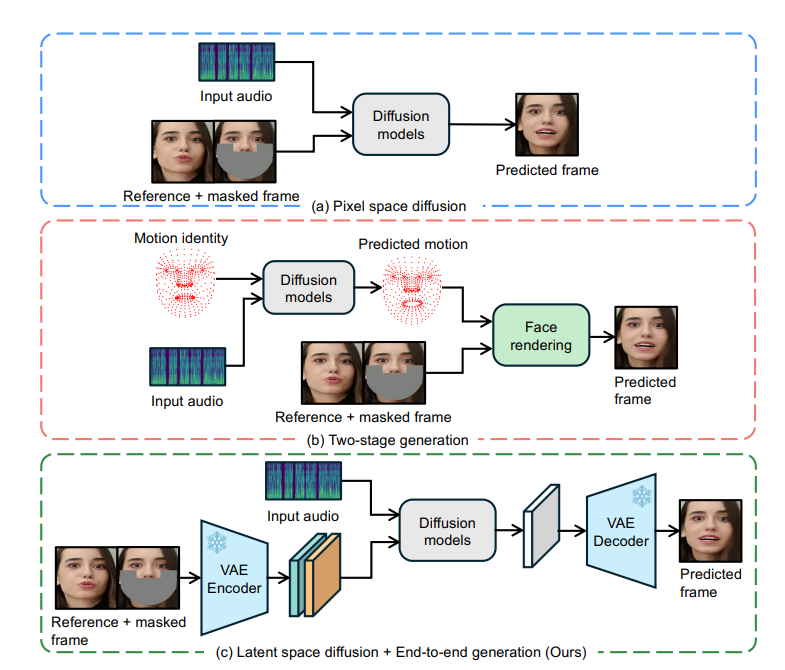

ByteDance has open-sourced LatentSync, a lip synchronization method built on an audio-conditioned latent diffusion model that precisely synchronizes a character's lip movements in a video with the audio. It uses Stable Diffusion directly, without any intermediate motion representation, to model complex audio-visual correlations, and it improves temporal consistency through Temporal Representation Alignment (TREPA). LatentSync also addresses the convergence problem of SyncNet, which significantly improves lip synchronization accuracy. Its main strengths are an end-to-end framework, high-quality generation, temporal consistency, and an optimized SyncNet, which together offer a new approach to audio-driven portrait animation.

The researchers found that diffusion-based lip synchronization methods show poor temporal consistency because the diffusion process is inconsistent across frames. To address this, LatentSync introduces Temporal Representation Alignment (TREPA). TREPA uses temporal representations extracted by a large self-supervised video model to align generated frames with ground-truth frames, which improves temporal consistency while preserving lip-sync accuracy.

The research team also studied the SyncNet convergence problem in depth. Through extensive empirical studies, they identified the key factors that affect convergence: model architecture, training hyperparameters, and data preprocessing. Optimizing these factors raised SyncNet's accuracy on the HDTF test set from 91% to 94%. Because the overall SyncNet training framework is unchanged, these findings also apply to other lip synchronization and audio-driven portrait animation methods that use SyncNet.

LatentSync Advantages

End-to-end framework: Generates synchronized lip movements directly from audio, with no intermediate motion representation.

High-quality generation: Uses the capabilities of Stable Diffusion to generate dynamic, realistic talking videos.

Temporal consistency: Improves consistency between video frames through TREPA.

SyncNet optimization: Resolves the SyncNet convergence problem and significantly improves lip synchronization accuracy.

Working principle

LatentSync is built on an image-to-image inpainting framework, which takes a masked image as input. To incorporate the facial visual features of the original video, the model also takes a reference image. These inputs are concatenated along the channel dimension and fed into the U-Net.
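
The channel concatenation can be illustrated with a minimal sketch. The article does not give tensor shapes, so everything here is an assumption: 4-channel latents (as produced by Stable Diffusion's VAE), a 1-channel mask, and a noisy latent alongside the masked and reference inputs, as is common in inpainting setups. The helper names are hypothetical.

```python
# Channels-first toy tensors: tensor[c][y][x]. All shapes are assumptions.

def make_tensor(channels, height, width, fill=0.0):
    """Build a channels-first nested list filled with one value."""
    return [[[fill] * width for _ in range(height)] for _ in range(channels)]

def concat_channels(*tensors):
    """Concatenate channels-first tensors along the channel axis."""
    height, width = len(tensors[0][0]), len(tensors[0][0][0])
    out = []
    for t in tensors:
        # Every input must share the same spatial size.
        assert len(t[0]) == height and len(t[0][0]) == width
        out.extend(t)
    return out

H, W = 8, 8  # toy latent resolution
noisy_latent = make_tensor(4, H, W)       # latent being denoised
mask = make_tensor(1, H, W, fill=1.0)     # 1 marks the region to inpaint
masked_latent = make_tensor(4, H, W)      # encoded masked frame
reference_latent = make_tensor(4, H, W)   # encoded reference frame

unet_input = concat_channels(noisy_latent, mask, masked_latent, reference_latent)
print(len(unet_input))  # 13
```

Under these assumed channel counts the U-Net's first convolution would see 13 input channels; a real implementation would do the same thing with a tensor concatenation along the channel axis.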

The model uses the pre-trained audio feature extractor Whisper to extract audio embeddings. Lip movements may be affected by the audio of surrounding frames, so the model bundles the audio of multiple surrounding frames as input to provide more temporal information. Audio embeddings are integrated into U-Net through cross-attention layers.
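
As a rough illustration of bundling audio from surrounding frames, the sketch below gathers a fixed window of per-frame audio features around a target frame. The window radius, the clamping at the clip edges, and the function name `audio_window` are assumptions for illustration, not details from the project.

```python
def audio_window(features, frame_idx, radius=2):
    """Return features for frames [frame_idx - radius, frame_idx + radius].

    Indices past either end are clamped, so edge frames repeat the
    first or last available feature (an assumed padding choice).
    """
    last = len(features) - 1
    return [features[min(max(i, 0), last)]
            for i in range(frame_idx - radius, frame_idx + radius + 1)]

per_frame = [f"a{i}" for i in range(6)]  # placeholder per-frame embeddings
print(audio_window(per_frame, 0))  # ['a0', 'a0', 'a0', 'a1', 'a2']
print(audio_window(per_frame, 3))  # ['a1', 'a2', 'a3', 'a4', 'a5']
```

Each window would then serve as the conditioning sequence that the cross-attention layers attend to for that frame.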

SyncNet requires input in image space, but the diffusion model predicts in the noise space. To bridge this gap, the model first predicts the noise and then estimates the clean latent in a single step. The study found that training SyncNet in pixel space works better than training it in latent space, possibly because information about the lip region is lost during VAE encoding.
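
The single-step estimate can be sketched as follows, assuming the standard DDPM forward process z_t = sqrt(ᾱ_t)·z_0 + sqrt(1 − ᾱ_t)·ε with a noise-predicting network. The article does not spell out the formula, so treat this as one common formulation rather than the project's exact code; `estimate_clean_latent` is a hypothetical name.

```python
import math

def estimate_clean_latent(z_t, eps_pred, alpha_bar_t):
    """One-step estimate of the clean latent from a noisy latent.

    Inverts z_t = sqrt(a) * z_0 + sqrt(1 - a) * eps, where a = alpha_bar_t.
    """
    sqrt_a = math.sqrt(alpha_bar_t)
    sqrt_one_minus_a = math.sqrt(1.0 - alpha_bar_t)
    return [(z - sqrt_one_minus_a * e) / sqrt_a for z, e in zip(z_t, eps_pred)]

# Round trip: with a perfect noise prediction the estimate recovers z_0.
z_0 = [0.5, -1.0, 2.0]
eps = [0.1, 0.3, -0.2]
a = 0.7
z_t = [math.sqrt(a) * z + math.sqrt(1 - a) * e for z, e in zip(z_0, eps)]
z_0_hat = estimate_clean_latent(z_t, eps, a)
```

The estimated latent can then be decoded by the VAE so that a pixel-space SyncNet has an image to score.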

Training is divided into two stages. In the first stage, the U-Net learns visual features without decoding to pixel space and without the SyncNet loss. The second stage adds the SyncNet loss, supervised in the decoded pixel space, and uses an LPIPS loss to improve visual quality. For the model to learn temporal information correctly, the input noise must also be temporally consistent, so the model uses a mixed noise model. In addition, during data preprocessing an affine transformation is applied to frontalize the faces.
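
The article only names the mixed noise model, so the sketch below is an assumption: it follows one common formulation from video diffusion work in which each frame's noise is the sum of a component shared by all frames and a component sampled independently per frame, scaled so the total variance stays 1. The parameter `alpha` and both function names are hypothetical.

```python
import math
import random

def mixed_noise_scales(alpha):
    """Standard deviations of the shared and per-frame noise components."""
    shared_std = math.sqrt(alpha**2 / (1.0 + alpha**2))
    indep_std = math.sqrt(1.0 / (1.0 + alpha**2))
    return shared_std, indep_std

def sample_mixed_noise(num_frames, dim, alpha=1.0, rng=None):
    """Sample noise for each frame as shared + independent components."""
    rng = rng or random.Random()
    shared_std, indep_std = mixed_noise_scales(alpha)
    # One shared vector, reused by every frame, correlates the frames.
    shared = [rng.gauss(0.0, shared_std) for _ in range(dim)]
    return [[s + rng.gauss(0.0, indep_std) for s in shared]
            for _ in range(num_frames)]

noise = sample_mixed_noise(num_frames=16, dim=4, alpha=1.0, rng=random.Random(0))
```

In this formulation a larger `alpha` makes the frames' noise more correlated, while `alpha = 0` falls back to fully independent per-frame noise.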

TREPA technology

TREPA improves temporal consistency by aligning the temporal representations of generated and real image sequences. It uses the large-scale self-supervised video model VideoMAE-v2 to extract these representations. Unlike losses that only measure the distance between individual images, temporal representations capture correlations across the image sequence, which improves overall temporal consistency. The study found that TREPA does not harm lip synchronization accuracy and can even improve it.
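
To illustrate the idea of comparing sequences in a temporal feature space, here is a toy sketch. The encoder is a hypothetical stand-in (frame-to-frame differences), not VideoMAE-v2, and the mean squared distance is an assumed choice of metric.

```python
def toy_temporal_encoder(frames):
    """Hypothetical stand-in for a video encoder: frame-to-frame differences."""
    return [b - a
            for prev, cur in zip(frames, frames[1:])
            for a, b in zip(prev, cur)]

def temporal_alignment_loss(generated, real, encoder=toy_temporal_encoder):
    """Mean squared distance between temporal representations."""
    g, r = encoder(generated), encoder(real)
    return sum((x - y) ** 2 for x, y in zip(g, r)) / len(g)

real = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]]      # smooth motion
steady = [[0.5, 0.5], [1.5, 1.5], [2.5, 2.5]]    # same motion, constant offset
jittery = [[0.0, 0.0], [2.0, 2.0], [2.0, 2.0]]   # uneven motion

print(temporal_alignment_loss(steady, real))   # 0.0
print(temporal_alignment_loss(jittery, real))  # 1.0
```

The toy loss ignores a constant per-frame offset but penalizes uneven motion, which is the kind of sequence-level signal a purely per-image distance cannot isolate.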

SyncNet convergence issues

The researchers found that the SyncNet training loss tends to stall near 0.69 and does not decrease further. Through extensive experiments, they found that batch size, the number of input frames, and the data preprocessing method have a significant impact on SyncNet's convergence. Model architecture also affects convergence, but to a lesser extent.
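
One plausible reading of the 0.69 figure, assuming SyncNet is trained with a binary cross-entropy loss on in-sync versus out-of-sync pairs (the article does not state the loss), is that it equals ln 2, the loss of a model that always predicts a probability of 0.5:

```python
import math

def binary_cross_entropy(p, label):
    """BCE for one predicted probability p and a 0/1 label."""
    return -(label * math.log(p) + (1 - label) * math.log(1 - p))

chance_loss = binary_cross_entropy(0.5, 1)
print(round(chance_loss, 4))  # 0.6931
```

Under that assumption, a loss stuck near 0.69 means the network is doing no better than guessing.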

Experimental results show that LatentSync outperforms other state-of-the-art lip synchronization methods on multiple metrics. The gain is most pronounced in lip-sync accuracy, thanks to the optimized SyncNet and the audio cross-attention layers, which better capture the relationship between audio and lip movements. TREPA also significantly improves LatentSync's temporal consistency.

Project address: https://github.com/bytedance/LatentSync

The open-sourcing of LatentSync is a notable step forward for lip synchronization technology. Its accuracy and its optimized training methods merit further study and application. The technology is expected to play a growing role in video production, virtual reality, and other fields.