The practical effectiveness of generative language models is often limited by the decoding strategy used at inference time. Existing alignment methods such as RLHF mainly optimize the model's win rate and ignore how decoding strategies affect performance. This leads to inefficiency and makes output quality hard to guarantee. To address this problem, Google DeepMind and Google Research proposed the InfAlign framework, which integrates the inference strategy into the model alignment process to improve the model's inference-time performance and reliability.

Generative language models face many challenges on the path from training to real-world application. A central one is how to achieve the best possible performance during the inference phase.

Current approaches, such as reinforcement learning from human feedback (RLHF), mainly focus on improving the model's win rate but often ignore the decoding strategies used at inference time, such as Best-of-N sampling and controlled decoding. This mismatch between the training objective and actual usage can lead to inefficiency and degrade the quality and reliability of the output.

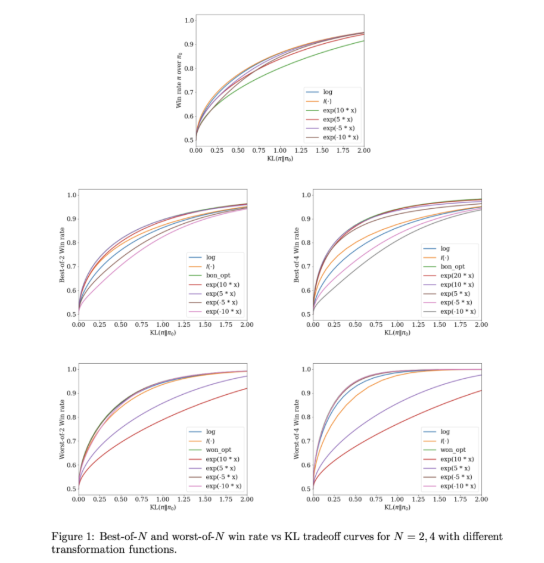

To address these issues, Google DeepMind and Google Research developed InfAlign, an inference-aware alignment framework for machine learning models. InfAlign incorporates inference-time methods into the alignment process to bridge the gap between training and deployment. It uses a calibrated reinforcement learning method that adjusts the reward function to the specific inference strategy being used. InfAlign is particularly effective with techniques such as Best-of-N sampling (generating multiple responses and selecting the best one) and Worst-of-N (commonly used in safety evaluations), helping the aligned model perform well both in controlled settings and in real-world scenarios.
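Best-of-N and Worst-of-N are simple to express once a generator and a reward model are available. The sketch below is illustrative only: `toy_generate` and `toy_reward` are hypothetical stand-ins for a language model and a reward model, and none of these functions are part of InfAlign itself.

```python
import random
from typing import Callable, List

GenerateFn = Callable[[str], str]        # prompt -> one sampled response
RewardFn = Callable[[str, str], float]   # (prompt, response) -> score


def sample_n(generate: GenerateFn, prompt: str, n: int) -> List[str]:
    """Draw n independent candidate responses for one prompt."""
    return [generate(prompt) for _ in range(n)]


def best_of_n(generate: GenerateFn, reward: RewardFn, prompt: str, n: int) -> str:
    """Best-of-N: keep the candidate the reward model scores highest."""
    return max(sample_n(generate, prompt, n), key=lambda y: reward(prompt, y))


def worst_of_n(generate: GenerateFn, reward: RewardFn, prompt: str, n: int) -> str:
    """Worst-of-N: keep the lowest-scoring candidate, e.g. to probe how unsafe
    a model can be when an adversary gets n tries."""
    return min(sample_n(generate, prompt, n), key=lambda y: reward(prompt, y))


# Hypothetical stand-ins: a "model" that returns a random canned reply and a
# "reward model" that simply prefers longer replies.
REPLIES = ["ok", "sure thing", "here is a detailed answer"]


def toy_generate(prompt: str) -> str:
    return random.choice(REPLIES)


def toy_reward(prompt: str, response: str) -> float:
    return float(len(response))


if __name__ == "__main__":
    random.seed(0)
    print("best: ", best_of_n(toy_generate, toy_reward, "hi", n=8))
    print("worst:", worst_of_n(toy_generate, toy_reward, "hi", n=8))
```

Because the selection step only looks at reward rankings across N samples, a policy tuned for plain single-sample decoding is not necessarily the one that performs best once this selection is applied. That is the training/inference mismatch InfAlign targets.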

The core of InfAlign is the Calibrate-and-Transform Reinforcement Learning (CTRL) algorithm, which proceeds in three steps: calibrating the reward scores, transforming the calibrated scores according to the inference strategy, and solving a KL-regularized optimization problem. By tailoring the reward transformation to each inference scenario, InfAlign aligns the training objective with inference-time requirements. The method improves the inference-time win rate while remaining computationally efficient. InfAlign also makes the model more robust, so it can cope with a variety of decoding strategies and produce consistently high-quality output.
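The three CTRL steps can be illustrated on a toy problem with a single prompt and a small, finite set of candidate responses. This is a minimal sketch under our own assumptions, not the paper's implementation: we assume calibration maps a raw reward to its quantile under the reference policy `pi_ref`, we use a hypothetical exponential transform `exp(t * c)` with a made-up parameter `t`, and we solve the KL-regularized objective `E_pi[R] - beta * KL(pi || pi_ref)` with its standard closed form, `pi*(y) ∝ pi_ref(y) * exp(R(y) / beta)`. A real system would estimate quantiles from sampled responses and train a neural policy with RL instead.

```python
import math
from typing import Dict

Dist = Dict[str, float]  # response -> probability or score


def calibrate(rewards: Dist, ref_probs: Dist) -> Dist:
    """Step 1 (assumed form): replace each raw reward by its quantile under the
    reference policy, C(y) = P_{y' ~ pi_ref}[r(y') <= r(y)]."""
    return {
        y: sum(p for y2, p in ref_probs.items() if rewards[y2] <= r)
        for y, r in rewards.items()
    }


def transform(calibrated: Dist, t: float) -> Dist:
    """Step 2 (hypothetical choice): exponential transform exp(t * c).
    A larger t puts more weight on the top quantiles (a Best-of-N-style emphasis)."""
    return {y: math.exp(t * c) for y, c in calibrated.items()}


def kl_regularized_policy(ref_probs: Dist, transformed: Dist, beta: float) -> Dist:
    """Step 3: closed-form maximizer of E_pi[R] - beta * KL(pi || pi_ref) over a
    finite response set: pi*(y) is proportional to pi_ref(y) * exp(R(y) / beta)."""
    weights = {y: p * math.exp(transformed[y] / beta) for y, p in ref_probs.items()}
    z = sum(weights.values())
    return {y: w / z for y, w in weights.items()}


if __name__ == "__main__":
    ref = {"a": 0.5, "b": 0.3, "c": 0.2}   # toy reference policy pi_ref
    raw = {"a": 0.1, "b": 2.0, "c": 5.0}   # toy raw reward-model scores
    cal = calibrate(raw, ref)              # a -> 0.5, b -> 0.8, c -> 1.0
    policy = kl_regularized_policy(ref, transform(cal, t=3.0), beta=1.0)
    print(cal)
    print(policy)
```

Increasing `t` or decreasing `beta` moves the policy further from `pi_ref` toward high-quantile responses. The transform the paper actually pairs with each inference strategy may differ from this exponential placeholder.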

InfAlign's effectiveness was verified in experiments on Anthropic's helpfulness and harmlessness datasets. Compared with existing methods, InfAlign improves the inference-time win rate by 8%-12% for Best-of-N sampling and by 4%-9% in Worst-of-N safety evaluations. These gains come from its calibrated reward transformation, which addresses the miscalibration of the reward model and keeps performance consistent across different inference scenarios.
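Why calibration helps can be seen with a short, standard derivation. This is our own sketch rather than a result quoted from the paper, and it assumes the calibrated reward $\mathcal{C}_r$ is the quantile of a response's raw reward under the reference policy $\pi_{\mathrm{ref}}$, with no ties between rewards. Under those assumptions the calibrated score of a reference sample is uniform on $[0, 1]$, it is unchanged by any strictly increasing rescaling $g$ of the reward model, and the win rate of Best-of-N (or Worst-of-N) against a single reference sample depends only on $N$:

```latex
\begin{aligned}
\mathcal{C}_r(x, y) &= \Pr_{y' \sim \pi_{\mathrm{ref}}(\cdot \mid x)}\big[r(x, y') \le r(x, y)\big], \\
y \sim \pi_{\mathrm{ref}}(\cdot \mid x) \;&\Rightarrow\; U = \mathcal{C}_r(x, y) \sim \mathrm{Uniform}[0, 1], \\
\mathcal{C}_{g \circ r} &= \mathcal{C}_r \quad \text{for any strictly increasing } g, \\
U_{\mathrm{BoN}} = \max(U_1, \dots, U_N) \;&\Rightarrow\; \Pr[U_{\mathrm{BoN}} \le u] = u^N, \\
\Pr[U_{\mathrm{BoN}} > U] &= \mathbb{E}[U_{\mathrm{BoN}}] = \int_0^1 (1 - u^N)\,du = \frac{N}{N+1}, \\
\Pr[U_{\mathrm{WoN}} > U] &= \mathbb{E}[\min(U_1, \dots, U_N)] = \frac{1}{N+1}.
\end{aligned}
```

For example, $N = 4$ gives a Best-of-N win rate of $4/5 = 0.8$ against a single reference sample, whatever the scale of the underlying reward model.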

InfAlign represents an important advance in aligning generative language models. By making alignment inference-aware, it addresses the critical gap between training and deployment. Its solid theoretical foundation and empirical results highlight its potential to improve the alignment of AI systems more broadly.

Link: https://arxiv.org/abs/2412.19792

Highlights:

- InfAlign is a new framework from Google DeepMind that aims to improve the performance of language models at the inference stage.
- The framework uses calibrated reinforcement learning to adjust the reward function to the inference strategy, aligning the training objective with inference-time requirements.
- Experimental results show that InfAlign significantly improves the model's inference-time win rate across multiple tasks, demonstrating good adaptability and reliability.

The InfAlign framework offers new ideas for addressing the efficiency and quality problems of generative language models at inference time, and its contribution to model robustness and reliability deserves attention. Future research can explore applying InfAlign to other models and tasks to further advance generative AI.