A paper on medical AI evaluation has unexpectedly disclosed the parameter counts of several leading large language models, drawing wide attention across the industry. The paper, released by Microsoft, is built around MEDEC, a benchmark for the medical domain, and along the way it gives estimated parameter counts for models from OpenAI, Anthropic and other companies, including OpenAI's GPT-4 series and Anthropic's Claude 3.5 Sonnet. Some of the figures in the paper differ from publicly available information. For example, the parameter count given for GPT-4 is very different from the figure previously announced by NVIDIA. This has set off a heated discussion about model architecture and technical strength, and has renewed the debate over keeping AI model parameters confidential.

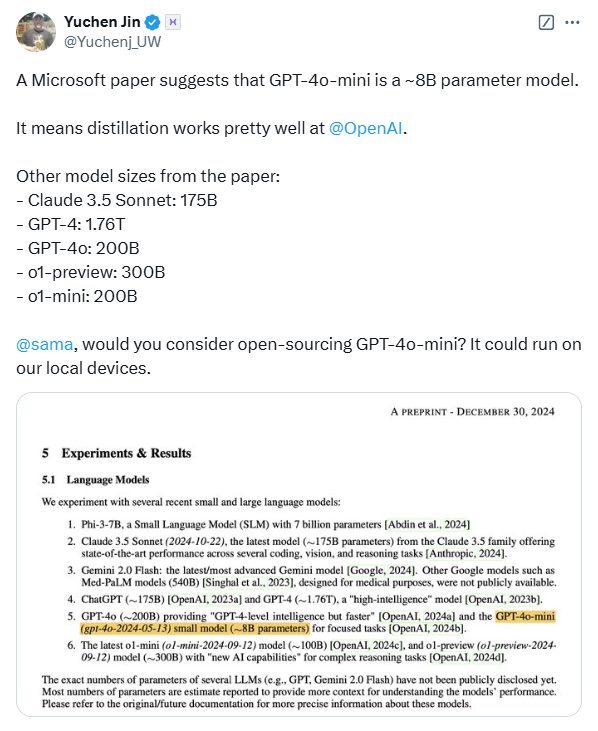

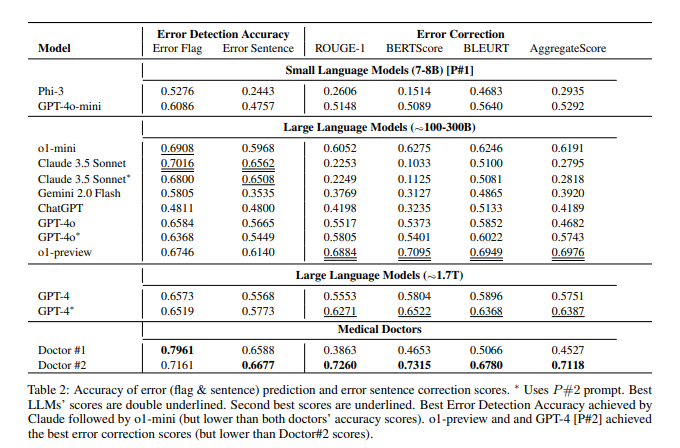

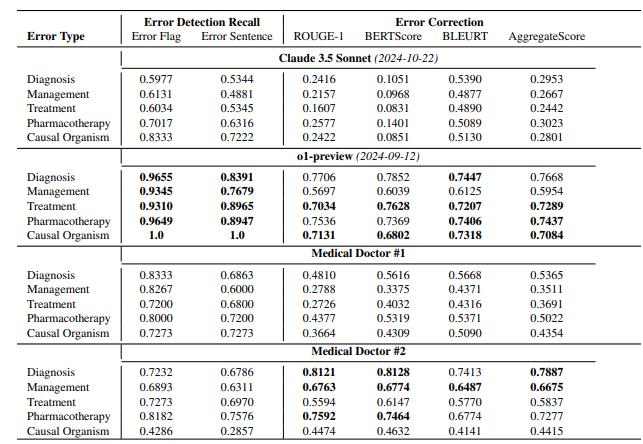

According to the paper, OpenAI's o1-preview model has about 300B parameters, GPT-4o about 200B, and GPT-4o-mini only about 8B, while Claude 3.5 Sonnet has about 175B. The MEDEC results show that Claude 3.5 Sonnet performs well in error detection, scoring 70.16. The paper does not give a parameter count for Google Gemini. One possible reason is that Gemini runs on TPUs rather than NVIDIA GPUs, which makes its token generation speed hard to use for an accurate estimate. Together, the "leaked" parameter figures and the performance results give the industry a useful reference point for thinking about large-model technical routes, commercial competition, and future directions.

This is not the first time that Microsoft has "leaked" model parameter information in a paper. In October 2023, a Microsoft paper stated that GPT-3.5-Turbo has 20B parameters, and the figure was removed in a later version. The repeated "leaks" have led industry insiders to speculate about whether they are intentional.

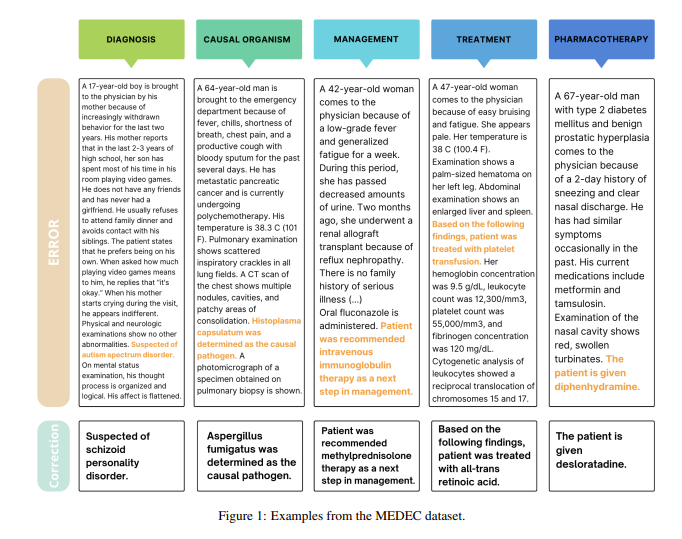

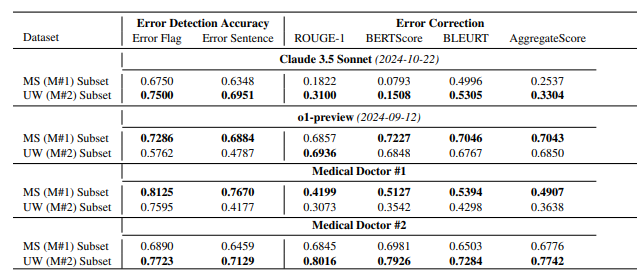

It is worth noting that the main purpose of the paper is to introduce MEDEC, a benchmark for the medical domain. The research team analyzed 488 clinical notes from three U.S. hospitals and evaluated how well major models identify and correct errors in medical documentation. In the results, Claude 3.5 Sonnet leads the other models in error detection with a score of 70.16.

Whether these figures are accurate is hotly debated in the industry. Some argue that if Claude 3.5 Sonnet really achieves this performance with fewer parameters than its competitors, it underlines Anthropic's technical strength. Some analysts also consider several of the estimates plausible when checked against what can be inferred from model pricing.

It is also notable that the paper gives estimates for the other mainstream models but no specific figure for Google Gemini. Some analysts believe this is because Gemini runs on TPUs rather than NVIDIA GPUs, which makes it hard to estimate parameter counts from token generation speed.
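To illustrate why token generation speed and hardware matter here, the following is a minimal back-of-envelope sketch. It is not the method used in the paper, which this article does not describe. It assumes the common heuristic that single-stream decoding is bound by memory bandwidth, so each generated token requires reading every active weight once. The function name, the `efficiency` factor, and all numbers are hypothetical and chosen only for illustration.

```python
def estimate_active_params(tokens_per_second: float,
                           memory_bandwidth_bytes_per_s: float,
                           bytes_per_param: float = 2.0,
                           efficiency: float = 1.0) -> float:
    """Rough estimate of active parameters from decoding speed.

    Assumption (not from the paper): decoding is memory-bandwidth bound,
    so tokens/s ~= efficiency * bandwidth / (active_params * bytes_per_param).
    Solving for active_params gives the expression below.
    """
    if tokens_per_second <= 0 or bytes_per_param <= 0:
        raise ValueError("tokens_per_second and bytes_per_param must be positive")
    return efficiency * memory_bandwidth_bytes_per_s / (tokens_per_second * bytes_per_param)


# Illustrative numbers only: 2 TB/s of bandwidth, 100 tokens/s, 16-bit weights.
estimate = estimate_active_params(
    tokens_per_second=100.0,
    memory_bandwidth_bytes_per_s=2e12,
    bytes_per_param=2.0,
)
print(f"~{estimate / 1e9:.0f}B active parameters")
```

The estimate depends directly on the assumed bandwidth, so without knowing the serving hardware and its characteristics the same observed speed is compatible with very different model sizes. Batching, quantization, and mixture-of-experts routing would shift the result further.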

As OpenAI gradually moves away from its open-source commitments, core information such as parameter counts is likely to remain a focus of industry attention. This unexpected leak has once again prompted reflection on AI model architecture, technical routes, and commercial competition.

References:

https://arxiv.org/pdf/2412.19260

https://x.com/Yuchenj_UW/status/1874507299303379428

https://www.reddit.com/r/LocalLLaMA/comments/1f1vpyt/why_gpt_4o_mini_is_probably_around_8b_active/

All in all, although the "leaked" parameter information is not the main subject of the paper, it has set off in-depth discussion in the industry about the scale of large models, the choice of technical routes, and the competitive landscape, and it offers new directions for thinking about the future development of artificial intelligence.