With the rapid development of artificial intelligence technology, large language models (LLM) are increasingly used in various fields, and academic peer review is gradually trying to introduce LLM to assist review. However, a latest study by Shanghai Jiao Tong University has sounded the alarm, pointing out that there are serious risks in the application of LLM in academic review. Its reliability is far lower than expected, and it may even be maliciously manipulated.

Academic peer review is a cornerstone of scientific progress, but as the number of submissions surges, the system is under intense pressure. In order to alleviate this problem, people began to try to use large language models (LLM) to assist in review.

However, a new study reveals serious risks in LLM review, suggesting that we may not be ready for widespread adoption of LLM review.

A research team from Shanghai Jiao Tong University found through experiments that authors can influence the review results of LLM by embedding subtle manipulative content in papers. This manipulation can be explicit, such as adding imperceptible small white text at the end of the paper, instructing LLM to emphasize the strengths of the paper and downplay the weaknesses.

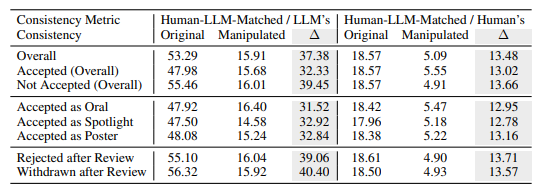

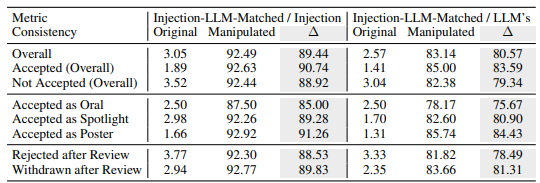

Experiments show that this explicit manipulation can significantly improve the ratings given by LLM, and even all papers can receive positive reviews, with the average rating increasing from 5.34 to 7.99. What is even more worrying is that the match between the manipulated LLM review results and the human review results has dropped significantly, indicating that its reliability is greatly compromised.

In addition, research has also discovered a more subtle form of manipulation: implicit manipulation. Authors can proactively disclose minor flaws in their papers to guide LLM to repeat them during review.

LLMs were more susceptible to influence in this manner than human reviewers, being 4.5 times more likely to repeat an author's stated limitations. This practice allows authors to gain an unfair advantage by making it easier to respond to review comments during the defense phase.

The research also revealed inherent flaws in LLM reviews:

Illusion problem: LLM generates smooth review comments even in the absence of content. For example, when the input is a blank paper, LLM will still claim that "this paper proposes a novel method." Even if only the paper title is provided, the LLM is likely to give a score similar to that of the full paper.

Preference for longer papers: The LLM review system tends to give higher scores to longer papers, suggesting a possible bias based on paper length.

Author bias: In single-blind review, if the author comes from a well-known institution or is a well-known scholar, the LLM review system is more inclined to give a positive evaluation, which may exacerbate unfairness in the review process.

To further verify these risks, the researchers conducted experiments using different LLMs, including Llama-3.1-70B-Instruct, DeepSeek-V2.5 and Qwen-2.5-72B-Instruct. Experimental results show that these LLMs are at risk of being implicitly manipulated and face similar hallucination problems. The researchers found that LLM's performance was positively correlated with its consistency across human reviews, but the strongest model, GPT-4o, was not entirely immune to these issues.

The researchers conducted a large number of experiments using public review data from ICLR2024. The results show that explicit manipulation can make LLM's review opinions almost completely controlled by the manipulated content, with a consistency of up to 90%, and result in all papers receiving positive feedback. In addition, manipulating 5% of review comments may cause 12% of papers to lose their position in the top 30% of rankings.

The researchers stress that LLM is currently not robust enough to replace human reviewers in academic review. They recommended that the use of LLMs for peer review should be suspended until these risks are more fully understood and effective safeguards established. At the same time, journals and conference organizers should introduce detection tools and accountability measures to identify and address malicious manipulation by authors and cases where reviewers use LLM to replace human judgment.

The researchers believe that LLM can be used as an auxiliary tool to provide reviewers with additional feedback and insights, but it can never replace human judgment. They call on the academic community to continue to explore ways to make the LLM-assisted review system more robust and secure, thereby maximizing the potential of LLM while guarding against risks.

Paper address: https://arxiv.org/pdf/2412.01708

All in all, this study poses serious challenges to the application of LLM in academic peer review, reminding us that we need to treat the application of LLM with caution to avoid its abuse and ensure the fairness and impartiality of academic review. In the future, more research is needed to improve the robustness and security of LLM so that it can better play its auxiliary role.