At the end of the year, Beijing Zhipu Huazhang Technology Co., Ltd. launched GLM-Zero-Preview, the first version of GLM-Zero and the company's first reasoning model trained with scaled-up reinforcement learning. The model shows strong capabilities in mathematical logic, code writing, and complex problem reasoning, and has achieved results comparable to similar OpenAI models on several authoritative benchmarks. GLM-Zero-Preview improves expert-task capabilities while maintaining strong performance on general tasks, setting a new benchmark for progress in artificial intelligence reasoning.

GLM-Zero-Preview focuses on improving the reasoning capabilities of artificial intelligence, particularly in tasks that demand deep reasoning, such as mathematical logic, code writing, and complex problem solving. Compared with its base model, GLM-Zero-Preview significantly improves expert-task capabilities while maintaining general-task capabilities. Its performance on the AIME 2024, MATH500, and LiveCodeBench evaluations is on par with OpenAI o1-preview.

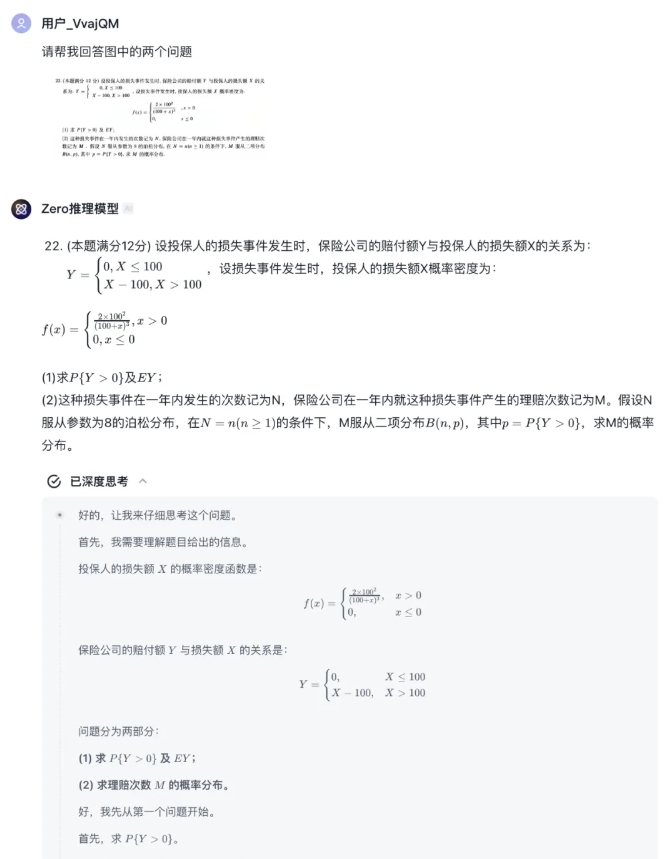

Users can now try GLM-Zero-Preview for free through the "Zero Reasoning Model" agent on the Zhipu Qingyan platform. The platform supports text and image uploads, and the model outputs its complete reasoning process. Developers can also call the model through the Zhipu open platform API.

Although GLM-Zero-Preview still lags behind OpenAI's o3 model, Zhipu Huazhang Technology Co., Ltd. plans to keep optimizing and iterating on its reinforcement learning technology and will soon launch the official version of GLM-Zero, extending its deep-thinking capabilities from mathematical logic to more general technical domains.

In terms of model performance, GLM-Zero-Preview demonstrates how important reinforcement learning is for strengthening a model's deep reasoning. As training scales up, the model's deep reasoning performance improves steadily. The model also follows a scaling law at inference time: as the number of tokens it is allowed to think with grows, and more computation is spent, the quality of its answers steadily improves. During reasoning, GLM-Zero-Preview can make decisions autonomously, break problems down, and try multiple approaches to a solution, much as a human does when thinking through a problem.

In real test cases, GLM-Zero-Preview showed that in logical reasoning it can identify logical loopholes and simulate multiple hypotheses. In mathematics, the model has strong inductive and deductive abilities, handles complex mathematical operations quickly, and performed at the level of an outstanding candidate on the Mathematics I paper of the 2025 national postgraduate entrance examination. In programming, GLM-Zero-Preview is proficient in multiple programming languages and helps developers write code quickly.

Zhipu Qingyan:

https://chatglm.cn/main/gdetail/676411c38945bbc58a905d31?lang=zh

Zhipu open platform:

https://bigmodel.cn/dev/api/normal-model/glm-zero-preview

The launch of GLM-Zero-Preview marks significant progress by Zhipu Huazhang in artificial intelligence reasoning. Offering the model for free also makes it easy for developers and users to try it and give feedback, providing valuable data for optimizing future versions. We look forward to the official release of GLM-Zero and the further advances in artificial intelligence it may bring.