On January 15, 2025, MiniMax released MiniMax-01, a new open-source model series comprising the text model MiniMax-Text-01 and the vision-language multimodal model MiniMax-VL-01. The models have 456 billion total parameters, of which 45.9 billion are activated per token. Architecturally, the series is the first to apply linear attention at large scale, moving beyond the limitations of the conventional Transformer and efficiently handling contexts of up to 4 million tokens, far longer than existing models support. MiniMax-01 performs on par with leading international models and shows clear advantages in long-text processing. Its efficiency and low price make it highly competitive for commercial applications.

MiniMax announced the open-source release of its new MiniMax-01 series on January 15, 2025. The series includes the foundation language model MiniMax-Text-01 and the vision-language multimodal model MiniMax-VL-01. Its key architectural innovation is the first large-scale implementation of linear attention, which breaks with the limitations of the traditional Transformer architecture. With 456 billion total parameters and 45.9 billion activated per token, the models deliver overall performance comparable to leading international models and can efficiently handle contexts of up to 4 million tokens: 32 times the context length of GPT-4o and 20 times that of Claude-3.5-Sonnet.

MiniMax believes 2025 will be a pivotal year for the rapid development of AI agents. Both single-agent and multi-agent systems need longer context windows to support persistent memory and extensive communication. The MiniMax-01 series is designed to meet this need, taking a first step toward the foundational capabilities that complex agents require.

Thanks to its architectural innovations, efficiency optimizations, and a cluster design that integrates training and inference, MiniMax can offer text and multimodal understanding API services at prices among the lowest in the industry. Standard pricing is 1 yuan per million input tokens and 8 yuan per million output tokens. The services are now available for developers to try on the MiniMax Open Platform and its international version.
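As a rough illustration of the pricing above, the sketch below estimates the charge for a request from its token counts. It assumes billing is strictly linear at the quoted list prices; the constant and function names are hypothetical, and actual invoices may apply rounding, minimums, or other terms not described here.

```python
# Hypothetical cost estimator, ASSUMING strictly linear billing at the quoted
# MiniMax-01 list prices: 1 yuan per million input tokens, 8 yuan per million output tokens.
INPUT_YUAN_PER_MILLION = 1.0
OUTPUT_YUAN_PER_MILLION = 8.0


def estimate_cost_yuan(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated charge in yuan for the given token counts."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    return (input_tokens * INPUT_YUAN_PER_MILLION
            + output_tokens * OUTPUT_YUAN_PER_MILLION) / 1_000_000


# Example: filling a 4-million-token context and generating a 2,000-token answer.
print(estimate_cost_yuan(4_000_000, 2_000))  # prints 4.016
```

Under these assumptions, output tokens cost eight times as much as input tokens, so a long-context request is dominated by its input size unless the generated answer is very long.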

The MiniMax-01 models are open source on GitHub and will continue to be updated. On mainstream industry benchmarks for text and multimodal understanding, the series matches the internationally recognized frontier models GPT-4o-1120 and Claude-3.5-Sonnet-1022 on most tasks. On long-text tasks in particular, MiniMax-Text-01 shows the slowest performance degradation as input length grows, clearly outperforming Google's Gemini.

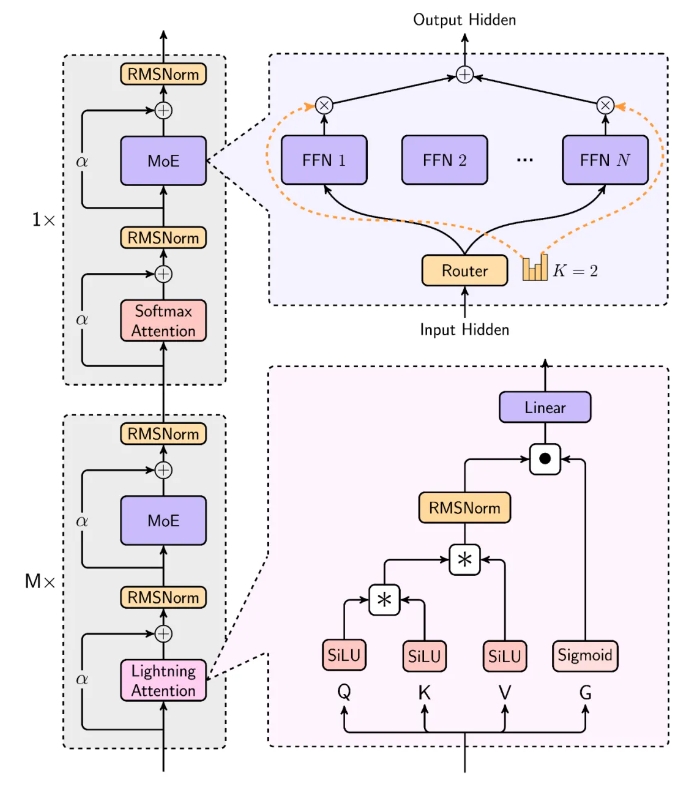

MiniMax's model is highly efficient on long inputs, with cost growing close to linearly in input length. In its structure, 7 out of every 8 layers use linear attention based on Lightning Attention, and the remaining layer uses conventional softmax attention. This is the first time in the industry that linear attention has been scaled up to a commercial-grade model. MiniMax jointly considered scaling laws, integration with Mixture-of-Experts (MoE), structural design, training optimization, and inference optimization, and rebuilt its training and inference systems accordingly. This work includes more efficient MoE all-to-all communication, optimizations for longer sequences, and an efficient kernel implementation of linear attention for inference.
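The sketch below illustrates the two ideas in this paragraph under simplifying assumptions. First, a hypothetical `layer_schedule` helper lays out the 7:1 hybrid, assuming the softmax layer sits at the end of each group of 8. Second, `causal_linear_attention` shows why linear attention scales linearly: it keeps a fixed-size state instead of comparing every token with every earlier token. This is generic unnormalized causal linear attention, not MiniMax's code; Lightning Attention itself is a tiled, hardware-aware kernel, and this only shows the underlying recurrence.

```python
from typing import List

Matrix = List[List[float]]


def layer_schedule(num_layers: int, period: int = 8) -> List[str]:
    """ASSUMED layout: every `period`-th layer uses softmax attention, the rest linear."""
    return ["softmax" if (i + 1) % period == 0 else "linear" for i in range(num_layers)]


def causal_linear_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Unnormalized causal linear attention computed as a recurrence.

    The state S (d_k x d_v) accumulates k_t v_t^T, so each step costs O(d_k * d_v)
    no matter how many tokens came before: total cost is linear in sequence length.
    """
    d_k, d_v = len(k[0]), len(v[0])
    state = [[0.0] * d_v for _ in range(d_k)]
    outputs = []
    for q_t, k_t, v_t in zip(q, k, v):
        # S <- S + k_t v_t^T
        for i in range(d_k):
            for j in range(d_v):
                state[i][j] += k_t[i] * v_t[j]
        # o_t = q_t^T S
        outputs.append([sum(q_t[i] * state[i][j] for i in range(d_k)) for j in range(d_v)])
    return outputs


def naive_causal_linear_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Same result via explicit pairwise scores (q_t . k_s) for s <= t: quadratic in length."""
    outputs = []
    for t, q_t in enumerate(q):
        out = [0.0] * len(v[0])
        for s in range(t + 1):
            score = sum(a * b for a, b in zip(q_t, k[s]))
            for j in range(len(out)):
                out[j] += score * v[s][j]
        outputs.append(out)
    return outputs


schedule = layer_schedule(16)
print(schedule.count("linear"), schedule.count("softmax"))  # prints 14 2

q = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]
k = [[1.0, 1.0], [0.0, 1.0], [1.0, 0.0]]
v = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
fast = causal_linear_attention(q, k, v)
slow = naive_causal_linear_attention(q, k, v)
print(fast)  # prints [[1.0, 2.0], [2.5, 4.0], [4.0, 6.0]]
```

Both functions return the same outputs because q_t^T (sum over s of k_s v_s^T) equals the sum over s of (q_t . k_s) v_s. The recurrent form never revisits earlier tokens, which is what makes very long contexts affordable; a model built this way would presumably keep the occasional softmax layer to retain exact token-to-token comparisons.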

On most academic benchmarks, the MiniMax-01 series achieves results comparable to top-tier international models, and it leads clearly on long-context evaluations, such as its strong performance on the 4-million-token Needle-In-A-Haystack retrieval task. Beyond academic datasets, MiniMax also built an assistant-scenario test set from real-world data, on which MiniMax-Text-01 performed outstandingly. On multimodal understanding benchmarks, MiniMax-VL-01 also comes out ahead.

Open source address: https://github.com/MiniMax-AI

The open-source release of the MiniMax-01 series brings new momentum to the AI field. Its advances in long-text processing and multimodal understanding should accelerate the development of agent technology and related applications. We look forward to further innovations and breakthroughs from MiniMax.