ByteDance has released Valley2, a multimodal large language model designed for e-commerce scenarios. The model combines the Qwen2.5 language model, the SigLIP-384 vision encoder, an Eagle module, and a convolutional adapter to improve performance in e-commerce and short-video applications. Its training data covers OneVision-style data, e-commerce and short-video data, and chain-of-thought data. After multi-stage training, it achieves strong results on multiple public benchmarks, especially in e-commerce evaluations. Its architecture design and training strategy offer new ideas for improving the performance of multimodal large models.

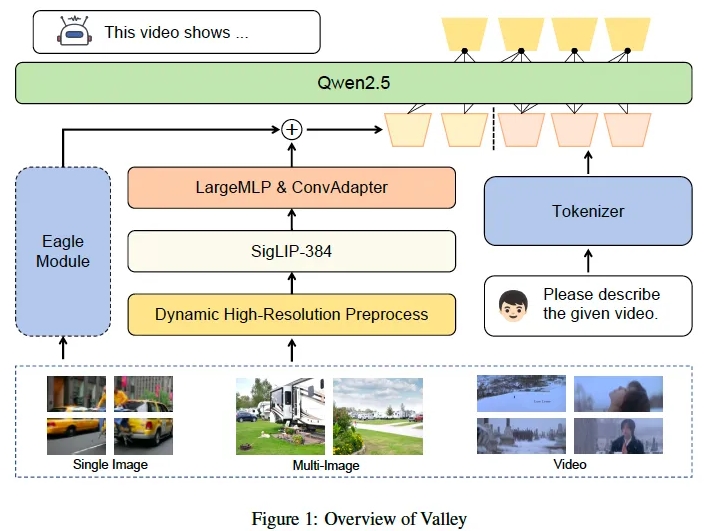

ByteDance recently launched a multimodal large language model called Valley2. Designed around e-commerce scenarios, the model aims to improve performance across domains and to extend the application boundaries of e-commerce and short-video scenarios through a scalable vision-language architecture. Valley2 uses Qwen2.5 as the LLM backbone, paired with the SigLIP-384 vision encoder, and combines MLP layers with convolutions for efficient feature conversion. Its innovations are a large visual vocabulary, a convolutional adapter (ConvAdapter), and the Eagle module, which together make the model more flexible with diverse real-world inputs and more efficient in training and inference.
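As a rough illustration of why an adapter between the vision encoder and the LLM affects efficiency, the sketch below counts visual tokens before and after a strided convolution. The patch size of 14 and the convolution settings (kernel 3, stride 2, padding 1) are assumptions chosen for illustration, not values taken from the Valley2 release, and the helper names are hypothetical.

```python
def vit_token_count(image_size: int, patch_size: int) -> int:
    """Number of patch tokens a ViT-style encoder emits for a square image."""
    side = image_size // patch_size  # patches per side (remainder pixels dropped)
    return side * side


def conv_output_side(side: int, kernel: int, stride: int, padding: int = 0) -> int:
    """Standard output-size formula for a 2D convolution along one axis."""
    return (side + 2 * padding - kernel) // stride + 1


# Assumed values: 384-pixel input, 14-pixel patches (hypothetical for this sketch).
side = 384 // 14
tokens_before = vit_token_count(384, 14)

# Hypothetical adapter convolution: kernel 3, stride 2, padding 1.
reduced_side = conv_output_side(side, kernel=3, stride=2, padding=1)
tokens_after = reduced_side * reduced_side

print(tokens_before)  # 729
print(tokens_after)   # 196
```

Under these assumptions, the LLM would see roughly a quarter as many visual tokens per image, which is the kind of saving a convolutional adapter can offer for training and inference cost.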

Valley2's training data consists of OneVision-style data, data from the e-commerce and short-video domains, and chain-of-thought (CoT) data for complex problem solving. Training is divided into four stages: text-vision alignment, high-quality knowledge learning, instruction fine-tuning, and chain-of-thought post-training. In experiments, Valley2 performed well on multiple public benchmarks, scoring highly on MMBench, MMStar, MathVista, and others, and it also surpassed other models of the same size on the Ecom-VQA benchmark.
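The four-stage process can be pictured as a simple sequential pipeline. The sketch below is only an illustration: it assumes each stage resumes from the checkpoint produced by the previous one, and `run_pipeline` and `train_stage` are hypothetical names, not part of the Valley codebase.

```python
from typing import Callable, List

# Stage names as listed in the article, in training order.
STAGES: List[str] = [
    "text-vision alignment",
    "high-quality knowledge learning",
    "instruction fine-tuning",
    "chain-of-thought post-training",
]


def run_pipeline(
    stages: List[str],
    train_stage: Callable[[str, str], str],
    checkpoint: str = "init",
) -> str:
    """Run stages in order; each stage starts from the previous checkpoint (assumed)."""
    for stage in stages:
        checkpoint = train_stage(stage, checkpoint)
    return checkpoint


def fake_train_stage(stage: str, checkpoint: str) -> str:
    """Stand-in for real training: just records which stage ran on which checkpoint."""
    return f"{checkpoint} -> {stage}"


final_checkpoint = run_pipeline(STAGES, fake_train_stage)
print(final_checkpoint)
```

In a real setup, `train_stage` would load the incoming checkpoint, train on that stage's data, and return the path of the new checkpoint.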

Looking ahead, ByteDance plans to release an all-in-one model covering text, image, video, and audio modalities, and to introduce a Valley-based multimodal embedding training method to support downstream retrieval and detection applications.

The launch of Valley2 marks notable progress in multimodal large language models, showing that model performance can be improved through architectural changes, dataset construction, and training-strategy optimization.

Model link:

https://www.modelscope.cn/models/bytedance-research/Valley-Eagle-7B

Code link:

https://github.com/bytedance/Valley

Paper link:

https://arxiv.org/abs/2501.05901

The release of Valley2 demonstrates ByteDance's capabilities in multimodal large models and suggests that e-commerce and short video will see more AI-based applications. We look forward to Valley2 improving further and expanding its application scenarios, bringing more convenient and smarter services to users.