As the volume of video data grows explosively, using video effectively for knowledge retrieval and question answering has become an active research topic. Traditional retrieval-augmented generation (RAG) systems rely mostly on text and struggle to exploit the rich multimodal information in videos. This article introduces VideoRAG, a framework that dynamically retrieves videos relevant to a query and integrates their visual and textual information to generate more accurate and informative answers. The framework uses Large Video Language Models (LVLMs) to integrate multimodal data and handles videos without subtitles through automatic speech recognition (ASR), improving both retrieval and generation.

With the rapid development of video technology, video has become an important medium for finding information and understanding complex concepts. Video combines visual, temporal, and contextual data, providing a multimodal representation that goes beyond static images and text. With the spread of video-sharing platforms and the growing number of educational and informational videos, using video as a knowledge source creates new opportunities to answer queries that require detailed context, spatial understanding, and process demonstration.

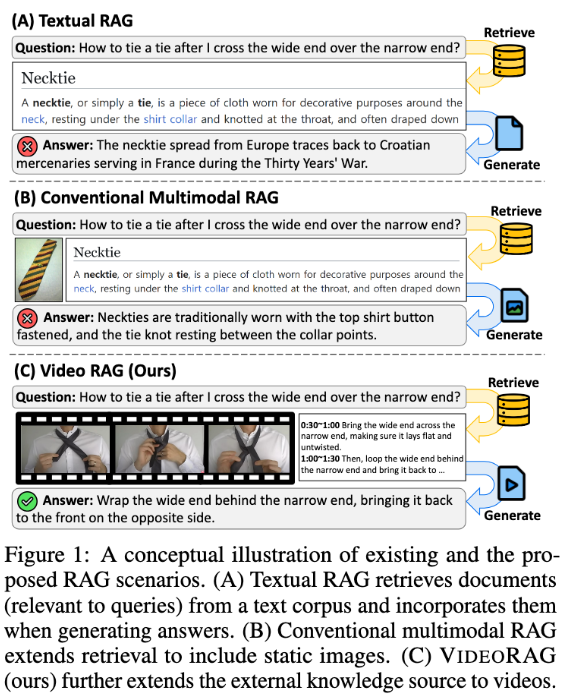

However, existing retrieval-augmented generation (RAG) systems tend to overlook the potential of video data. They typically rely on textual information, occasionally supplemented by static images, and fail to capture the visual dynamics and multimodal cues in video that are critical for complex tasks. Traditional approaches either assume the query-related videos are given in advance, with no retrieval step, or convert videos into text, which discards important visual context and temporal dynamics and limits their ability to provide accurate and informative answers.

To address these problems, a research team from the Korea Advanced Institute of Science and Technology (KAIST) and DeepAuto.ai proposed a new framework called VideoRAG. The framework dynamically retrieves videos relevant to a query and integrates both visual and textual information into the generation process. VideoRAG uses Large Video Language Models (LVLMs) to integrate multimodal data, so that retrieved videos are contextually consistent with the user's query while the temporal richness of the video content is preserved.

VideoRAG's workflow is divided into two main stages: retrieval and generation. In the retrieval stage, the framework identifies the videos whose visual and textual features are most similar to the query.
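The retrieval stage can be illustrated with a minimal sketch. It assumes each video already has a visual embedding and a text embedding in the same vector space as the query, fuses them with a hypothetical weight `alpha`, and ranks videos by cosine similarity. The function names, the fusion rule, and the toy vectors are illustrative assumptions, not the authors' implementation.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def retrieve(query_emb, corpus, alpha=0.5, top_k=2):
    """Rank videos by similarity between the query and fused video features.

    corpus maps video_id -> (visual_emb, text_emb).
    alpha is an assumed weight on the visual features (hypothetical parameter).
    """
    scored = []
    for video_id, (visual_emb, text_emb) in corpus.items():
        # Weighted sum of visual and textual features for this video.
        fused = [alpha * v + (1 - alpha) * t for v, t in zip(visual_emb, text_emb)]
        scored.append((cosine(query_emb, fused), video_id))
    scored.sort(reverse=True)  # highest similarity first
    return [video_id for _, video_id in scored[:top_k]]

# Toy 3-dimensional embeddings, made up for illustration only.
corpus = {
    "tie_a_tie": ([0.9, 0.1, 0.0], [0.8, 0.2, 0.0]),
    "bake_bread": ([0.0, 0.9, 0.1], [0.1, 0.8, 0.1]),
    "change_tire": ([0.1, 0.0, 0.9], [0.0, 0.1, 0.9]),
}
query = [1.0, 0.1, 0.0]
top = retrieve(query, corpus, top_k=2)
print(top)
```

In a real system the embeddings would come from a video-language encoder rather than hand-written vectors, and the weight between visual and textual features would be tuned on held-out data.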

In the generation stage, automatic speech recognition is used to produce auxiliary text for videos that have no subtitles, so that every retrieved video can contribute textual information to the response. The retrieved videos are then passed to the generation module, which combines multimodal inputs such as video frames, subtitles, and the query text and processes them with an LVLM to generate long, rich, accurate, and contextually appropriate responses.
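The way these inputs come together can be sketched as follows. This is an assumption-laden illustration: the `Video` class, the `fake_asr` stub, the uniform frame sampling, and the payload layout are all hypothetical, and a real pipeline would call an actual speech recognition model and an LVLM instead.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

@dataclass
class Video:
    video_id: str
    frames: List[str]                # placeholder frame identifiers
    subtitles: Optional[str] = None  # None when the video has no subtitles

def auxiliary_text(video: Video, transcribe: Callable[[Video], str]) -> str:
    """Use existing subtitles, otherwise fall back to an ASR transcript."""
    return video.subtitles if video.subtitles else transcribe(video)

def build_lvlm_input(query: str, videos: List[Video],
                     transcribe: Callable[[Video], str],
                     max_frames: int = 4) -> Dict:
    """Assemble sampled frames, text, and the query into one payload."""
    segments = []
    for video in videos:
        # Uniformly sample at most max_frames frames (an assumed strategy).
        step = max(1, len(video.frames) // max_frames)
        sampled = video.frames[::step][:max_frames]
        segments.append({
            "video_id": video.video_id,
            "frames": sampled,
            "text": auxiliary_text(video, transcribe),
        })
    return {"videos": segments, "query": query}

def fake_asr(video: Video) -> str:
    """Stand-in for a speech recognition model (hypothetical stub)."""
    return f"[ASR transcript of {video.video_id}]"

videos = [
    Video("a", [f"f{i}" for i in range(8)], "Step 1: cross the wide end."),
    Video("b", ["g0", "g1"]),  # no subtitles, so ASR is used
]
payload = build_lvlm_input("How do I tie a tie?", videos, fake_asr)
print(payload["videos"][1]["text"])
```

Keeping the subtitle-or-ASR choice in one small function means videos with and without subtitles follow the same path afterwards, which mirrors the role the article gives to auxiliary text.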

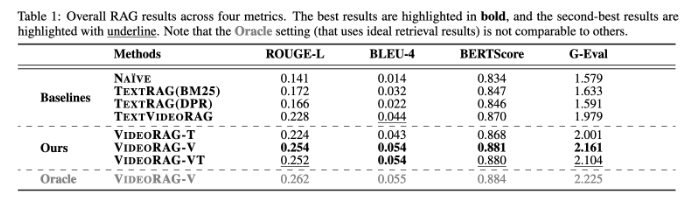

The researchers evaluated VideoRAG extensively on datasets such as WikiHowQA and HowTo100M, and the results show that its response quality is significantly better than that of traditional methods. The framework not only improves the capabilities of retrieval-augmented generation systems but also sets a new standard for future multimodal retrieval systems.

Paper: https://arxiv.org/abs/2501.05874

Highlights:

**New framework**: VideoRAG dynamically retrieves relevant videos and fuses visual and textual information to improve the quality of generated answers.

**Experimental verification**: Tested on multiple datasets, VideoRAG shows significantly better response quality than traditional RAG methods.

**Technical innovation**: By using Large Video Language Models (LVLMs), VideoRAG opens a new chapter in multimodal data integration.

All in all, the VideoRAG framework offers a new solution for video-based retrieval-augmented generation tasks. Its advances in multimodal data integration and information retrieval provide a valuable reference for building smarter and more accurate information retrieval systems. The research results are expected to find wide application in education, healthcare, and other fields.