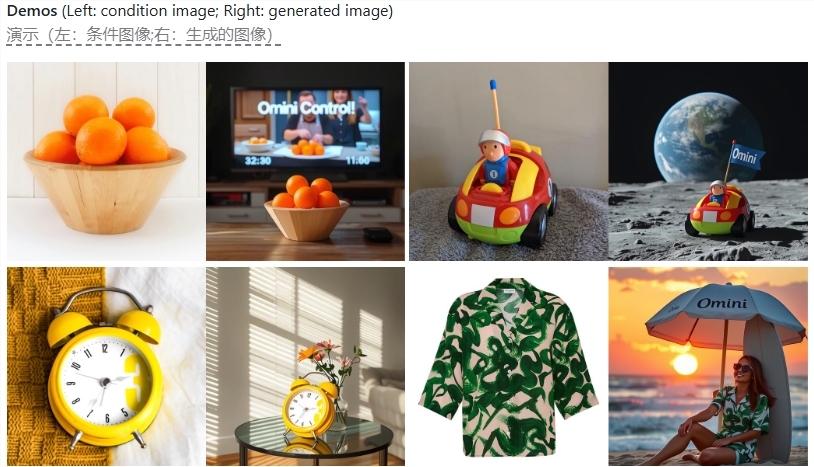

A research team from the National University of Singapore has developed OminiControl, an image generation framework that improves the flexibility and efficiency of controlled generation through a parameter reuse mechanism. OminiControl builds on a pre-trained Diffusion Transformer (DiT) and feeds it image conditions to achieve strong subject integration and spatial alignment while adding only a small number of parameters. It handles a variety of image conditioning tasks, including subject-driven generation and spatially aligned generation from conditions such as edges and depth maps, and it is especially strong on subject-driven generation.

Image generation technology is advancing quickly. The team from the National University of Singapore proposed OminiControl to make image generation more flexible and efficient. The framework offers finer control by incorporating image conditions and making full use of an already trained Diffusion Transformer (DiT) model.

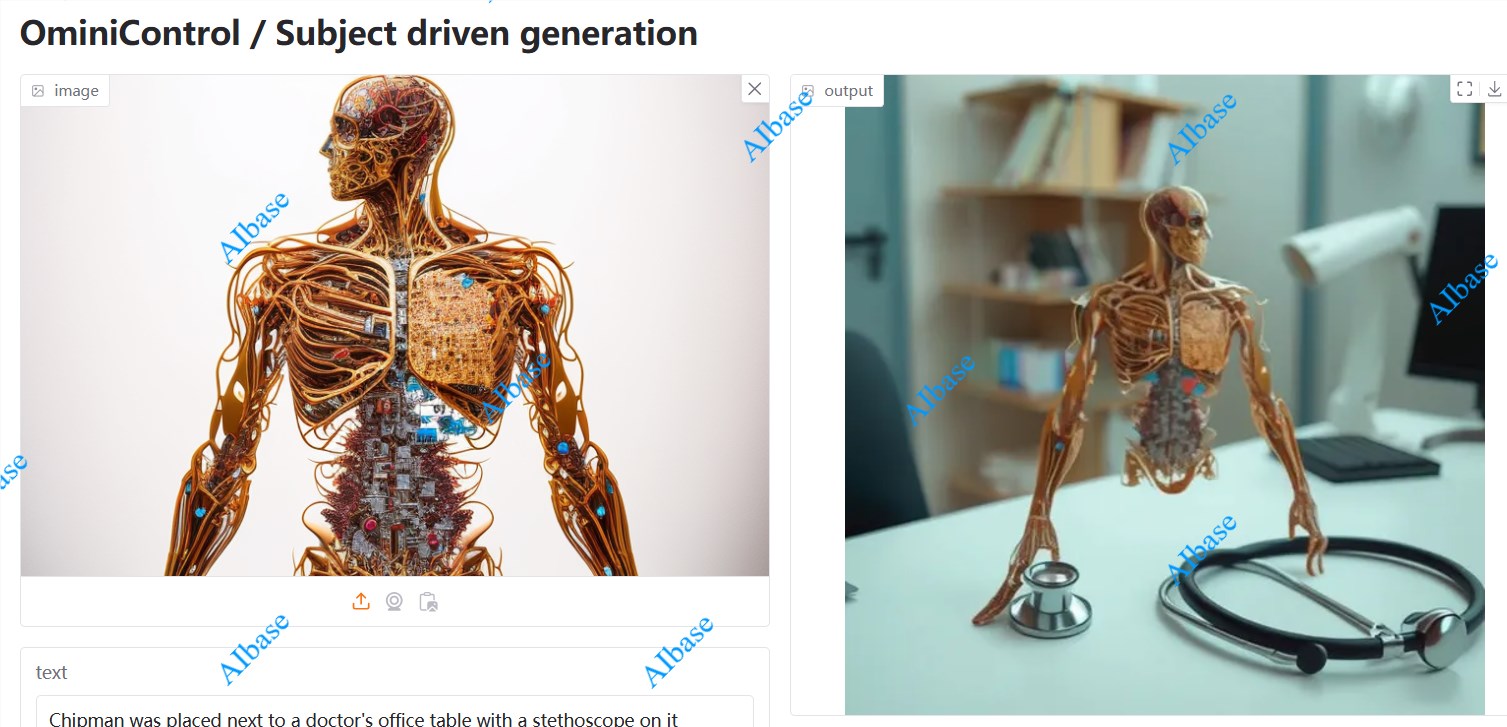

Simply put, you provide a reference image, and OminiControl integrates the subject of that image into the generated picture. For example, the editor uploaded the reference image on the left and entered the prompt "The chip man is placed next to the table in a doctor's office, with a stethoscope placed on the table." The generated result was only average.

The core of OminiControl is its "parameter reuse mechanism", which lets the DiT model process image conditions with very few additional parameters. Compared with existing methods, OminiControl needs only about 0.1% more parameters to add these capabilities. It also handles multiple image conditioning tasks in a unified way, covering both subject-driven generation and spatially aligned conditions such as edges and depth maps. This flexibility is particularly useful for subject-driven generation tasks.
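To give a feel for what an overhead of roughly 0.1% means, the arithmetic can be sketched as below. This is only an illustration: the article does not say how the extra parameters are implemented, so the sketch assumes low-rank adapter pairs attached to linear layers, and every number in it (hidden size, layer count, rank, base model size) is hypothetical rather than taken from OminiControl.

```python
def low_rank_param_count(d_in: int, d_out: int, rank: int) -> int:
    """Parameters in one low-rank pair: A (d_in x rank) plus B (rank x d_out)."""
    return rank * (d_in + d_out)


def overhead_percent(base_params: int, added_params: int) -> float:
    """Added parameters expressed as a percentage of the base model size."""
    return 100.0 * added_params / base_params


# Hypothetical configuration, chosen only for illustration.
HIDDEN = 3072                  # width of each adapted linear layer (assumed)
NUM_LAYERS = 48                # number of transformer blocks (assumed)
PROJECTIONS_PER_LAYER = 4      # adapted projections per block (assumed)
RANK = 4                       # adapter rank (assumed)
BASE_PARAMS = 10_000_000_000   # size of the frozen base model (assumed)

added = NUM_LAYERS * PROJECTIONS_PER_LAYER * low_rank_param_count(HIDDEN, HIDDEN, RANK)
overhead = overhead_percent(BASE_PARAMS, added)

print(f"added parameters: {added:,}")
print(f"overhead: {overhead:.4f}% of the base model")
```

With these assumed values, the adapters add a few million parameters on top of a model with billions, which is the order of magnitude the "about 0.1%" figure describes.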

The research team also emphasized that OminiControl acquires these capabilities by training on generated images, which is particularly important for subject-driven generation. In extensive evaluation, OminiControl significantly outperforms existing UNet-based models and DiT-adapted models on both subject-driven generation and spatially aligned conditional generation. The result opens up new possibilities for creative work.

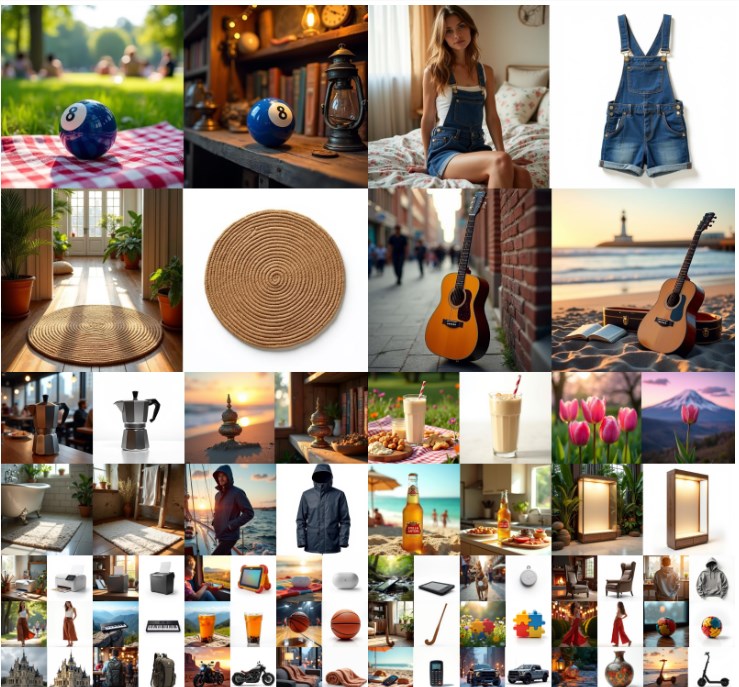

To support broader research, the team also released a training dataset called Subjects200K, which contains more than 200,000 identity-consistent images, along with an efficient data synthesis pipeline. The dataset gives researchers a valuable resource for further work on subject-consistent generation.

The launch of OminiControl not only improves the efficiency and quality of image generation but also opens up more possibilities for artistic creation. As the technology continues to advance, image generation will become more intelligent and personalized.

Online demo: https://huggingface.co/spaces/Yuanshi/OminiControl

GitHub: https://github.com/Yuanshi9815/OminiControl

Paper: https://arxiv.org/html/2411.15098v2

Highlights:

OminiControl uses a parameter reuse mechanism to make control over image generation more powerful and more efficient.

The framework handles multiple image conditioning tasks in a unified way, from subject-driven generation to spatial conditions such as edges and depth maps, so it can adapt to different creative needs.

The team released Subjects200K, a dataset of more than 200,000 images, to support further research.

OminiControl marks a notable step forward for image generation technology. Its efficient parameter reuse mechanism and its ability to handle multiple conditioning tasks give artists and researchers a capable tool and point to the potential of future image generation systems. Visit the links above to learn more and try OminiControl.