Audio-driven image animation has advanced significantly in recent years, but the complexity and inefficiency of existing models limit its applications. To address these problems, researchers developed JoyVASA, which uses an innovative two-stage design to improve the quality, efficiency, and scope of audio-driven image animation. JoyVASA can generate longer animated videos, animate both human portraits and animal faces, and support multiple languages.

JoyVASA aims to improve the results of audio-driven image animation. Thanks to continued advances in deep learning and diffusion models, audio-driven portrait animation has made significant progress in video quality and lip-sync accuracy. However, the growing complexity of existing models reduces the efficiency of training and inference, and it also limits video duration and inter-frame continuity.

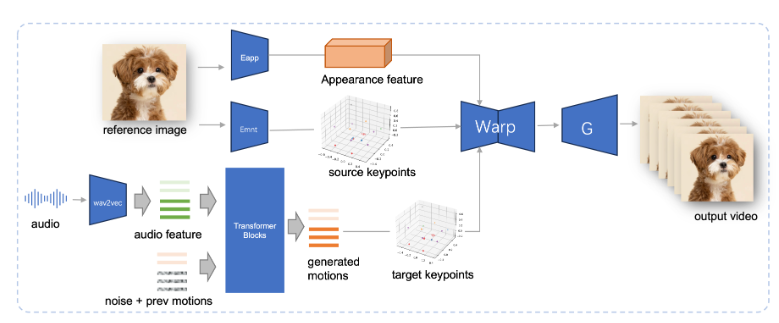

JoyVASA uses a two-stage design. In the first stage, a decoupled facial representation framework separates dynamic facial expressions from a static 3D facial representation.

This separation lets the system pair any static 3D facial representation with a dynamic motion sequence, which makes it possible to generate longer animated videos. In the second stage, the research team trained a diffusion transformer that generates motion sequences directly from audio cues, independently of the character's identity. Finally, the generator trained in the first stage takes the static 3D facial representation and the generated motion sequence as input and renders high-quality animation.
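To make the two-stage data flow more concrete, here is a minimal Python sketch. It is not JoyVASA's code: `Motion`, `toy_motion_model`, `generate_motion`, `toy_generator`, and `render_video` are hypothetical names, and the real diffusion transformer and neural generator are replaced by trivial stand-ins so that only the structure remains. It also assumes, as an illustration rather than a detail from the source, that audio is processed in fixed-size windows and that each window is conditioned on the motion produced for the previous one.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple


@dataclass(frozen=True)
class Motion:
    """Hypothetical per-frame dynamics: one expression value and one head-pose value."""
    expression: float
    pose: float


# Signature of a stage-2 motion model: (audio window, previous motion) -> new motion.
MotionModel = Callable[[Sequence[float], List[Motion]], List[Motion]]


def toy_motion_model(chunk: Sequence[float], context: List[Motion]) -> List[Motion]:
    """Stand-in for the audio-conditioned diffusion transformer (hypothetical).

    A real diffusion model would denoise a whole window of motion starting from
    noise; this toy maps each audio value to an expression and smooths head pose,
    starting from the last pose of the previous window so windows join smoothly.
    """
    prev_pose = context[-1].pose if context else 0.0
    out: List[Motion] = []
    for a in chunk:
        prev_pose = 0.9 * prev_pose + 0.1 * a  # exponential smoothing of pose
        out.append(Motion(expression=a, pose=prev_pose))
    return out


def generate_motion(audio: Sequence[float], window: int, model: MotionModel) -> List[Motion]:
    """Stage 2: audio -> motion sequence of any length; no identity is passed in."""
    if window <= 0:
        raise ValueError("window must be positive")
    motions: List[Motion] = []
    for start in range(0, len(audio), window):
        chunk = audio[start:start + window]
        context = motions[-window:]  # previous window's motion as the condition
        motions.extend(model(chunk, context))
    return motions


def toy_generator(static_face: str, m: Motion) -> Tuple[str, float, float]:
    """Stand-in for the stage-1 generator: one 'frame' from static face + motion."""
    return (static_face, m.expression, m.pose)


def render_video(static_face: str, motions: List[Motion],
                 generator: Callable[[str, Motion], Tuple[str, float, float]]):
    """Render one frame per motion step, reusing the same static face throughout."""
    return [generator(static_face, m) for m in motions]


# Usage with made-up per-frame audio features.
audio = [0.0, 1.0, 0.5, 0.2, 0.8]
motions = generate_motion(audio, window=2, model=toy_motion_model)
human = render_video("portrait.png", motions, toy_generator)
cat = render_video("cat.png", motions, toy_generator)  # same motion, new identity
print(len(human), len(cat))  # 5 5
```

Because the motion model never sees the source image, the same `motions` list can drive a human portrait or an animal face, and the video length is set by the audio rather than by the image. In this toy, carrying the last pose across window boundaries makes windowed generation produce exactly the same sequence as a single pass.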

Notably, JoyVASA is not limited to human portraits; it can also animate animal faces seamlessly. The model is trained on a mixed dataset that combines private Chinese data with public English data, which gives it good multilingual support. Experimental results demonstrate the effectiveness of the method. Future research will focus on improving real-time performance and refining expression control to broaden the framework's applications in image animation.

JoyVASA marks an important advance in audio-driven animation and opens up new possibilities for the field.

Project page: https://jdh-algo.github.io/JoyVASA/

Highlights:

JoyVASA generates longer animated videos by decoupling dynamic facial expressions from static 3D facial representations.

It generates motion sequences from audio cues and can animate both human characters and animal faces.

Trained on Chinese and English datasets, JoyVASA supports multiple languages, making it suitable for users around the world.

JoyVASA's innovation lies in its decoupled design and efficient use of audio cues, which point to a new direction for audio-driven image animation. Its multilingual support and efficient animation generation also give it broad application prospects. In the future, JoyVASA is expected to further improve real-time performance and achieve finer expression control.