Reducing the cost of large model training is a current research hotspot in artificial intelligence. A recent study from the Tencent Hunyuan team examines the scaling laws of low-bit floating-point quantization training, offering new ideas for training large models efficiently. Through extensive experiments, the study analyzes how model size, training data volume, and quantization precision affect training outcomes, and derives rules for allocating training resources at different precisions to obtain the best results. The work is of theoretical interest and also offers practical guidance for training and deploying large models.

With the rapid development of large language models (LLMs), the cost of training and inference has become a central concern for both research and applications. The Tencent Hunyuan team recently released an important study exploring the "scaling laws" of low-bit floating-point quantization training. Its core question is how to cut compute and storage costs significantly by lowering the numerical precision of the model without sacrificing performance.
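To make the storage side of this trade-off concrete, the arithmetic below counts only the bytes needed to hold model weights at different bit widths. The 7-billion-parameter model is a hypothetical example, and the calculation deliberately ignores optimizer states, gradients, activations, and per-block scaling factors, so it is a rough sketch rather than a full memory model.

```python
# Rough storage arithmetic for model weights only (assumption: ignores
# optimizer states, gradients, activations, and quantization scale factors).


def weight_storage_gb(num_params: int, bits_per_param: int) -> float:
    """Return the storage needed for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    n_params = 7_000_000_000  # hypothetical 7B-parameter model
    for bits in (32, 16, 8, 4):
        print(f"{bits:>2}-bit weights: {weight_storage_gb(n_params, bits):.1f} GB")
```

For this hypothetical model the loop prints 28.0, 14.0, 7.0 and 3.5 GB: each halving of the bit width halves weight storage, which is why the accuracy cost of going below 8 bits is the key question.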

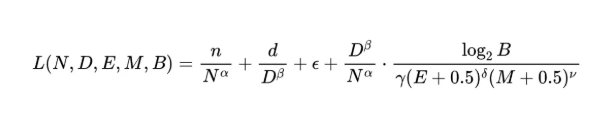

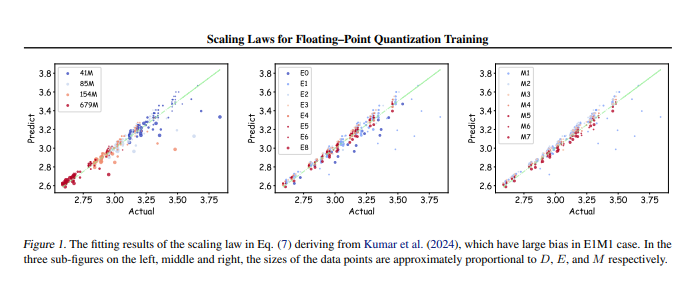

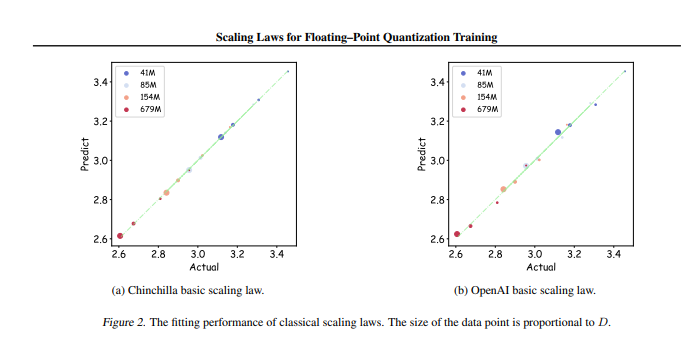

The research team ran as many as 366 floating-point quantization training experiments across different parameter sizes and precisions, systematically analyzing the factors that affect training outcomes: model size (N), training data volume (D), exponent bits (E), mantissa bits (M), and quantization granularity (B). From these experiments, the researchers derived a unified scaling law that shows how to allocate training data and model parameters at different precisions to achieve the best results.
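To illustrate what E, M, and B mean in practice, the sketch below simulates ("fake") floating-point quantization in plain Python. It assumes a simple IEEE-style format (exponent bias 2^(E-1)-1, subnormals, no codes reserved for inf/NaN) and absmax scaling per block of B values with a full-precision scale. The helper names `fp_max`, `quantize_fp`, and `quantize_blockwise` are hypothetical, and this is a generic technique, not the paper's exact recipe. Real formats can differ: OCP FP8 E4M3 reserves a code for NaN, so its maximum is 448 rather than the 480 computed here.

```python
import math


def fp_max(e_bits: int, m_bits: int) -> float:
    """Largest finite magnitude of a sign/E/M format, assuming an IEEE-style
    bias and no exponent codes reserved for inf/NaN (a simplification)."""
    bias = 2 ** (e_bits - 1) - 1
    max_exp = (2 ** e_bits - 1) - bias
    return (2 - 2.0 ** -m_bits) * 2.0 ** max_exp


def quantize_fp(x: float, e_bits: int, m_bits: int) -> float:
    """Round x to the nearest value representable with e_bits exponent bits and
    m_bits mantissa bits (subnormals included), saturating at +/- fp_max."""
    if x == 0.0:
        return 0.0
    bias = 2 ** (e_bits - 1) - 1
    min_exp = 1 - bias                  # exponent of the smallest normal value
    limit = fp_max(e_bits, m_bits)
    a = min(abs(x), limit)              # saturate instead of overflowing
    _, e = math.frexp(a)                # a = f * 2**e with 0.5 <= f < 1
    exp = max(e - 1, min_exp)           # leading-bit exponent, floored for subnormals
    step = 2.0 ** (exp - m_bits)        # spacing of representable values here
    q = round(a / step) * step          # round to nearest, ties to even
    return math.copysign(min(q, limit), x)


def quantize_blockwise(values, e_bits, m_bits, block_size):
    """Hypothetical fake-quantization helper: each block of block_size values
    gets its own full-precision scale so that the block's absmax maps to fp_max."""
    limit = fp_max(e_bits, m_bits)
    out = []
    for start in range(0, len(values), block_size):
        block = values[start:start + block_size]
        absmax = max(abs(v) for v in block)
        scale = absmax / limit if absmax > 0 else 1.0
        out.extend(quantize_fp(v / scale, e_bits, m_bits) * scale for v in block)
    return out


if __name__ == "__main__":
    vals = [0.1, -0.2, 3.0, 0.05]
    print(quantize_blockwise(vals, 2, 1, block_size=2))  # one scale per 2 values
    print(quantize_blockwise(vals, 2, 1, block_size=4))  # one scale for all 4
```

With the 4-bit E2M1 format, a block size of 2 keeps 0.1 and -0.2 intact because they share a scale without the outlier 3.0. With a block size of 4, the outlier sets one coarse scale, so 0.1 and 0.05 round to zero and -0.2 becomes -0.25. Finer granularity (smaller B) preserves more information but requires storing more scale factors.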

Most importantly, the study finds a "limit effect" in low-precision floating-point quantization training at any precision: model performance peaks at a particular amount of training data, and training on more data beyond that point can cause performance to decline. The study also finds that the theoretically most cost-effective precision for floating-point quantization training lies between 4 and 8 bits, which offers important guidance for developing LLMs efficiently.
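The "limit effect" can be illustrated with a toy loss curve. The functional form and constants below are hypothetical, chosen only so that one term decreases with data size D while a second term, standing in for precision-induced error, grows with D. This is not the paper's fitted law, whose form and constants should be taken from the paper itself. Minimizing L(D) = a/D^α + c·D^α gives D* = (a/c)^(1/(2α)), and in this toy a larger c (mimicking lower precision) moves the optimum to fewer tokens.

```python
import math


def toy_loss(d_tokens: float, a: float, c: float, alpha: float) -> float:
    """Hypothetical loss vs. data size D with model size and precision fixed.
    a / D**alpha falls as data grows; c * D**alpha is a stand-in for a
    precision-related penalty that grows with D. Constants are illustrative,
    and an irreducible-loss constant is omitted because it does not move the optimum."""
    return a / d_tokens ** alpha + c * d_tokens ** alpha


def optimal_tokens(a: float, c: float, alpha: float) -> float:
    """Solve dL/dD = 0: alpha*c*D**(alpha-1) = alpha*a*D**(-alpha-1),
    so D**(2*alpha) = a / c and D* = (a / c) ** (1 / (2 * alpha))."""
    return (a / c) ** (1 / (2 * alpha))


if __name__ == "__main__":
    a, alpha = 400.0, 0.3  # hypothetical constants
    for c in (1e-4, 1e-3, 1e-2):  # larger c mimics a lower-precision format
        d_star = optimal_tokens(a, c, alpha)
        print(f"c={c:g}: D* ~ {d_star:.3g} tokens, "
              f"loss at D* = {toy_loss(d_star, a, c, alpha):.3f}")
```

Past D*, the growing penalty term outweighs the shrinking data term, so adding tokens raises the toy loss. That is the qualitative shape the "limit effect" describes.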

This research fills a gap in the study of floating-point quantization training and gives hardware manufacturers a reference for optimizing floating-point compute capabilities at different precisions. More broadly, it offers a clear direction for large model training in practice, helping teams achieve efficient training even with limited resources.

Paper: https://arxiv.org/pdf/2501.02423

In short, this study by the Tencent Hunyuan team offers an effective way to reduce the cost of large model training. The scaling laws and optimal precision range it identifies are likely to have a profound impact on how large models are developed and deployed. The work points the way toward high-performance, low-cost large model training and deserves attention and further research.