Japan's Sakana AI recently released Transformer², a new technique that aims to improve how language models adapt to tasks. Unlike traditional AI systems, which are trained once to handle many tasks at the same time, Transformer² uses a two-stage process built on expert vectors and singular value fine-tuning (SVF), so that the model can adapt efficiently to new tasks without retraining the entire network. This significantly reduces resource consumption and improves the model's flexibility and accuracy. The technique centers on expert vectors, which control the importance of network connections so that the model can focus on a specific task such as mathematics, programming, or logical reasoning, and it combines them with reinforcement learning to further optimize performance.

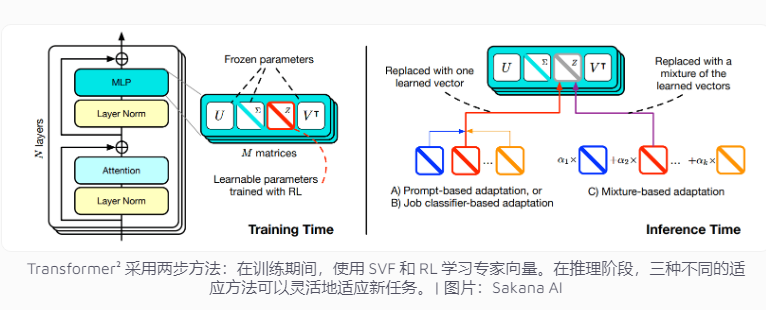

Current AI systems are usually trained once to handle multiple tasks, but they tend to run into unexpected difficulties when they face new tasks, which limits their adaptability. Transformer² is designed to address this problem. It uses expert vectors and singular value fine-tuning (SVF) so that the model can cope flexibly with new tasks without retraining the entire network.

Traditional training methods adjust the weights of the entire neural network. This is not only costly, it can also cause the model to "forget" knowledge it learned earlier. SVF avoids both problems by learning expert vectors that control the importance of each network connection. By adjusting the network's weight matrices, an expert vector helps the model focus on a specific task such as mathematics, programming, or logical reasoning.
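The idea of scaling connection importance can be illustrated with a small NumPy sketch. It assumes that SVF decomposes a weight matrix with a singular value decomposition and that the expert vector rescales each singular value; the function name `svf_adapt` and the matrix sizes are hypothetical and chosen only for illustration, so this should not be read as Sakana AI's implementation.

```python
import numpy as np

def svf_adapt(weight, expert_vector):
    """Rescale the singular values of `weight` by `expert_vector`.

    Assumption: an expert vector holds one scale factor per singular value.
    """
    # Thin SVD: weight == (u * s) @ vt
    u, s, vt = np.linalg.svd(weight, full_matrices=False)
    # Scale each singular value, then rebuild the adapted matrix.
    return (u * (s * expert_vector)) @ vt

rng = np.random.default_rng(0)
weight = rng.standard_normal((6, 4))      # hypothetical layer weights
expert = np.array([1.5, 1.0, 0.5, 0.0])   # hypothetical expert vector
adapted = svf_adapt(weight, expert)
print(adapted.shape, expert.size)         # adapted matrix shape, trainable values
```

In this sketch only the entries of `expert` would be trained, while the decomposition of the pretrained weights stays fixed.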

This method significantly reduces the number of parameters the model needs in order to adapt to a new task. For example, the LoRA method requires 6.82 million parameters, while SVF needs only 160,000. This not only lowers memory and compute requirements, it also prevents the model from forgetting other knowledge while it focuses on one task. Most importantly, the expert vectors can work together effectively, which strengthens the model's ability to adapt to diverse tasks.
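A rough way to see where the savings come from is to count trainable values for a single weight matrix. The sketch below assumes the standard LoRA formulation (two low-rank factors of rank `r`) and one SVF scale per singular value; the layer size and rank are hypothetical, and the code does not attempt to reproduce the 6.82 million and 160,000 figures quoted above.

```python
def lora_param_count(rows, cols, rank):
    # LoRA trains two low-rank factors: (rows x rank) and (rank x cols).
    return rank * (rows + cols)

def svf_param_count(rows, cols):
    # Assumption: SVF trains one scale per singular value of the matrix.
    return min(rows, cols)

rows, cols, rank = 4096, 4096, 16   # hypothetical layer size and LoRA rank
print(lora_param_count(rows, cols, rank), svf_param_count(rows, cols))
```

Under these assumptions the per-matrix gap grows with the LoRA rank, because the SVF count depends only on the matrix dimensions.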

To improve adaptability further, Transformer² uses reinforcement learning. During training, the model proposes solutions to a task and receives feedback, and this feedback is used to optimize the expert vectors continuously, which improves performance on new tasks. The team developed three strategies for applying these experts: prompt-based adaptation, a task classifier, and few-shot adaptation. The few-shot strategy in particular analyzes examples of the new task and adjusts the expert vectors accordingly, which further improves the model's flexibility and accuracy.
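The few-shot strategy can be pictured as a search for a good mixture of existing expert vectors. The sketch below is a toy illustration under stated assumptions: the adapted vector is a weighted sum of trained expert vectors, and a plain random search stands in for whatever optimizer the team actually uses. The names `combine_experts`, `few_shot_search`, and `score_fn` are hypothetical, and `score_fn` is a placeholder for evaluating the adapted model on the few-shot examples.

```python
import numpy as np

def combine_experts(expert_vectors, alphas):
    # Weighted sum of expert vectors: (k,) with (k, d) gives (d,)
    return np.tensordot(alphas, expert_vectors, axes=1)

def few_shot_search(expert_vectors, score_fn, n_trials=200, seed=0):
    """Random search over mixing weights; keeps the best-scoring mixture."""
    rng = np.random.default_rng(seed)
    k = expert_vectors.shape[0]
    best_alphas = np.full(k, 1.0 / k)          # start from a uniform mixture
    best_score = score_fn(combine_experts(expert_vectors, best_alphas))
    for _ in range(n_trials):
        alphas = rng.dirichlet(np.ones(k))     # non-negative, sums to 1
        score = score_fn(combine_experts(expert_vectors, alphas))
        if score > best_score:
            best_alphas, best_score = alphas, score
    return best_alphas, best_score

# Toy usage: two hypothetical experts; the "task" prefers the first one.
experts = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
target = experts[0]
alphas, score = few_shot_search(experts, lambda z: -np.sum((z - target) ** 2))
print(alphas, score)
```

In a real setting the score would come from the model's accuracy on the handful of examples supplied for the new task, not from a distance to a known target.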

In multiple benchmarks, Transformer² outperformed the conventional LoRA method. On mathematical tasks its performance improved by 16%, with far fewer trainable parameters. On a previously unseen task, Transformer² was 4% more accurate than the original model, while LoRA failed to deliver the expected improvement.

Transformer² can not only solve complex mathematical problems, it can also combine programming and logical reasoning capabilities to share knowledge across domains. For example, the team found that expert vectors can be transferred between models, allowing a smaller model to improve its performance with the knowledge of a larger one. This opens up new possibilities for knowledge sharing between models.

Although Transformer² makes significant progress in task adaptability, it still has limitations. At present, expert vectors trained with SVF can only draw on abilities that already exist in the pretrained model and cannot add new skills. True continual learning would mean that the model can learn new skills on its own, and that goal will still take time to reach. How to scale the technique to large models with more than 70 billion parameters also remains an open question.

All in all, Transformer² shows considerable potential for continual learning. Its efficient use of resources and its performance gains point to a new direction for future language models. However, the technique still needs further work to overcome its current limitations and achieve true continual learning.