Long video understanding has long been a major challenge in video analysis: traditional models process long videos inefficiently and struggle to extract their key information. This paper introduces HiCo, a hierarchical video token compression technique, and "VideoChat-Flash", a system built on it. Using multi-stage learning, VideoChat-Flash improves long video understanding while significantly reducing computational requirements, and the authors also propose an improved "needle in the haystack" task. The research team built a large dataset containing 300,000 hours of video and 200 million words of annotations for model training and evaluation.

Specifically, HiCo reduces computational complexity by splitting a long video into short clips and compressing the redundant information within them, then uses the semantic relevance of the content to the user's query to further reduce the number of tokens that must be processed.
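A minimal sketch of this two-level idea follows, assuming visual tokens are plain embedding vectors and using simple rules chosen for illustration: within each clip, a token is dropped when it is nearly identical (cosine similarity at or above a threshold) to the token at the same position in the preceding frame, and the surviving tokens are then ranked by similarity to a query embedding and only the top `keep` are retained. The function names, the threshold, and the top-k rule are hypothetical stand-ins, not HiCo's actual compression operators.

```python
import math
from typing import List, Sequence

Token = List[float]  # one visual token embedding (hypothetical representation)
Frame = List[Token]  # tokens of one frame, in a fixed spatial order


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def split_into_clips(frames: List[Frame], clip_len: int) -> List[List[Frame]]:
    """Cut a long video into consecutive short clips of at most clip_len frames."""
    return [frames[i:i + clip_len] for i in range(0, len(frames), clip_len)]


def compress_clip(clip: List[Frame], sim_threshold: float) -> List[Token]:
    """Clip level (hypothetical rule): keep every token of the first frame, then keep a
    later token only if it differs enough from the same position in the previous frame."""
    kept = list(clip[0])
    for prev, cur in zip(clip, clip[1:]):
        for p_tok, c_tok in zip(prev, cur):
            if cosine(p_tok, c_tok) < sim_threshold:
                kept.append(c_tok)
    return kept


def select_by_query(tokens: List[Token], query: Token, keep: int) -> List[Token]:
    """Query level (hypothetical rule): keep the `keep` tokens most similar to the
    query embedding, returned in their original temporal order."""
    ranked = sorted(range(len(tokens)), key=lambda i: cosine(tokens[i], query), reverse=True)
    return [tokens[i] for i in sorted(ranked[:keep])]


def hierarchical_compress(frames: List[Frame], query: Token, clip_len: int = 4,
                          sim_threshold: float = 0.95, keep: int = 8) -> List[Token]:
    """Compress each clip independently, then prune the pooled tokens against the query."""
    clip_tokens: List[Token] = []
    for clip in split_into_clips(frames, clip_len):
        clip_tokens.extend(compress_clip(clip, sim_threshold))
    return select_by_query(clip_tokens, query, keep)


if __name__ == "__main__":
    # A static 8-frame "video" with 2 tokens per frame: every frame is identical.
    video = [[[1.0, 0.0], [0.0, 1.0]] for _ in range(8)]
    out = hierarchical_compress(video, query=[1.0, 0.0], clip_len=4, keep=2)
    print(out)  # [[1.0, 0.0], [1.0, 0.0]]
```

In this toy run, each four-frame clip collapses to its first frame (16 tokens become 4), and the query step then keeps only the two tokens that match the query. Doing the cheap, query-independent redundancy removal first means the query-dependent ranking runs over far fewer tokens.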

For training, "VideoChat-Flash" follows a multi-stage scheme that moves from short videos to long ones. The researchers first performed supervised fine-tuning on short videos and their annotations, then gradually introduced long videos, and finally trained on a mixed-length corpus to achieve comprehensive understanding across video lengths. This scheme strengthens the model's visual perception, while the team's 300,000-hour video dataset provides rich data for long video processing.
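The short-to-long schedule can be sketched as a sequence of stages that progressively admit longer videos while keeping the short ones. The stage names and duration caps below are hypothetical values chosen for illustration; they are not the paper's settings, and a real pipeline would feed each stage's samples to a fine-tuning loop rather than just listing them.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Stage:
    name: str
    max_seconds: float  # hypothetical cap on video duration admitted in this stage


# Hypothetical three-stage schedule; names and caps are illustrative only.
SCHEDULE: List[Stage] = [
    Stage("stage 1: short-video SFT", 60.0),
    Stage("stage 2: introduce long videos", 600.0),
    Stage("stage 3: mixed-length corpus", math.inf),
]


def plan_curriculum(samples: List[Tuple[str, float]],
                    schedule: List[Stage] = SCHEDULE) -> Dict[str, List[str]]:
    """Map each stage to the sample ids it trains on. Short videos stay in every
    later stage, so the final stage sees the full mixed-length corpus."""
    return {
        stage.name: [sid for sid, seconds in samples if seconds <= stage.max_seconds]
        for stage in schedule
    }


if __name__ == "__main__":
    data = [("clip_a", 30.0), ("doc_b", 300.0), ("movie_c", 5400.0)]
    for stage, ids in plan_curriculum(data).items():
        print(stage, ids)
```

With the three sample videos, stage 1 trains only on `clip_a`, stage 2 adds `doc_b`, and stage 3 covers all three. Keeping short videos in later stages is one simple way to avoid the model losing short-video skills as long videos are introduced.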

In addition, the study proposes an improved "needle in the haystack" task with multi-hop video configurations. In this new benchmark, the model must not only find a single target image inserted into the video but also reason over multiple interrelated images, which tests and strengthens its understanding of context.
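A multi-hop sample of this kind might be constructed as sketched below, assuming frames are represented as strings and each inserted "needle" names the clue of the next one, with only the last needle carrying the answer. The needle format, the `PREFIX` marker, and both function names are hypothetical illustrations, not the benchmark's actual data format; `follow_chain` is a reference solver showing why a single retrieval is not enough.

```python
import random
from typing import Dict, List, Optional, Tuple

PREFIX = "NEEDLE["  # hypothetical marker for an inserted needle frame


def build_multihop_sample(haystack: List[str], hops: int, answer: str,
                          seed: int = 0) -> Tuple[List[str], str]:
    """Insert a chain of `hops` linked needle frames at random positions.

    Needle i points to the clue of needle i + 1; only the last needle holds the answer.
    Returns the new frame list and the question text.
    """
    rng = random.Random(seed)
    clues = [f"clue-{i}" for i in range(hops)]
    needles = []
    for i, clue in enumerate(clues):
        payload = f"next={clues[i + 1]}" if i + 1 < hops else f"answer={answer}"
        needles.append(f"{PREFIX}{clue}|{payload}]")
    total = len(haystack) + hops
    slots = rng.sample(range(total), hops)  # distinct slots; chain order need not follow time order
    video: List[Optional[str]] = [None] * total
    for slot, needle in zip(slots, needles):
        video[slot] = needle
    rest = iter(haystack)  # original frames fill the remaining slots, in order
    frames = [v if v is not None else next(rest) for v in video]
    question = f"Start at {clues[0]} and follow the chain of clues. What is the answer?"
    return frames, question


def follow_chain(frames: List[str], start: str) -> str:
    """Reference solver: index every needle, then hop from clue to clue."""
    links: Dict[str, str] = {}
    for frame in frames:
        if frame.startswith(PREFIX):
            clue, payload = frame[len(PREFIX):-1].split("|", 1)
            links[clue] = payload
    clue = start
    while True:
        key, value = links[clue].split("=", 1)
        if key == "answer":
            return value
        clue = value


if __name__ == "__main__":
    hay = [f"frame-{i}" for i in range(100)]
    frames, question = build_multihop_sample(hay, hops=3, answer="red car")
    print(question)
    print(follow_chain(frames, "clue-0"))  # red car
```

Because the needles land at random positions, the model cannot answer by attending to one frame: it has to locate the first clue, then each successor, which is the kind of contextual, multi-step reasoning the multi-hop setting is meant to probe.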

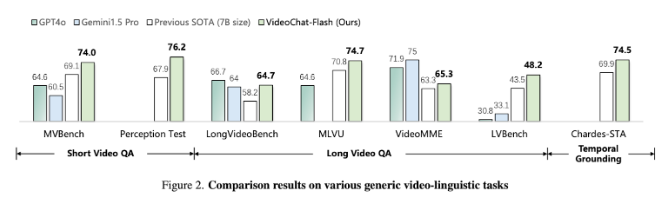

Experimental results show that the proposed method reduces computation by two orders of magnitude and performs strongly on both short and long video benchmarks, setting new leading results in short video understanding. The model also surpasses existing open-source models in long video understanding and shows strong temporal grounding capabilities.
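To see how a reduction in visual tokens can translate into a compute saving of this size, consider a simplified cost model. This is a hypothetical illustration, not the paper's own accounting: full self-attention over n tokens scales as n², so cutting tokens per frame by 10x cuts attention cost by 100x, while token-wise layers such as MLPs shrink only linearly. The frame and token counts below are made up.

```python
from typing import Tuple


def total_tokens(frames: int, tokens_per_frame: int) -> int:
    """Number of visual tokens fed to the language model."""
    return frames * tokens_per_frame


def attention_pairs(frames: int, tokens_per_frame: int) -> int:
    """Pairwise interactions in one full self-attention layer: grows as n**2."""
    n = total_tokens(frames, tokens_per_frame)
    return n * n


def savings(frames: int, before: int, after: int) -> Tuple[float, float]:
    """Return (linear-cost ratio, attention-cost ratio) for a per-frame token reduction."""
    linear = total_tokens(frames, before) / total_tokens(frames, after)
    quadratic = attention_pairs(frames, before) / attention_pairs(frames, after)
    return linear, quadratic


if __name__ == "__main__":
    # Hypothetical numbers: 1,000 frames, compressed from 100 to 10 tokens per frame.
    print(savings(1000, 100, 10))  # (10.0, 100.0)
```

Real end-to-end savings fall between the two ratios, depending on how much of the model's cost is attention versus token-wise computation.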

Paper: https://arxiv.org/abs/2501.00574

Highlights:

The researchers proposed HiCo, a hierarchical video token compression technique that significantly reduces the computational requirements of long video processing.

The "VideoChat-Flash" system uses a multi-stage learning method that trains on both short and long videos, improving the model's video understanding.

Experimental results show that this method sets new performance standards on multiple benchmarks, making it one of the most advanced models for long video processing.

All in all, this research offers a new solution for efficient long video understanding. HiCo and VideoChat-Flash deliver significant gains in both computational efficiency and model performance, laying a solid foundation for future long video analysis applications. The results carry both theoretical significance and practical value.