Game scene generation has long been a major challenge in game development. Developers keep looking for ways to move beyond existing scenes and build more diverse, original game worlds. The University of Hong Kong and Kuaishou Technology have recently proposed GameFactory, a framework that offers a new approach to this problem. It combines pre-trained video diffusion models with a three-stage training strategy to generate new and diverse game scenes, with the aim of making game video generation both more efficient and more creative.

Specifically, GameFactory targets scene generalization in game video generation. Because it builds on video diffusion models pre-trained on open-domain video data, it can generate game scenes that go beyond those found in game-specific training data.

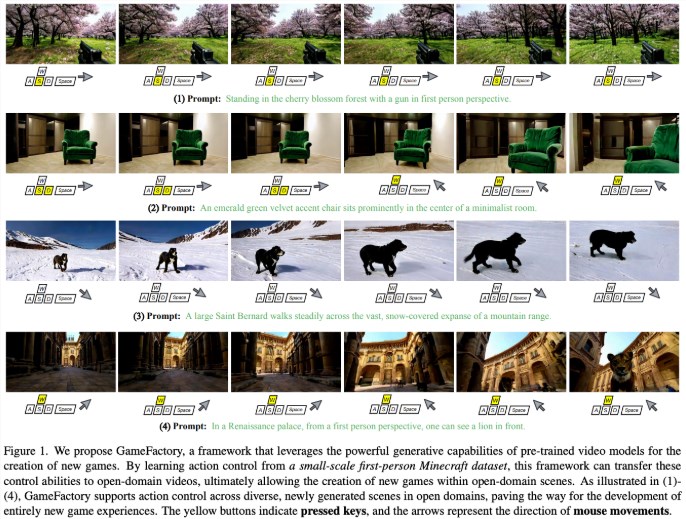

Video diffusion models have shown great potential for video generation and physical simulation in recent years. Acting as game engines, they can respond to user input such as keyboard and mouse actions and generate the corresponding game footage. However, scene generalization, the ability to create entirely new game scenes beyond existing ones, remains a significant challenge. Collecting large action-annotated video datasets is the most direct solution, but it is time-consuming and labor-intensive, and it is especially impractical for open-domain scenes.

GameFactory was designed to address this problem. By building on pre-trained video diffusion models, it avoids over-reliance on game-specific datasets and can generate diverse game scenes. To bridge the gap between open-domain prior knowledge and the limited game dataset, it also adopts a three-stage training strategy.

In the first stage, LoRA (low-rank adaptation) fine-tunes the pre-trained model to the specific game domain while leaving the original parameters unchanged. In the second stage, the pre-trained parameters are frozen and only the motion control module is trained, so that the module learns action control without entangling it with the game's visual style. In the third stage, the LoRA weights are removed and the motion control module is kept, allowing the system to generate action-controlled game videos in diverse open-domain scenes.
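The three stages mainly differ in which parameter groups are trained and which take part in the forward pass. The following Python sketch models that schedule with plain flags and shows, on a toy 2×2 weight matrix, how a LoRA update W + scale·(B·A) is applied and later dropped. The group names, the `scale` parameter, and two details the text does not spell out (that the motion control module is unused in Stage 1, and that the Stage 1 LoRA weights stay frozen but active in Stage 2) are illustrative assumptions, not details of the GameFactory code.

```python
from dataclasses import dataclass
from typing import Dict, List

Matrix = List[List[float]]


@dataclass
class ParamGroup:
    name: str
    trainable: bool = False  # receives gradient updates in this stage
    active: bool = True      # participates in the forward pass in this stage


def configure_stage(stage: int) -> Dict[str, ParamGroup]:
    """Trainable/active flags per hypothetical parameter group for one stage."""
    groups = {n: ParamGroup(n) for n in ("pretrained", "lora", "control")}
    if stage == 1:
        # Stage 1: adapt to the game domain through LoRA; base weights stay frozen.
        # Assumption: the motion control module is not used yet.
        groups["lora"].trainable = True
        groups["control"].active = False
    elif stage == 2:
        # Stage 2: train only the motion control module.
        # Assumption: the Stage 1 LoRA weights stay frozen but active.
        groups["control"].trainable = True
    elif stage == 3:
        # Stage 3 (inference): drop LoRA, keep the trained control module.
        groups["lora"].active = False
    else:
        raise ValueError(f"unknown stage: {stage}")
    return groups


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product a @ b."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def effective_weight(w: Matrix, lora_a: Matrix, lora_b: Matrix,
                     use_lora: bool, scale: float = 1.0) -> Matrix:
    """W + scale * (B @ A) while LoRA is active; otherwise an unchanged copy of W."""
    if not use_lora:
        return [row[:] for row in w]
    delta = matmul(lora_b, lora_a)
    return [[wij + scale * dij for wij, dij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]


# Usage: a rank-1 LoRA update on a 2x2 identity weight.
w = [[1.0, 0.0], [0.0, 1.0]]
lora_a = [[0.0, 2.0]]    # shape (r=1, in=2)
lora_b = [[1.0], [0.0]]  # shape (out=2, r=1)
for stage in (1, 2, 3):
    use_lora = configure_stage(stage)["lora"].active
    print(stage, effective_weight(w, lora_a, lora_b, use_lora))
```

Dropping LoRA in Stage 3 simply restores the original weights W, while the control module trained in Stage 2 stays in place; this is what lets control learned on game data be reused with the pre-trained model's open-domain scenes.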

The researchers also compared control mechanisms and found that cross-attention works better for discrete control signals such as keyboard input, while concatenation works better for continuous mouse-movement signals. GameFactory also supports autoregressive motion control, which lets it generate interactive gameplay videos of unlimited length. In addition, the team released GF-Minecraft, a high-quality action-annotated video dataset, for training and evaluating the framework.
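To make the two conditioning styles and the autoregressive loop concrete, here is a minimal pure-Python sketch under simplifying assumptions: a single video feature vector attends over embedded keyboard-action tokens through scaled dot-product cross-attention, a continuous mouse delta `(dx, dy)` is concatenated onto the feature, and a hypothetical `generate_chunk` callback stands in for the diffusion model during autoregressive rollout. None of these function names come from the GameFactory paper or code.

```python
import math
from typing import Callable, List

Vector = List[float]


def softmax(xs: Vector) -> Vector:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attend(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Single-head scaled dot-product cross-attention: one video feature (query)
    attends over embedded keyboard-action tokens (keys/values)."""
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * k for q, k in zip(query, key)) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    return [sum(wt * v[i] for wt, v in zip(weights, values)) for i in range(dim)]


def concat_mouse(feature: Vector, mouse_delta: Vector) -> Vector:
    """Append a continuous mouse signal, e.g. (dx, dy), to the feature channels."""
    return feature + mouse_delta


def rollout(
    first_chunk: List[float],
    actions: List[float],
    generate_chunk: Callable[[List[float], float], List[float]],
    context_len: int,
) -> List[float]:
    """Autoregressive generation: each new chunk is conditioned on the last
    `context_len` frames plus the next action, so the video keeps growing
    for as long as actions are supplied."""
    frames = list(first_chunk)
    for action in actions:
        context = frames[-context_len:]
        frames.extend(generate_chunk(context, action))
    return frames


# Usage with toy "frames" (plain numbers) and a dummy generator.
attended = cross_attend([1.0, 0.0], keys=[[1.0, 0.0], [0.0, 1.0]],
                        values=[[1.0, 0.0], [0.0, 1.0]])
with_mouse = concat_mouse(attended, [0.1, -0.2])
video = rollout([0.0], [1.0, 1.0, 2.0], lambda ctx, a: [ctx[-1] + a], context_len=1)
print(video)  # [0.0, 1.0, 2.0, 4.0]
```

Because each step only looks at the most recent `context_len` frames, the loop can keep extending the video for as many actions as the player supplies, which is the intuition behind generating gameplay videos of unlimited length.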

Paper: https://arxiv.org/abs/2501.08325

Highlights:

- GameFactory, jointly developed by the University of Hong Kong and Kuaishou Technology, addresses scene generalization in game video generation.
- The framework uses pre-trained video diffusion models to generate diverse game scenes, and a three-stage training strategy to separate game-domain adaptation from motion control.
- The researchers released GF-Minecraft, an action-annotated video dataset, to support training and evaluating GameFactory.

GameFactory opens up new possibilities for game development. Its scene generation capabilities and open-domain adaptability could help drive the game industry forward and give players richer, more varied game experiences. We look forward to further improvements that make it an even more powerful tool for game developers.