In the field of artificial intelligence, efficient model inference is crucial, and developers continue to explore ways to run large language models on a wide range of hardware. Recently, developer Andrei David pulled off an eye-catching feat: he ported a model based on Meta AI's Llama 2 to the Xbox 360, a game console that is nearly twenty years old. The project showcases his technical skill and points to new possibilities for AI in edge computing. Along the way he had to overcome the console's PowerPC architecture, its memory limits, and endianness conversion, and the result offers useful lessons for running language models in low-resource environments.

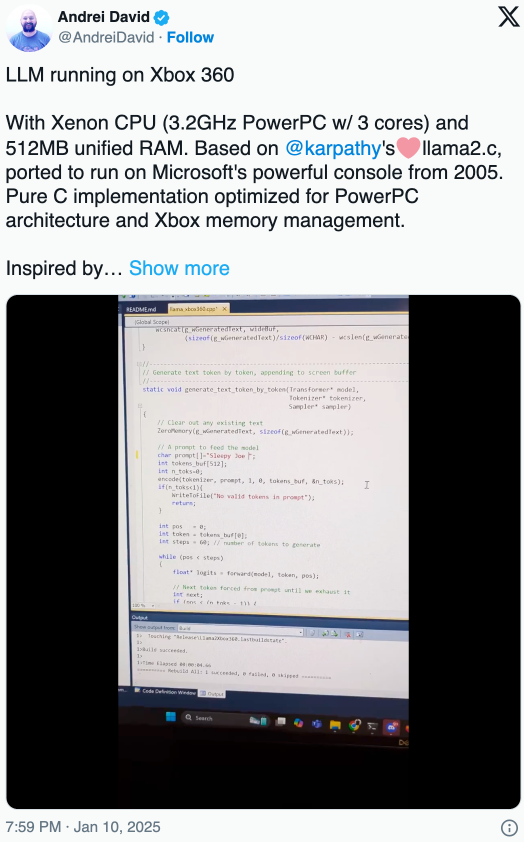

As AI technology advances rapidly, achieving efficient model inference across diverse hardware has become an important challenge for developers. David found inspiration in the nearly twenty-year-old Xbox 360 and ported llama2.c, a lightweight C implementation for running small models built on Meta AI's Llama 2 architecture, to the old console.

David shared the result on the social media platform X, describing the challenges he faced as considerable. The Xbox 360's PowerPC CPU is big-endian, which meant the model's configuration and weights had to undergo extensive endianness conversion when they were loaded. David also had to substantially rework and optimize the original code so that it would run smoothly on such aging hardware.
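The byte-order problem can be illustrated with a short C sketch (C being the language llama2.c is written in). It assumes the checkpoint stores 32-bit integers and IEEE 754 single-precision floats in little-endian order; the helpers `read_le32`, `read_le_i32`, `read_le_floats`, and `swap32_buffer` are hypothetical names, not code taken from llama2.c or from David's port.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Decode a little-endian 32-bit word from raw bytes. Shifting each byte into
   place yields the same value on big-endian PowerPC and little-endian x86,
   so no #ifdef on the host byte order is needed. */
static uint32_t read_le32(const unsigned char *p) {
    return (uint32_t)p[0] | ((uint32_t)p[1] << 8) |
           ((uint32_t)p[2] << 16) | ((uint32_t)p[3] << 24);
}

/* Decode one little-endian int32, e.g. a field of the model's config header. */
static int32_t read_le_i32(const unsigned char *p) {
    uint32_t u = read_le32(p);
    int32_t v;
    memcpy(&v, &u, sizeof v);  /* reinterpret the bits without aliasing UB */
    return v;
}

/* Decode n little-endian float32 weights from buf into out.
   Assumes the host float is IEEE 754 binary32 (true for PowerPC and x86). */
static void read_le_floats(const unsigned char *buf, float *out, size_t n) {
    for (size_t i = 0; i < n; i++) {
        uint32_t bits = read_le32(buf + 4 * i);
        memcpy(&out[i], &bits, sizeof bits);
    }
}

/* In-place alternative: reverse each 4-byte group of a buffer that was read
   verbatim from a little-endian file on a big-endian host. */
static void swap32_buffer(unsigned char *buf, size_t n_words) {
    for (size_t i = 0; i < n_words; i++) {
        unsigned char *w = buf + 4 * i;
        unsigned char t0 = w[0], t1 = w[1];
        w[0] = w[3];
        w[1] = w[2];
        w[2] = t1;
        w[3] = t0;
    }
}
```

Decoding through byte shifts, as `read_le32` does, is portable across both byte orders, while `swap32_buffer` avoids a second copy when the whole file has already been loaded into memory on the big-endian machine.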

Memory management was another major problem. The llama2 model file is about 60 MB, and the Xbox 360 uses a unified memory architecture in which the CPU and GPU share the same memory pool. This forced David to plan memory usage very carefully. He notes that despite its limitations, the Xbox 360's memory architecture was forward-looking for its time, anticipating the unified memory designs that are now standard in modern game consoles and APUs.
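For scale, assuming float32 weights at 4 bytes each, a 60 MB file holds roughly 15 million values, about 12% of the console's 512 MB shared pool before activations and the KV cache are counted. One common way to keep memory use predictable under such a budget is an arena (bump) allocator: reserve one block up front and carve every buffer out of it. The C sketch below illustrates that technique only; the source does not say David used it, and the `Arena` type and its functions are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical bump allocator: one malloc up front, then aligned sub-buffers
   (weights, activations, KV cache) are handed out sequentially. Nothing is
   freed individually; the whole arena is released at once. */
typedef struct {
    unsigned char *base;
    size_t capacity;
    size_t used;
} Arena;

/* Reserve capacity bytes. Returns 1 on success, 0 if malloc failed. */
static int arena_init(Arena *a, size_t capacity) {
    a->base = malloc(capacity);
    a->capacity = a->base ? capacity : 0;
    a->used = 0;
    return a->base != NULL;
}

/* Return size bytes aligned to align (a power of two), or NULL if the
   request would exceed the arena instead of growing past the budget. */
static void *arena_alloc(Arena *a, size_t size, size_t align) {
    uintptr_t cur = (uintptr_t)(a->base + a->used);
    uintptr_t aligned = (cur + (align - 1)) & ~(uintptr_t)(align - 1);
    size_t start = (size_t)(aligned - (uintptr_t)a->base);
    if (start > a->capacity || size > a->capacity - start) return NULL;
    a->used = start + size;
    return a->base + start;
}

/* Release every sub-buffer in one call. */
static void arena_free_all(Arena *a) {
    free(a->base);
    a->base = NULL;
    a->capacity = 0;
    a->used = 0;
}
```

Because the arena returns NULL rather than growing, an oversized request fails at load time instead of quietly pushing the program past the memory it shares with the GPU.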

After repeated rounds of coding and optimization, David got the model running on the Xbox 360 with a simple prompt: "Sleepy Joe said". Notably, llama2.c's inference code is only about 700 lines of C with no external dependencies, which lets small models deliver "surprisingly" strong performance when specialized for narrow domains.

David's success gives other developers a new direction. Some users suggested that the Xbox 360's 512 MB of memory might also accommodate other small LLMs, such as Hugging Face's SmolLM. David welcomed the idea, so more experiments running LLMs on the Xbox 360 may well follow.
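Whether a given model fits in 512 MB comes down to simple arithmetic: the weights need parameters × bytes per parameter, and the rest of the pool must also hold activations, the KV cache, code, and whatever the system reserves. The C sketch below folds everything except the weights into a single reserved figure; the function names and the 128 MiB reservation used in the example are hypothetical assumptions, not measured Xbox 360 values.

```c
#include <stdint.h>

/* Bytes left for weights after subtracting a reservation for activations,
   KV cache, code, and system use. Returns 0 if nothing is left. */
static uint64_t weight_budget(uint64_t total_bytes, uint64_t reserved_bytes) {
    return reserved_bytes >= total_bytes ? 0 : total_bytes - reserved_bytes;
}

/* Largest parameter count whose weights fit at bytes_per_param each. */
static uint64_t max_params(uint64_t total_bytes, uint64_t reserved_bytes,
                           unsigned bytes_per_param) {
    if (bytes_per_param == 0) return 0;  /* invalid input */
    return weight_budget(total_bytes, reserved_bytes) / bytes_per_param;
}

/* 1 if params weights fit in the budget, 0 otherwise. Comparing against
   max_params avoids overflow in params * bytes_per_param. */
static int weights_fit(uint64_t params, unsigned bytes_per_param,
                       uint64_t total_bytes, uint64_t reserved_bytes) {
    if (bytes_per_param == 0) return 0;
    return params <= max_params(total_bytes, reserved_bytes, bytes_per_param);
}
```

With a hypothetical 128 MiB reserved, 384 MiB (402,653,184 bytes) remains for weights: room for about 100 million float32 parameters, or about 200 million at 16 bits each. A hypothetical 135-million-parameter model would therefore not fit in float32 (540,000,000 bytes) but would fit at 16 bits per weight (270,000,000 bytes).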

David's project offers developers new ideas and shows that, with careful optimization and code adjustments, language models can run even on resource-constrained devices. It also encourages further work on AI for edge computing and opens the door to more innovative applications. We look forward to more breakthroughs like this one bringing AI into a wider range of scenarios.