Codestral 25.01, the latest open source coding model from Mistral, delivers significant performance improvements and runs about twice as fast as the previous generation. As an upgrade to the original Codestral, it keeps the focus on low-latency, high-frequency use and is optimized for enterprise applications, supporting tasks such as code correction, test generation, and fill-in-the-middle (FIM) completion. Its results on Python coding benchmarks, in particular a HumanEval score of 86.6%, put it ahead of many comparable coding models.

Like the original Codestral, Codestral 25.01 targets low-latency, high-frequency operations. Mistral said this version is particularly suitable for enterprises that require data and model residency. In benchmark tests, Codestral 25.01 scored 86.6% on HumanEval for Python, well above the previous version of Codestral, Code Llama 70B Instruct, and DeepSeek Coder 33B Instruct.
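For context on what a HumanEval percentage measures: results on this benchmark are commonly reported as pass@k, the probability that at least one of k generated solutions passes a problem's unit tests. The article does not say which k the 86.6% figure uses, so the sketch below is only an illustration of the widely used unbiased pass@k estimator, not a description of how Mistral ran its evaluation. The function name and the sample numbers are illustrative.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate pass@k for one problem.

    n: total samples generated for the problem
    c: number of those samples that passed the unit tests
    k: number of attempts the metric allows
    """
    if n - c < k:
        # Fewer than k failing samples: any draw of k must include a pass.
        return 1.0
    # 1 minus the probability that all k drawn samples are failures.
    return 1.0 - comb(n - c, k) / comb(n, k)


if __name__ == "__main__":
    # Illustrative numbers only: 10 samples, 3 of which passed.
    print(pass_at_k(n=10, c=3, k=1))
    print(pass_at_k(n=10, c=3, k=5))
```

A benchmark-level score is then the mean of this value over all problems; with k = 1 it reduces to the fraction of samples that pass.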

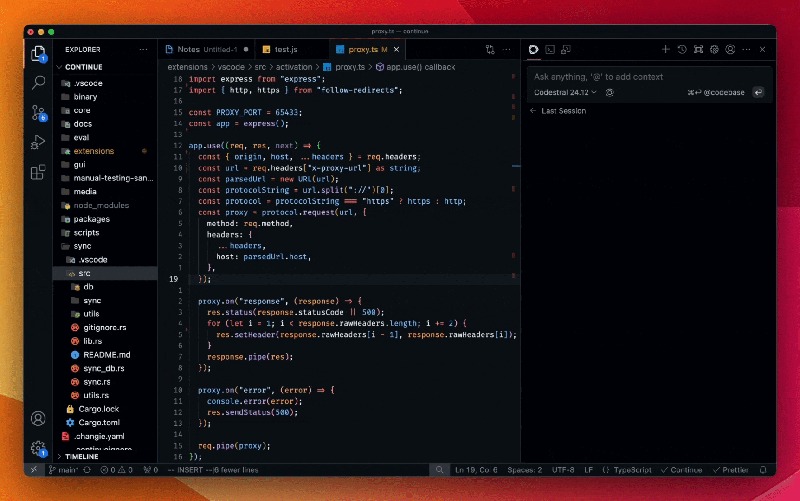

Developers can access the model through Mistral's IDE plugin partners and can deploy it locally through Continue. Mistral also provides API access through Google Vertex AI and its own la Plateforme. The model is currently available in preview on Azure AI Foundry and is expected to arrive on Amazon Bedrock soon.
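To make the intermediate-filling (fill-in-the-middle) task concrete, here is a minimal sketch of what such a request could look like: the code before the gap is sent as a prompt, the code after it as a suffix, and the model is asked to produce the middle. The endpoint path, the `codestral-latest` alias, and the helper names are assumptions for illustration and should be checked against Mistral's current API documentation. The sketch only builds the request body and sends nothing.

```python
import json

# Assumptions: endpoint path and model alias are illustrative, not taken from the article.
FIM_ENDPOINT = "https://api.mistral.ai/v1/fim/completions"
DEFAULT_MODEL = "codestral-latest"


def build_fim_payload(prefix: str, suffix: str,
                      model: str = DEFAULT_MODEL, max_tokens: int = 64) -> dict:
    """Build a JSON-serializable body: text before the gap is the prompt,
    text after the gap is the suffix."""
    return {
        "model": model,
        "prompt": prefix,
        "suffix": suffix,
        "max_tokens": max_tokens,
    }


def splice(prefix: str, completion: str, suffix: str) -> str:
    """Place a returned completion between the prefix and the suffix."""
    return prefix + completion + suffix


if __name__ == "__main__":
    prefix = "def add(a, b):\n    "
    suffix = "\n\nprint(add(2, 3))\n"
    print(json.dumps(build_fim_payload(prefix, suffix), indent=2))
    # Hand-written stand-in for a model response; no request is made here.
    print(splice(prefix, "return a + b", suffix))
```

In practice the payload would be posted with an API key in the request headers, and the returned text spliced back into the editor buffer at the cursor position.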

Since its release last year, Mistral's Codestral has become a leader among code-focused open source models. The first version was a 22B-parameter model that supported up to 80 programming languages and outperformed many comparable products on coding tasks. Mistral followed it with Codestral-Mamba, a code generation model based on the Mamba architecture that can handle longer code sequences and larger inputs.

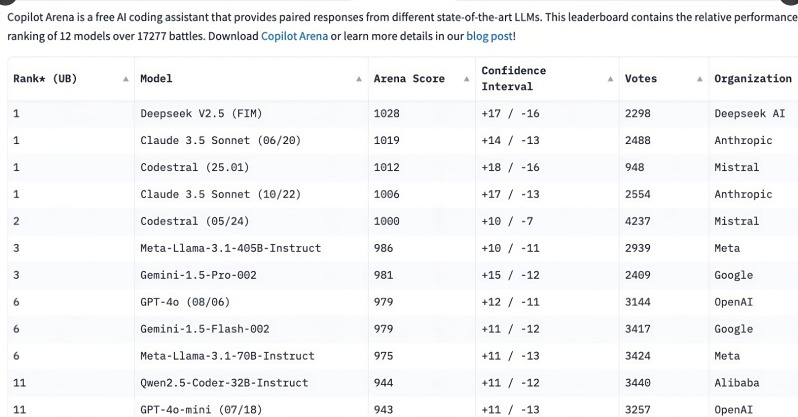

The launch of Codestral 25.01 attracted widespread attention from developers, and the model reached the top of the Copilot Arena rankings within hours of its release. This suggests that specialized coding models are quickly becoming developers' first choice for coding tasks, and that demand for focused coding models is growing relative to general-purpose models.

Although general-purpose models such as OpenAI's o3 and Anthropic's Claude can also write code, models optimized specifically for coding tend to perform better at it. Over the past year, several companies have released dedicated coding models, including Alibaba's Qwen2.5-Coder and China's DeepSeek Coder, the latter reported as the first such model to surpass GPT-4 Turbo. Microsoft has also launched GRIN-MoE, which is based on a mixture-of-experts (MoE) architecture and can solve mathematical problems as well as write code.

Developers are still debating whether to choose general-purpose or specialized models, but the rapid rise of coding models points to strong demand for efficient, accurate coding tools. Because it is trained specifically for coding tasks, Codestral 25.01 is well positioned in that market.

The release of Codestral 25.01 marks another step forward for specialized coding models. Its performance and range of access options should give developers a more efficient coding experience and further the use of AI in software development. More specialized models of this kind are likely to follow, giving developers more choices.