ViTPose is an open-source human pose estimation model built on the Vision Transformer (ViT), known for its simple structure and strong performance. Instead of a convolutional backbone, it uses stacked Transformer layers to extract image features, and its model size and input resolution can be adjusted to trade accuracy against speed. It achieves strong results on the MS COCO dataset, surpassing many more complex models, and it supports knowledge transfer so that small models can inherit part of the capability of large ones. Its code and pretrained models are openly available, which makes research and development easier.

At its core, ViTPose uses a plain Vision Transformer as its backbone, the part of the network that extracts key features from the image. Unlike many other pose estimation models, it does not rely on a convolutional neural network (CNN) for feature extraction. The structure is simple: the image is split into patches, and the resulting tokens pass through a stack of Transformer layers.

ViTPose models can be scaled as needed. You control the model size by increasing or decreasing the number of Transformer layers, which lets you trade accuracy against speed. You can also change the resolution of the input image, and the model adapts. In addition, it can be trained on several pose datasets at the same time, so one model can learn from data collected for different pose tasks.
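To make the effect of input resolution concrete, the sketch below computes how many patch tokens the backbone would process and how large the output heatmaps would be. It assumes a patch size of 16 and a decoder that upsamples the feature map by 4x, which are common settings for this kind of model; the function name `vitpose_shapes` and its defaults are hypothetical and are not taken from the ViTPose codebase.

```python
# Illustrative helper only: the name and defaults are assumptions,
# not part of the ViTPose code.

def vitpose_shapes(height=256, width=192, patch_size=16, upsample=4):
    """Return (tokens, feature_hw, heatmap_hw) for a given input resolution."""
    if height % patch_size or width % patch_size:
        raise ValueError("input size must be divisible by the patch size")
    feat_h, feat_w = height // patch_size, width // patch_size
    tokens = feat_h * feat_w  # one token per non-overlapping patch
    heatmap_hw = (feat_h * upsample, feat_w * upsample)
    return tokens, (feat_h, feat_w), heatmap_hw


for h, w in [(256, 192), (384, 288)]:
    tokens, feat_hw, heat_hw = vitpose_shapes(h, w)
    print(f"{h}x{w}: {tokens} tokens, features {feat_hw}, heatmaps {heat_hw}")
```

Under these assumptions, a larger input yields more tokens for the Transformer layers to process, which is why higher resolution tends to cost speed in exchange for finer localization.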

Despite its simple structure, ViTPose performs very well in human pose estimation. On the widely used MS COCO dataset it surpasses many more complex models, which shows that a simple architecture can be highly effective. ViTPose can also transfer knowledge from large models to small ones, much as an experienced teacher passes knowledge to a student, so that small models gain part of the strength of large models.
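The teacher-student idea can be illustrated with a generic distillation loss. The sketch below is not necessarily the exact mechanism ViTPose uses; it only assumes that both models output heatmaps and that the student is trained to match the ground truth and the teacher's output at the same time. The function names and the weighting `alpha` are hypothetical.

```python
def mse(a, b):
    """Mean squared error between two equally shaped 2-D heatmaps."""
    total, count = 0.0, 0
    for row_a, row_b in zip(a, b):
        for x, y in zip(row_a, row_b):
            total += (x - y) ** 2
            count += 1
    return total / count


def distillation_loss(student, teacher, target, alpha=0.5):
    """Blend the ground-truth loss with a teacher-matching term.

    alpha is a hypothetical weighting, chosen here only for illustration.
    """
    return (1 - alpha) * mse(student, target) + alpha * mse(student, teacher)


# Toy 2x2 heatmaps for a single keypoint.
target = [[0.0, 1.0], [0.0, 0.0]]
teacher = [[0.1, 0.8], [0.1, 0.0]]
student = [[0.3, 0.5], [0.2, 0.0]]
print(distillation_loss(student, teacher, target))
```

The teacher's softer heatmap gives the student an extra training signal beyond the hard ground-truth label, which is the intuition behind transferring knowledge from a large model to a small one.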

ViTPose’s code and models are open source, so anyone can use them freely for research and development.

In short, ViTPose is a simple but powerful tool that helps computers understand human poses. Its advantages are a simple structure, flexibility in model size and input resolution, efficiency, and easy knowledge transfer between models. This makes it a very promising baseline model in the field of human pose estimation.

The model uses stacked Transformer layers to process image data and a lightweight decoder to predict keypoints. The decoder can upsample the feature maps either with simple deconvolution layers or with bilinear interpolation. Beyond standard datasets, ViTPose also handles occlusion and varied poses well. It can be applied to tasks such as human pose estimation, animal pose estimation, and facial keypoint detection.
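Decoders of this kind typically output one heatmap per keypoint, and the final coordinates are read off from the heatmap peaks. The sketch below shows that last step in plain Python, assuming a simple argmax decoding without sub-pixel refinement; `decode_keypoints` is a hypothetical helper, not a function from the ViTPose code.

```python
def decode_keypoints(heatmaps, image_hw):
    """Map each heatmap's peak back to (x, y, score) in image pixels.

    heatmaps: list of 2-D lists, one per keypoint.
    image_hw: (height, width) of the cropped person image.
    """
    img_h, img_w = image_hw
    keypoints = []
    for hm in heatmaps:
        hm_h, hm_w = len(hm), len(hm[0])
        # Find the highest-scoring cell (ties resolve to the largest row/col).
        score, row, col = max(
            (value, r, c)
            for r, line in enumerate(hm)
            for c, value in enumerate(line)
        )
        # Scale the cell centre back to input-image coordinates.
        x = (col + 0.5) * img_w / hm_w
        y = (row + 0.5) * img_h / hm_h
        keypoints.append((x, y, score))
    return keypoints


# Toy 4x3 heatmap for one keypoint on a 16x12 crop.
heatmap = [
    [0.0, 0.1, 0.0],
    [0.0, 0.2, 0.9],
    [0.0, 0.0, 0.1],
    [0.0, 0.0, 0.0],
]
print(decode_keypoints([heatmap], image_hw=(16, 12)))
```

The peak value doubles as a confidence score, which is useful for filtering out keypoints that are occluded or outside the crop.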

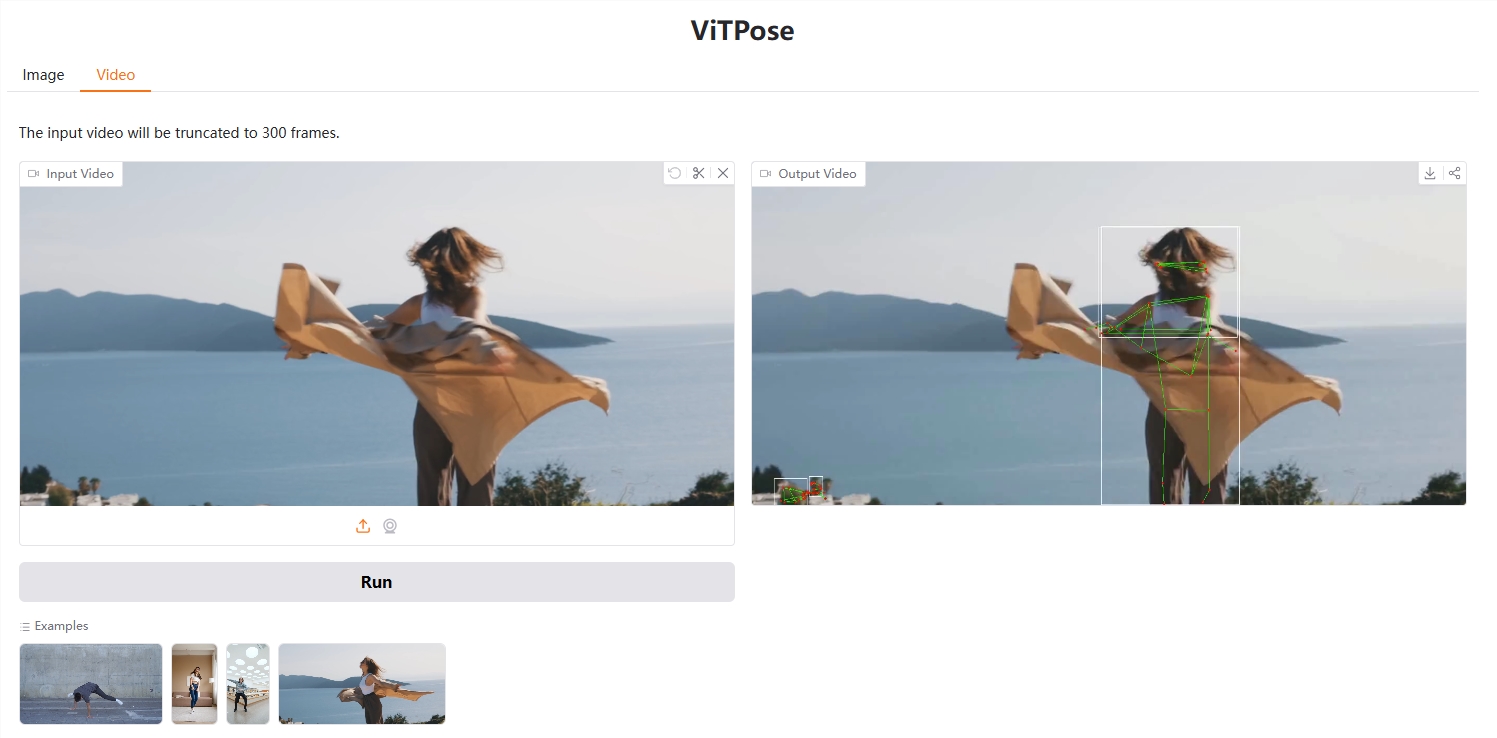

Demo: https://huggingface.co/spaces/hysts/ViTPose-transformers

Model: https://huggingface.co/collections/usyd-community/vitpose-677fcfd0a0b2b5c8f79c4335

All in all, ViTPose provides a strong baseline for human pose estimation thanks to its efficient structure and excellent performance. Because it is open source, more researchers and developers can take part and help advance the field. Simplicity, efficiency, and ease of use are its core advantages.