DeepSeek's recently released DeepSeek-V3 and DeepSeek-R1 models have drawn a huge response across the field of artificial intelligence. Their low cost, high performance, and strong reasoning capabilities put them on par with, and in some evaluations ahead of, the top closed-source models. In particular, DeepSeek-R1 ships with open-source model weights and a full disclosure of its training techniques, which has attracted widespread industry attention and put considerable pressure on companies such as Meta. Meta engineers have even said publicly that their team is in a panic and is trying to replicate DeepSeek's technology.

The series of models recently launched by DeepSeek has shaken the global AI community. DeepSeek-V3 achieves high performance at low cost and is comparable to the top closed-source models in many evaluations. DeepSeek-R1 uses innovative training methods to achieve strong reasoning capabilities; its performance is benchmarked against the official release of OpenAI o1, and its model weights are open source. Together, the two models have brought new breakthroughs and new thinking to the field of AI.

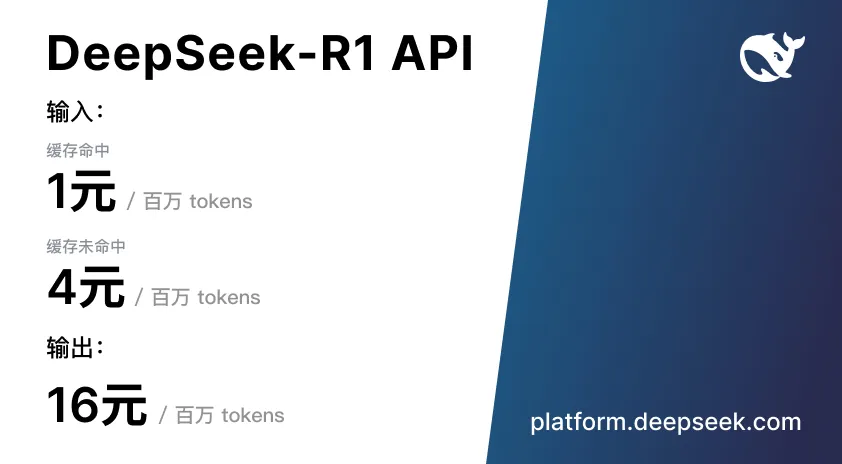

DeepSeek has also disclosed its training techniques in full. R1 is benchmarked against OpenAI's o1 model and makes extensive use of reinforcement learning in the post-training stage. According to DeepSeek, R1 is comparable to o1 on mathematics, code, and natural language reasoning tasks, while its API price is less than 4% of o1's.

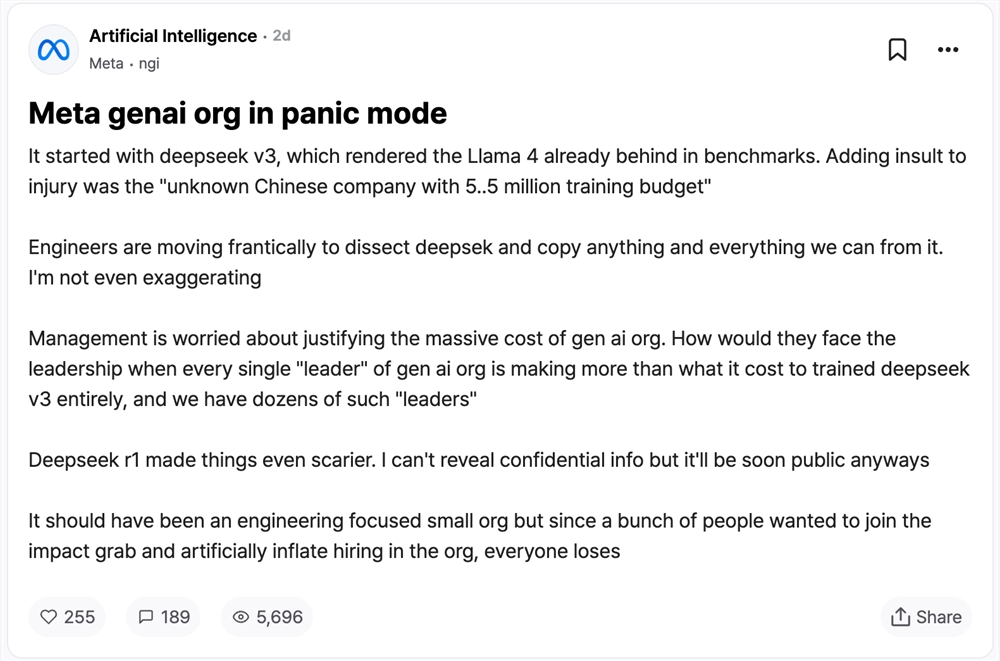

Recently, an anonymous post from a Meta employee on teamblind, an overseas anonymous workplace community, became particularly popular. According to the post, the launch of DeepSeek V3 left Llama 4 behind in benchmarks, and Meta's Generative AI team is in a panic: an "unknown Chinese company" completed training on a budget of $5.5 million and embarrassed the existing large models.

Meta engineers are frantically dissecting DeepSeek and trying to copy it, while management worries about how to justify the department's high costs to senior leadership. The team has dozens of "leaders", each of whom earns more than the entire training cost of DeepSeek V3. The arrival of DeepSeek R1 makes the situation worse: although some information cannot be disclosed yet, it will be made public soon, and the situation may look even more unfavorable by then.

The anonymous post from the Meta employee is translated below (translated by DeepSeek R1):

Meta Generative AI Department Enters a State of Emergency

It all started with DeepSeek V3, which instantly made the Llama 4 benchmark scores look dated. What is even more embarrassing is that "an unknown Chinese company achieved such a breakthrough with a training budget of just $5 million."

The engineering team is frantically dissecting the DeepSeek architecture, trying to replicate every technical detail. This is no exaggeration: our code base is being searched from top to bottom.

Management is agonizing over how to justify the department's huge expenses. When the annual salary of each "leader" in the generative AI department exceeds the entire training cost of DeepSeek V3, and there are dozens of such "leaders", how are they supposed to explain this to senior management?

DeepSeek R1 makes the situation even more serious. Although confidential information cannot be disclosed, the relevant data will be made public soon.

This should have been a lean, engineering-focused team, but the organization was deliberately bloated as large numbers of people flooded in seeking influence. The result of this Game of Thrones? In the end, everyone lost.

Introduction to DeepSeek Series Models

DeepSeek-V3: A Mixture-of-Experts (MoE) language model with 671B total parameters, of which 37B are activated for each token. It adopts the Multi-head Latent Attention (MLA) and DeepSeekMoE architectures and is pre-trained on 14.8 trillion high-quality tokens. After supervised fine-tuning and reinforcement learning, it surpasses other open-source models in multiple evaluations and performs comparably to top closed-source models such as GPT-4o and Claude 3.5 Sonnet. Training is inexpensive, requiring only 2.788 million H800 GPU hours (about 5.576 million US dollars), and the training process is stable.
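
The figures quoted above can be sanity-checked with a few lines of arithmetic. The sketch below uses only the numbers stated in this article; the function names are illustrative, and the hourly rate is simply what the quoted cost and GPU hours imply, not a separately sourced price.

```python
# Figures quoted in the text above.
TOTAL_PARAMS_B = 671        # total parameters, in billions
ACTIVE_PARAMS_B = 37        # parameters activated per token, in billions
GPU_HOURS = 2_788_000       # H800 GPU hours for training
TOTAL_COST_USD = 5_576_000  # quoted training cost in US dollars


def active_fraction(active_b: float, total_b: float) -> float:
    """Share of the model's parameters used for any single token."""
    return active_b / total_b


def implied_hourly_rate(cost_usd: float, gpu_hours: float) -> float:
    """GPU-hour price implied by the quoted cost and GPU hours."""
    return cost_usd / gpu_hours


if __name__ == "__main__":
    frac = active_fraction(ACTIVE_PARAMS_B, TOTAL_PARAMS_B)
    rate = implied_hourly_rate(TOTAL_COST_USD, GPU_HOURS)
    print(f"Active per token: {frac:.1%}")                 # Active per token: 5.5%
    print(f"Implied rate: ${rate:.2f} per GPU hour")       # Implied rate: $2.00 per GPU hour
```

In other words, only about one parameter in eighteen takes part in processing each token, which is a large part of why an MoE model of this size can be trained and served comparatively cheaply.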

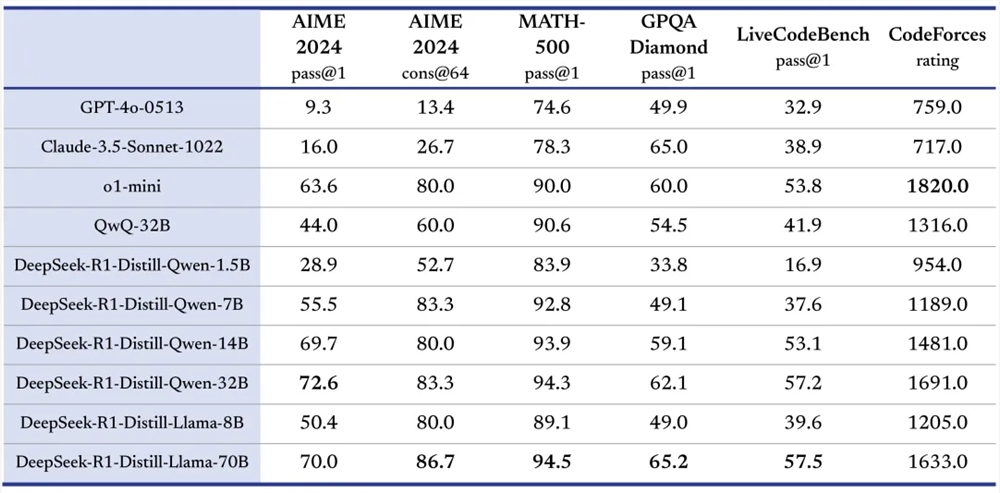

DeepSeek-R1: Includes DeepSeek-R1-Zero and DeepSeek-R1. Trained through large-scale reinforcement learning without relying on supervised fine-tuning (SFT), DeepSeek-R1-Zero demonstrates abilities such as self-verification and reflection, but it suffers from problems such as poor readability and language mixing. To address these problems, DeepSeek-R1 introduces multi-stage training and cold-start data. Its performance is comparable to the official release of OpenAI o1 on mathematics, code, and natural language reasoning tasks. Distilled models of several parameter sizes have also been open-sourced to support the open-source community.

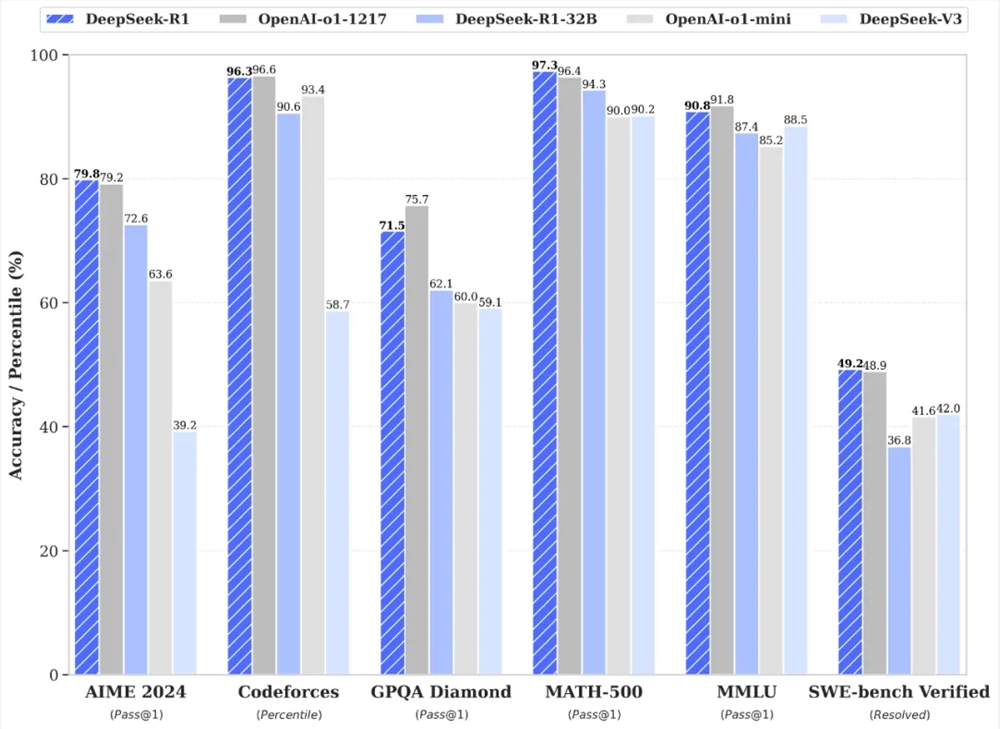

Excellent performance: DeepSeek-V3 and DeepSeek-R1 perform well across multiple benchmarks. For example, DeepSeek-V3 achieves excellent results on MMLU, DROP, and other evaluations; DeepSeek-R1 reaches high accuracy on AIME 2024, MATH-500, and other tests, matching the official release of OpenAI o1 and surpassing it in some respects.

Training innovation:

DeepSeek-V3 adopts an auxiliary-loss-free load-balancing strategy and a multi-token prediction (MTP) objective, which limit the performance degradation that load balancing can cause and improve overall model performance. It is also trained in FP8, validating the feasibility of FP8 training on a large-scale model.
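
To make the idea of balancing expert load without an auxiliary loss more concrete, here is a toy simulation. It assumes the mechanism is a per-expert bias that is added to the routing scores only when choosing experts and is nudged up or down after each step depending on load. The skewed scores, step size, and all function names are hypothetical choices for illustration; this is a sketch of the general technique, not DeepSeek's implementation.

```python
import random


def route_top_k(scores, bias, k):
    """Pick the k experts with the highest biased score (score + bias)."""
    ranked = sorted(range(len(scores)), key=lambda i: scores[i] + bias[i], reverse=True)
    return ranked[:k]


def update_bias(bias, load, step_size):
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    mean_load = sum(load) / len(load)
    new_bias = []
    for b, n in zip(bias, load):
        if n > mean_load:
            new_bias.append(b - step_size)
        elif n < mean_load:
            new_bias.append(b + step_size)
        else:
            new_bias.append(b)
    return new_bias


def simulate(num_experts=8, k=2, tokens_per_step=512, steps=200, step_size=0.01, seed=0):
    """Route random tokens for several steps and record per-expert load."""
    rng = random.Random(seed)
    # Hypothetical skew: low-index experts get systematically higher scores.
    skew = [0.5 - 0.1 * i for i in range(num_experts)]
    bias = [0.0] * num_experts
    history = []
    for _ in range(steps):
        load = [0] * num_experts
        for _ in range(tokens_per_step):
            scores = [rng.random() + skew[i] for i in range(num_experts)]
            for expert in route_top_k(scores, bias, k):
                load[expert] += 1
        history.append(load)
        bias = update_bias(bias, load, step_size)  # no extra loss term involved
    return history, bias


if __name__ == "__main__":
    history, bias = simulate()
    print("first step load:", history[0])
    print("last step load: ", history[-1])
```

In this toy setup the first step sends most tokens to the favoured experts, while later steps spread them far more evenly, and the balancing happens without adding any term to the training loss.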

DeepSeek-R1-Zero is trained with pure reinforcement learning, relying solely on simple reward signals to optimize the model, which shows that reinforcement learning alone can improve a model's reasoning ability. DeepSeek-R1 adds fine-tuning on cold-start data to improve the model's stability and readability.
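
A "simple reward signal" of this kind can be as plain as a rule that checks the output format and the final answer. The sketch below is one possible reading, assuming the model is asked to wrap its reasoning in `<think>` tags and its result in `<answer>` tags; the tag layout, the exact-match comparison, and the equal weighting are assumptions made for illustration, not the published reward design.

```python
import re

# Assumed output layout: <think>reasoning</think> followed by <answer>result</answer>.
THINK_ANSWER = re.compile(
    r"^<think>(.+?)</think>\s*<answer>(.+?)</answer>\s*$", re.DOTALL
)


def format_reward(completion: str) -> float:
    """1.0 if the completion follows the assumed tag layout, else 0.0."""
    return 1.0 if THINK_ANSWER.match(completion.strip()) else 0.0


def accuracy_reward(completion: str, reference: str) -> float:
    """1.0 if the tagged answer exactly matches the reference, else 0.0."""
    match = THINK_ANSWER.match(completion.strip())
    if match is None:
        return 0.0
    return 1.0 if match.group(2).strip() == reference.strip() else 0.0


def total_reward(completion: str, reference: str) -> float:
    """Sum of the two rule-based signals (equal weighting is an assumption)."""
    return accuracy_reward(completion, reference) + format_reward(completion)


if __name__ == "__main__":
    good = "<think>2 + 2 = 4</think><answer>4</answer>"
    print(total_reward(good, "4"))  # 2.0
```

Because such rules need no learned reward model, they are cheap to evaluate at scale, which fits the article's description of optimizing with simple signals only.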

Open-source sharing: The DeepSeek series embraces open source and releases its model weights, including DeepSeek-V3, DeepSeek-R1, and the small distilled models. Users are allowed to train other models through distillation, which promotes exchange and innovation in AI technology.

Multi-domain strengths: DeepSeek-R1 demonstrates strong capabilities across multiple fields. In coding, it earns a high rating on the Codeforces platform, surpassing most human contestants; in natural language processing, it performs excellently on a wide range of text comprehension and generation tasks.

High cost-effectiveness: The DeepSeek series model APIs are affordable. For example, the input and output prices of the DeepSeek-V3 API are much lower than those of comparable models, and DeepSeek-R1 API pricing is also competitive, reducing costs for developers.

Natural language processing tasks: These include text generation, question answering, machine translation, and text summarization. For example, in a question-answering system, DeepSeek-R1 can understand the question and use its reasoning ability to give an accurate answer; in text generation tasks, it can produce high-quality text on a given topic.

Code development: Helps developers write code, debug programs, and understand code logic. For example, when developers run into a code problem, DeepSeek-R1 can analyze the code and suggest solutions; it can also generate code scaffolding or specific snippets from a functional description.

Solving mathematical problems: Solves complex mathematical problems in mathematics education, scientific research, and similar settings. DeepSeek-R1, for example, performs well on AIME competition problems and can help students learn mathematics and help researchers work through mathematical problems.

Model research and development: Provides references and tools for AI researchers studying model distillation, improved model architectures, and training methods. Researchers can run experiments on the open-source DeepSeek models to explore new technical directions.

Decision support: Processes data and information to provide decision-making advice in business, finance, and other fields. For example, it can analyze market data to inform a company's marketing strategy, or process financial data to assist investment decisions.

Visit the platform: Users can log in to the DeepSeek official website (https://www.deepseek.com/) to enter the platform.

Select a model: On the official website or in the app, conversations are driven by DeepSeek-V3 by default. Turning on the "Deep Thinking" mode switches to the DeepSeek-R1 model. When calling through the API, set the corresponding model parameter in your code as needed, for example model='deepseek-reasoner' to use DeepSeek-R1.

Input tasks: In the dialogue interface, describe the task in natural language, such as "Write a romance novel", "Explain what this code does", or "Solve this equation". When using the API, build the request according to the API specification and pass the task-related information as input parameters.

Get results: After the model processes the task, it returns the results, and the generated text or answers can be viewed in the interface. When using the API, parse the result data from the API response for subsequent processing.
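
For the API route, the steps above can be sketched with the Python standard library alone. Only model='deepseek-reasoner' comes from this article. The endpoint URL, the 'deepseek-chat' name for DeepSeek-V3, the OpenAI-style request and response layout, and the DEEPSEEK_API_KEY environment variable are assumptions that should be checked against the official API documentation before use.

```python
import json
import os
import urllib.request

# Assumed endpoint; confirm against the official API documentation.
API_URL = "https://api.deepseek.com/chat/completions"


def build_payload(task: str, use_reasoning: bool = True) -> dict:
    """Select a model and wrap the natural-language task as a chat message."""
    # 'deepseek-reasoner' is named in the article; 'deepseek-chat' is an assumption.
    model = "deepseek-reasoner" if use_reasoning else "deepseek-chat"
    return {"model": model, "messages": [{"role": "user", "content": task}]}


def extract_answer(response: dict) -> str:
    """Pull the generated text out of an assumed OpenAI-style response body."""
    return response["choices"][0]["message"]["content"]


def ask(task: str, api_key: str, use_reasoning: bool = True) -> str:
    """Send the task to the API and return the model's answer."""
    request = urllib.request.Request(
        API_URL,
        data=json.dumps(build_payload(task, use_reasoning)).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=120) as reply:
        return extract_answer(json.load(reply))


if __name__ == "__main__":
    key = os.environ.get("DEEPSEEK_API_KEY")  # hypothetical variable name
    if key:
        print(ask("Solve the equation x^2 - 5x + 6 = 0", key))
```

Keeping the payload construction and response parsing in separate functions makes both easy to check without a network connection or an API key.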

Conclusion

The DeepSeek series models have achieved remarkable results in the field of AI thanks to their outstanding performance, innovative training methods, commitment to open-source sharing, and cost-effectiveness.

If you are interested in AI technology, feel free to like, comment, and share your views on the DeepSeek series of models. We will continue to follow DeepSeek's development and look forward to it bringing more surprises and breakthroughs to the AI field, driving the continued progress of AI technology, and bringing more change and opportunity to various industries.

The emergence of DeepSeek has brought new vitality and competition to the field of artificial intelligence, and its open-source spirit is especially commendable. In the future, the DeepSeek series models are expected to show their strengths in more fields. Let's wait and see!