The Hugging Face team has released two lightweight AI models, SmolVLM-256M and SmolVLM-500M, with 256 million and 500 million parameters respectively. The team says they are currently the smallest AI models that can process images, video and text. Both are aimed at devices with less than 1GB of memory, giving developers a low-cost, efficient way to process data. Despite their size, they outperform many much larger models on a range of benchmarks, most notably on grade-school science diagrams, which points to their potential in education and research.

Hugging Face, the AI development platform, recently released two new models, SmolVLM-256M and SmolVLM-500M. The team claims they are the smallest AI models to date that can handle images, short videos and text, which makes them well suited to constrained devices such as laptops with under about 1GB of memory. This lets developers process large amounts of data more efficiently and at lower cost.
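To see why parameter count matters for the 1GB figure, a rough back-of-the-envelope estimate helps: the memory needed just to hold a model's weights is its parameter count times the bytes used per parameter. The sketch below is a minimal illustration under stated assumptions: it uses the nominal counts of 256 million and 500 million (real checkpoints differ slightly), the helper name `weight_memory_gib` is hypothetical, and it ignores activations, caches and framework overhead, so actual usage during inference is higher.

```python
# Hypothetical helper: weight-only memory estimate for a model.
# Assumption: nominal parameter counts; activations, caches and
# runtime overhead are NOT included, so real usage is higher.

BYTES_PER_PARAM = {
    "float32": 4,   # full precision
    "bfloat16": 2,  # common 16-bit inference format
    "int8": 1,      # 8-bit quantized weights
}

def weight_memory_gib(num_params: int, dtype: str) -> float:
    """Return the memory needed to store the raw weights, in GiB (2**30 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30

MODELS = {"SmolVLM-256M": 256_000_000, "SmolVLM-500M": 500_000_000}

if __name__ == "__main__":
    for name, n in MODELS.items():
        for dtype in BYTES_PER_PARAM:
            print(f"{name:13s} {dtype:9s} {weight_memory_gib(n, dtype):.2f} GiB")
```

At 16-bit precision this gives roughly 0.48 GiB for the 256M model and 0.93 GiB for the 500M model, so the smaller model leaves much more headroom under 1GB once runtime memory is added on top.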

The two models have 256 million and 500 million parameters respectively. Parameter count roughly tracks problem-solving ability: all else being equal, models with more parameters usually perform better. The SmolVLM models can describe images or video clips and answer questions about PDF documents and their contents, including scanned text and charts. This gives them broad application prospects in fields such as education and research.

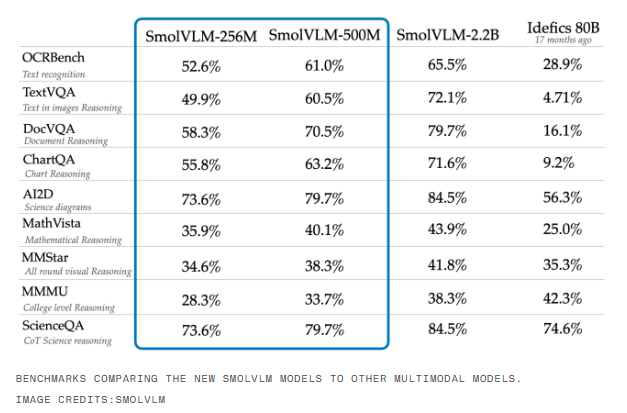

To train the models, the Hugging Face team used The Cauldron, a collection of 50 high-quality image-and-text datasets, and Docmatix, a dataset of file scans paired with detailed captions. Both were created by Hugging Face's M4 team, which focuses on multimodal AI. Notably, SmolVLM-256M and SmolVLM-500M outperform much larger models such as Idefics 80B on various benchmarks, and they perform especially well on AI2D, a test of the ability to analyze grade-school science diagrams.

However, affordable and versatile as they are, small models may not match large models on complex reasoning tasks. A study from Google DeepMind, Microsoft Research and Mila, the Quebec AI institute, found that many small models performed disappointingly on such tasks. The researchers suggest this may be because small models tend to pick up on surface-level patterns in data but struggle to apply that knowledge in new contexts.

Small as they are, Hugging Face's SmolVLM models show impressive capabilities across a range of tasks, which makes them a strong option for developers who want efficient data processing at low cost.

The SmolVLM series opens up new possibilities for lightweight AI applications. Its strong performance on resource-constrained devices points to a new direction for AI development. Although there is still room for improvement on complex tasks, its low barrier to entry and high efficiency make it worth many developers' attention. We look forward to seeing SmolVLM applied in more fields and further optimized.