High-definition video is becoming the norm, but faces in low-resolution video are often blurry, which noticeably degrades the viewing experience. Existing face restoration methods struggle to balance detail reconstruction with temporal consistency. A research team at Nanyang Technological University has developed the KEEP framework, which offers a new approach to restoring faces in low-resolution video.

Video has become an indispensable part of daily life. However, video quality, and especially how clearly facial details are rendered, often determines the viewing experience.

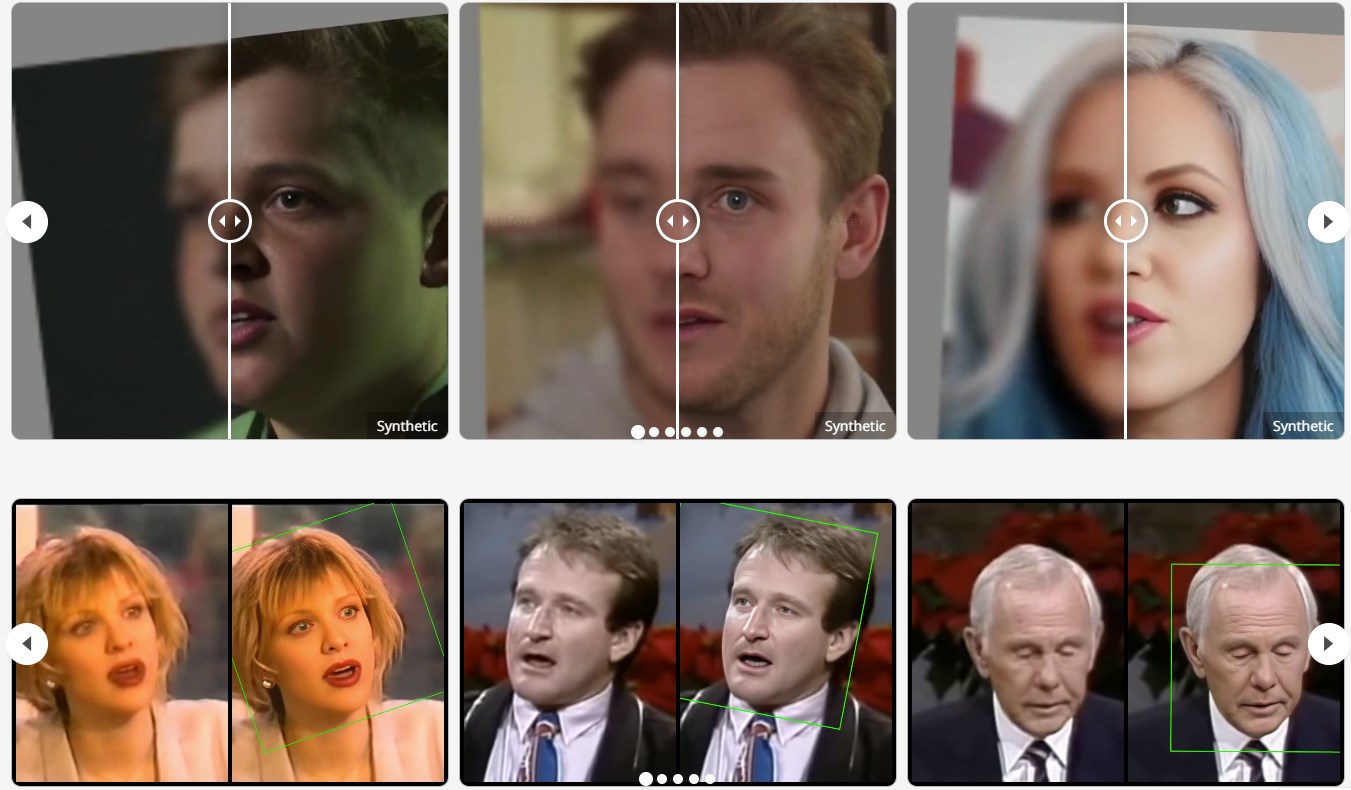

Many existing video face restoration methods either apply general-purpose video super-resolution networks to face datasets or process each frame independently. Such methods often struggle to reconstruct facial details while keeping them consistent over time. To address this, the research team at Nanyang Technological University has introduced a new framework called KEEP (Kalman-Inspired Feature Propagation), which restores faces in low-resolution videos to high resolution.

Product portal: https://top.aibase.com/tool/keep

The core idea of KEEP comes from the Kalman filter, which gives the method a form of "recall" during restoration: KEEP uses information from previously restored frames to guide and adjust the restoration of the current frame. This greatly improves the consistency and continuity of facial details across video frames.

The KEEP framework consists of four modules: an encoder, a decoder, a Kalman filter network, and cross-frame attention (CFA). The encoder and decoder form a model based on a Vector-Quantized Generative Adversarial Network (VQGAN) dedicated to generating high-definition face images. The Kalman filter network is the core of the method. It combines the observed state of the current frame with the state predicted from the previous frame to form a more accurate estimate of the current state, thereby generating a clearer image.
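The predict-then-update idea behind the Kalman filter network can be illustrated with a textbook scalar Kalman filter. This is only a minimal sketch under simplifying assumptions, not KEEP's learned network: the state is a single number rather than a latent feature map, the dynamics are assumed to be identity (the previous estimate is carried forward unchanged), and the names `kalman_update`, `filter_sequence`, `process_var`, and `obs_var` are hypothetical.

```python
from typing import List, Tuple


def kalman_update(prior: float, prior_var: float,
                  obs: float, obs_var: float) -> Tuple[float, float]:
    """Fuse a predicted state with a noisy observation (scalar case)."""
    # The gain weighs the observation against the prediction:
    # near 1 trusts the observation, near 0 trusts the prediction.
    gain = prior_var / (prior_var + obs_var)
    posterior = prior + gain * (obs - prior)
    posterior_var = (1.0 - gain) * prior_var
    return posterior, posterior_var


def filter_sequence(observations: List[float],
                    process_var: float = 0.01,
                    obs_var: float = 0.25) -> List[float]:
    """Run the filter over per-frame observations, one value per frame."""
    # Assumption: the first frame has no history, so its observation
    # is taken as the initial estimate.
    state, var = observations[0], obs_var
    estimates = [state]
    for obs in observations[1:]:
        # Predict: carry the previous estimate forward (identity dynamics)
        # and let its uncertainty grow by the process variance.
        prior, prior_var = state, var + process_var
        # Update: correct the prediction with the current frame's observation.
        state, var = kalman_update(prior, prior_var, obs, obs_var)
        estimates.append(state)
    return estimates


if __name__ == "__main__":
    noisy = [1.0, 1.4, 0.6, 1.2, 0.8]  # made-up per-frame measurements
    print(filter_sequence(noisy))
```

Because each estimate blends the current observation with what earlier frames predicted, the filtered sequence fluctuates less than the raw observations, which is the intuition behind using this principle for temporal consistency.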

In addition, the cross-frame attention module further strengthens the correlation between frames, helping to maintain temporal consistency and detail during playback. What sets this design apart is that it effectively integrates information across frames, so the final video is both sharp and rich in detail.
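As a rough illustration of how attention across frames works in general, the sketch below lets each feature vector of the current frame attend to the feature vectors of a previous frame using standard scaled dot-product attention. It is an assumption-laden toy, not KEEP's CFA module: it omits the learned query, key, and value projections, uses plain Python lists instead of tensors, and the function name `cross_frame_attention` is hypothetical.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def cross_frame_attention(current: List[Vector],
                          previous: List[Vector]) -> List[Vector]:
    """For each current-frame feature (query), return a weighted average of
    previous-frame features (used here as both keys and values)."""
    dim = len(current[0])
    scale = 1.0 / math.sqrt(dim)
    output = []
    for query in current:
        # Similarity between this query and every previous-frame feature.
        weights = softmax([dot(query, key) * scale for key in previous])
        # Weighted sum of previous-frame features, component by component.
        output.append([
            sum(w * value[i] for w, value in zip(weights, previous))
            for i in range(dim)
        ])
    return output


if __name__ == "__main__":
    current_frame = [[10.0, 0.0]]               # made-up feature vectors
    previous_frame = [[1.0, 0.0], [0.0, 1.0]]
    print(cross_frame_attention(current_frame, previous_frame))
```

Each output vector is a convex combination of previous-frame features, so the current frame borrows most heavily from the previous-frame locations it resembles most.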

Through extensive experiments, the research team has confirmed that KEEP performs well at restoring facial details and maintaining temporal consistency, both in complex simulated settings and on real-world videos. The technology is expected to noticeably improve the video viewing experience.

Key points:

KEEP effectively maintains both facial detail and temporal consistency in face videos.

The framework applies the Kalman filter principle to propagate and fuse information across frames.

KEEP captured facial details well in experiments, bringing new momentum to the field of video face super-resolution.

The innovation of the KEEP framework lies in its application of the Kalman filter principle and its ability to integrate information across frames. It sets a new benchmark for high-definition video restoration and is expected to greatly improve the viewing experience. The technology has broad application prospects in film and television production, video conferencing, and other fields.