A research team at the BAIR lab of the University of California, Berkeley has developed a reinforcement learning framework called HIL-SERL that significantly improves robots' ability to learn complex manipulation skills in the real world. It combines human demonstrations and corrections with an efficient reinforcement learning algorithm. This lets robots master a variety of precise manipulation tasks in a short time, overcoming the slow, error-prone learning that limited earlier approaches. The technology could transform how robots learn and are deployed, laying a solid foundation for industrial automation and for bringing robots into everyday life.

Recently, Sergey Levine's research team at UC Berkeley's BAIR lab proposed HIL-SERL, a reinforcement learning framework that addresses the problem of robots learning complex manipulation skills in the real world.

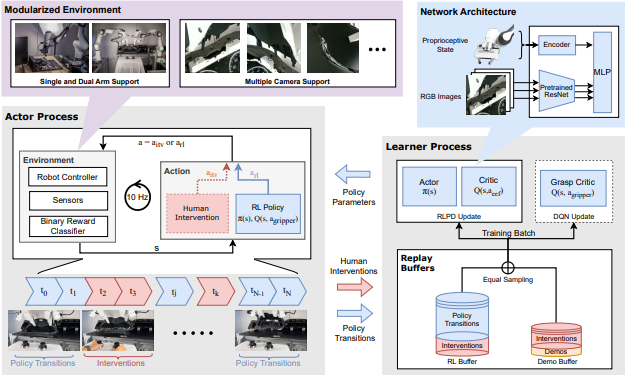

The framework combines human demonstrations and corrections with an efficient reinforcement learning algorithm. With it, robots master a range of dexterous manipulation tasks, such as dynamic manipulation, precision assembly, and dual-arm coordination, in just 1 to 2.5 hours of training.

Teaching a robot new skills used to be a struggle, much like helping a restless child with homework: everything had to be taught step by step and corrected again and again. Worse, the real world is complex and constantly changing. Robots tended to learn slowly, forget quickly, and fail at the slightest slip.

HIL-SERL is like hiring a "tutor" for the robot. The tutor brings detailed "textbooks", namely human demonstrations and corrections, and pairs them with an efficient learning algorithm that helps the robot pick up skills quickly.
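For readers curious about what pairing demonstrations with reinforcement learning can look like in code, here is a minimal Python sketch. It assumes, based on our reading of the paper, that demonstrations are kept in their own buffer and that each training batch is drawn half from the demonstrations and half from the robot's own experience. The names `Transition` and `sample_mixed_batch` are hypothetical and are not taken from the HIL-SERL codebase; the learning update itself is omitted.

```python
import random
from collections import deque
from dataclasses import dataclass
from typing import Deque, List


@dataclass
class Transition:
    """One step of experience (hypothetical, simplified to integers)."""
    obs: int
    action: int
    reward: float
    next_obs: int
    done: bool


def sample_mixed_batch(
    demo_buf: Deque[Transition],
    online_buf: Deque[Transition],
    batch_size: int,
    rng: random.Random,
) -> List[Transition]:
    """Draw half the batch from demonstrations, half from the robot's own data.

    Falls back to demonstrations only while the online buffer is still empty.
    """
    if not online_buf:
        return [rng.choice(demo_buf) for _ in range(batch_size)]
    half = batch_size // 2
    demo_part = [rng.choice(demo_buf) for _ in range(half)]
    online_part = [rng.choice(online_buf) for _ in range(batch_size - half)]
    return demo_part + online_part


rng = random.Random(0)
demo_buf: Deque[Transition] = deque(maxlen=10_000)     # human demonstrations
online_buf: Deque[Transition] = deque(maxlen=100_000)  # robot's own rollouts
for i in range(20):  # a handful of demonstration steps
    demo_buf.append(Transition(i, 1, 0.0, i + 1, False))
for i in range(5):   # a few steps collected by the robot itself
    online_buf.append(Transition(100 + i, 0, 0.0, 101 + i, False))

batch = sample_mixed_batch(demo_buf, online_buf, batch_size=8, rng=rng)
print(sum(t.obs < 100 for t in batch), "demo samples of", len(batch))
# prints: 4 demo samples of 8
```

The fixed 50/50 split guarantees the small demonstration set keeps shaping every update, even after the robot's own experience grows to dwarf it.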

With only a handful of demonstrations, the robot learns to carry out a remarkably wide range of tasks competently, from playing with building blocks and flipping pancakes to assembling furniture and installing circuit boards.

To help the robot learn faster and better, HIL-SERL also adds a human-in-the-loop correction mechanism. Put simply, when the robot makes a mistake, a human operator can step in and correct it in real time, and these corrections are fed back to the robot as training data. This way the robot keeps learning from its mistakes, avoids repeating them, and eventually becomes truly proficient.
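The correction loop can be sketched in a few lines of Python. This is a simplified illustration, not the authors' implementation: it assumes, following our reading of the paper, that every step goes into the reinforcement learning buffer, while steps where the human took over are also saved as extra demonstrations. The toy 1-D task, `run_episode`, `policy`, and `human` are all hypothetical stand-ins for the real robot, learned policy, and teleoperation device.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Deque, Optional, Tuple


@dataclass
class Transition:
    obs: int
    action: int
    reward: float
    next_obs: int
    done: bool
    intervened: bool  # True if the human supplied this action


def run_episode(
    env_step: Callable[[int, int], Tuple[int, float, bool]],
    policy: Callable[[int], int],
    human: Callable[[int], Optional[int]],
    obs: int,
    demo_buf: Deque[Transition],
    online_buf: Deque[Transition],
    max_steps: int = 50,
) -> int:
    """Roll out one episode, letting a human override the policy at any step."""
    for _ in range(max_steps):
        correction = human(obs)  # None means the operator does not intervene
        intervened = correction is not None
        action = correction if intervened else policy(obs)
        next_obs, reward, done = env_step(obs, action)
        t = Transition(obs, action, reward, next_obs, done, intervened)
        online_buf.append(t)    # every step feeds the RL buffer
        if intervened:
            demo_buf.append(t)  # corrections are also kept as demonstrations
        obs = next_obs
        if done:
            break
    return obs


# Hypothetical toy task: move along a line from position 0 to position 4.
def env_step(x: int, a: int) -> Tuple[int, float, bool]:
    nx = x + a
    done = nx >= 4
    return nx, (1.0 if done else 0.0), done


def policy(x: int) -> int:
    return -1 if x == 2 else 1  # the robot's "mistake": it backs off at x == 2


def human(x: int) -> Optional[int]:
    return 1 if x == 2 else None  # the operator corrects only that state


demo_buf: Deque[Transition] = deque(maxlen=10_000)
online_buf: Deque[Transition] = deque(maxlen=10_000)
final = run_episode(env_step, policy, human, 0, demo_buf, online_buf)
print(final, len(online_buf), len(demo_buf))  # prints: 4 4 1
```

Storing corrections in both places means the robot learns from the human's fix twice: once as ordinary experience and once as an example of what it should have done.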

The experimental results are striking. Across the tasks tested, the robots reached success rates close to 100% after just 1 to 2.5 hours of training and completed the tasks nearly twice as fast as the baseline methods they were compared against.

More importantly, HIL-SERL is the first system to use reinforcement learning in the real world to achieve image-based dual-arm coordination, in which two robot arms work together to complete more complex tasks. Assembling a timing belt, for example, requires highly coordinated motion from both arms.

HIL-SERL not only shows the huge potential of robot learning but also points the way for future industrial applications and research. Perhaps one day each of us will have a robot "apprentice" at home that helps with housework, assembles furniture, and even plays games with us. It is an exciting prospect!

Of course, HIL-SERL still has limitations. Tasks that require long-horizon planning, for example, may be beyond its current reach. In addition, it has so far been tested mainly in laboratory settings and has not yet been validated at scale in real-world scenarios. Still, I believe these problems will be gradually solved as the technology advances.

Paper address: https://hil-serl.github.io/static/hil-serl-paper.pdf

Project address: https://hil-serl.github.io/

In summary, HIL-SERL represents significant progress in robot learning. Its efficient learning and human-in-the-loop correction mechanism give it great potential for real-world applications. Although some limitations remain, its future development is well worth watching.