Anthropic has launched a token counting API for its Claude language models. It is designed to help developers manage token usage more effectively and to make interactions with Claude more efficient and controllable. Precise token control matters because it directly affects cost, output quality, and user experience. The API gives developers insight into token usage by letting them count the tokens in a request before sending it to Claude, so they can optimize prompts and reduce costs. This is especially important for developers and projects that need fine-grained cost control.

In today's AI landscape, precise control over language models has become crucial for developers and data scientists. Anthropic's Claude models open up many possibilities, but managing token usage effectively remains a challenge. To address this, Anthropic has launched a token counting API that offers deeper insight into token usage, improving efficiency and control when working with the models.

Tokens are the fundamental units a language model reads and generates: a token can be a single character, a punctuation mark, part of a word, or a whole word. How tokens are managed directly affects cost efficiency, response quality, and user experience. By managing tokens carefully, developers can not only reduce the cost of API calls but also make sure generated responses are complete, improving the interaction between users and chatbots.
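To make the link between tokens and cost concrete: API cost scales linearly with token counts, billed at a per-million-token rate that usually differs for input and output. The short sketch below only illustrates that arithmetic. The prices `PRICE_PER_MTOK_INPUT` and `PRICE_PER_MTOK_OUTPUT` are hypothetical placeholders, not Anthropic's published rates, and `estimate_cost` is an illustrative helper, not part of any SDK.

```python
# Placeholder prices in USD per million tokens. These are hypothetical
# values for illustration, not Anthropic's published rates.
PRICE_PER_MTOK_INPUT = 3.00
PRICE_PER_MTOK_OUTPUT = 15.00


def estimate_cost(input_tokens: int, output_tokens: int,
                  input_price: float = PRICE_PER_MTOK_INPUT,
                  output_price: float = PRICE_PER_MTOK_OUTPUT) -> float:
    """Estimated USD cost of one call from token counts and per-million prices."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# A 12,000-token prompt with a 1,000-token reply:
# (12,000 * 3 + 1,000 * 15) / 1,000,000 = 0.051
print(f"${estimate_cost(12_000, 1_000):.4f}")  # prints $0.0510
```

Counting input tokens before a call supplies the first argument exactly. The output side can only be bounded, for example by the `max_tokens` value you request.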

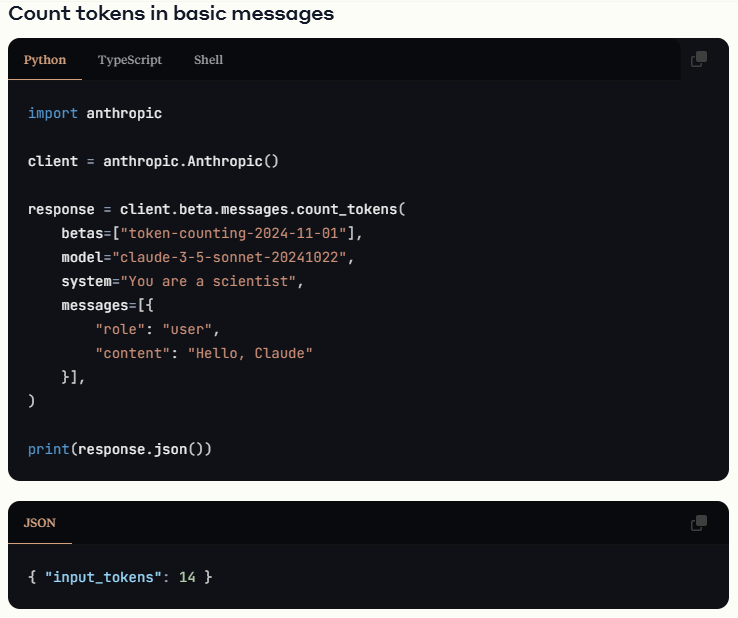

Anthropic's token counting API lets developers count tokens without sending a full request to the Claude model. It measures the input tokens of a request, such as the messages, system prompt, and tool definitions. Output tokens can only be known once a response is actually generated. Because no response is produced, counting uses far fewer resources than a real model call. This up-front estimate lets developers adjust prompt content before making the actual API call, which streamlines the development process.
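At the HTTP level, the counting call is a POST that takes the same `model`, `messages`, and optional `system` fields as a normal message request and returns a JSON object with an `input_tokens` field. The stdlib-only sketch below builds such a request without sending it. The URL and `anthropic-version` header reflect Anthropic's public API documentation as we understand it. The helper names `build_count_tokens_request` and `send_count_tokens` are hypothetical. When the feature first launched it was in beta and may also have required an `anthropic-beta` header, so check the official page before relying on this.

```python
import json
import urllib.request
from typing import Dict, List, Optional

# Endpoint and version header as we understand Anthropic's public docs;
# verify both against the official documentation before use.
COUNT_TOKENS_URL = "https://api.anthropic.com/v1/messages/count_tokens"
ANTHROPIC_VERSION = "2023-06-01"


def build_count_tokens_request(api_key: str, model: str,
                               messages: List[Dict[str, str]],
                               system: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) a POST request asking the API to count the
    input tokens of a prospective message request."""
    body: Dict[str, object] = {"model": model, "messages": messages}
    if system is not None:
        body["system"] = system
    return urllib.request.Request(
        COUNT_TOKENS_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "x-api-key": api_key,
            "anthropic-version": ANTHROPIC_VERSION,
            "content-type": "application/json",
        },
        method="POST",
    )


def send_count_tokens(req: urllib.request.Request) -> int:
    """Send the request and return the `input_tokens` field of the JSON reply."""
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)["input_tokens"]


# Usage (requires network access and a valid API key):
#   import os
#   req = build_count_tokens_request(
#       os.environ["ANTHROPIC_API_KEY"],
#       "claude-3-5-sonnet-20241022",
#       [{"role": "user", "content": "Hello, Claude"}],
#   )
#   print(send_count_tokens(req))
```

Separating request construction from sending keeps the payload easy to inspect and test offline.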

Currently, the token counting API supports a range of Claude models, including Claude 3.5 Sonnet, Claude 3.5 Haiku, Claude 3 Haiku, and Claude 3 Opus. Developers can get a token count with a few lines of code, in either Python or TypeScript.
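With the official Python SDK, the same count is a single method call. The sketch below assumes a recent `anthropic` package in which counting is exposed as `client.messages.count_tokens(model=..., messages=...)` and returns an object with an `input_tokens` attribute. Early releases placed it under `client.beta.messages`, so check your installed version. The wrapper `count_prompt_tokens` is a hypothetical helper. The client is passed in as a parameter so that it can be replaced with a stub when testing.

```python
from typing import Any, Dict, List


def count_prompt_tokens(client: Any, model: str, prompt: str) -> int:
    """Count the input tokens of a single-turn user prompt.

    `client` is expected to be an `anthropic.Anthropic()` instance, or any
    object exposing the same `messages.count_tokens` method.
    """
    messages: List[Dict[str, str]] = [{"role": "user", "content": prompt}]
    result = client.messages.count_tokens(model=model, messages=messages)
    return result.input_tokens


# Usage, assuming the `anthropic` package is installed and
# ANTHROPIC_API_KEY is set in the environment:
#   import anthropic
#   client = anthropic.Anthropic()
#   print(count_prompt_tokens(client, "claude-3-5-sonnet-20241022", "Hello, Claude"))
```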

The API's main features and benefits include: accurate token estimates, which help developers keep inputs within token limits; optimized token usage, which helps avoid incomplete responses in complex applications; and cost-effectiveness, because understanding token usage lets developers better control API call costs, which matters especially for startups and cost-sensitive projects.
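Staying within token limits comes down to simple budgeting. A response can only be as long as the space left in the model's context window after the prompt, so the counted input tokens plus the requested output budget (`max_tokens`) should fit in that window. The minimal sketch below assumes the 200,000-token context window of the Claude 3 and 3.5 model families. `CONTEXT_WINDOW`, `output_budget`, and `fits_in_window` are illustrative names, not part of any SDK.

```python
# Assumed context window for the Claude 3 and 3.5 model families;
# check the documentation for the exact model you use.
CONTEXT_WINDOW = 200_000


def output_budget(input_tokens: int, context_window: int = CONTEXT_WINDOW) -> int:
    """Tokens left for the response after the prompt occupies part of the window."""
    return max(context_window - input_tokens, 0)


def fits_in_window(input_tokens: int, max_tokens: int,
                   context_window: int = CONTEXT_WINDOW) -> bool:
    """True if the prompt plus the requested output budget fits in the window."""
    return input_tokens + max_tokens <= context_window


# Pre-flight checks for a prompt counted at 190,000 and 195,000 tokens:
print(output_budget(190_000))          # 10000
print(fits_in_window(190_000, 8_000))  # True
print(fits_in_window(195_000, 8_000))  # False
```

If the check fails, the usual options are to shorten the prompt or to lower `max_tokens`, accepting a shorter answer.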

In real-world applications, the token counting API can help build more efficient customer support chatbots, more accurate document summarization, and better interactive learning tools. By providing accurate insight into token usage, Anthropic gives developers more control over the model, letting them fine-tune prompt content, reduce development costs, and improve the user experience.
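For a customer support chatbot, one common pattern is to drop the oldest turns of a conversation until the remaining history fits a token budget. The sketch below shows one way to do this. `trim_history` is a hypothetical helper, and `count` stands in for any function that returns an input-token count for a message list, for instance one that calls the token counting API. The loop calls `count` once per check. With a live API, that means one counting request per dropped turn, which is worth keeping in mind under rate limits.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def trim_history(messages: List[Message],
                 count: Callable[[List[Message]], int],
                 budget: int) -> List[Message]:
    """Drop the oldest turns until count(history) <= budget.

    The most recent message is always kept, and the history is kept
    starting on a user turn. The input list is not modified.
    """
    trimmed = list(messages)
    while len(trimmed) > 1 and count(trimmed) > budget:
        trimmed.pop(0)  # discard the oldest turn
        # Re-align so the history starts with a user message.
        while len(trimmed) > 1 and trimmed[0]["role"] != "user":
            trimmed.pop(0)
    return trimmed


# Toy counter for illustration only: one "token" per character of content.
def char_count(msgs: List[Message]) -> int:
    return sum(len(m["content"]) for m in msgs)


history = [
    {"role": "user", "content": "aa"},
    {"role": "assistant", "content": "bb"},
    {"role": "user", "content": "cc"},
    {"role": "assistant", "content": "dd"},
    {"role": "user", "content": "ee"},
]
print(trim_history(history, char_count, 6))  # keeps the last three turns
```

If even the latest message exceeds the budget, it is returned unchanged, so callers should still check the final count before sending.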

The token counting API gives developers better tools to optimize their projects and save time and resources in the rapidly evolving field of language models.

Official documentation: https://docs.anthropic.com/en/docs/build-with-claude/token-counting

Key points:

The token counting API helps developers accurately track token usage and improves development efficiency.

Understanding token usage helps control API call costs, making the API well suited to cost-sensitive projects.

Support for multiple Claude models gives developers flexibility across different application scenarios.

In short, Anthropic's token counting API gives developers finer-grained control over Claude models, improves development efficiency, reduces costs, and is an important tool for building efficient AI applications.