As virtual reality and augmented reality technologies develop rapidly, demand for high-fidelity, personalized virtual avatars is growing. URAvatar addresses that demand: it builds a realistic avatar from a mobile phone scan, and the avatar can be driven and relit in real time under different lighting conditions, which improves both the realism and the interactivity of virtual avatars.

Researchers recently proposed URAvatar (Universal Relightable Gaussian Codec Avatars), a technique that generates a high-fidelity virtual avatar from a mobile phone scan.

The work improves the visual quality of virtual avatars and also lets users drive and adjust their avatars in real time under different lighting conditions.

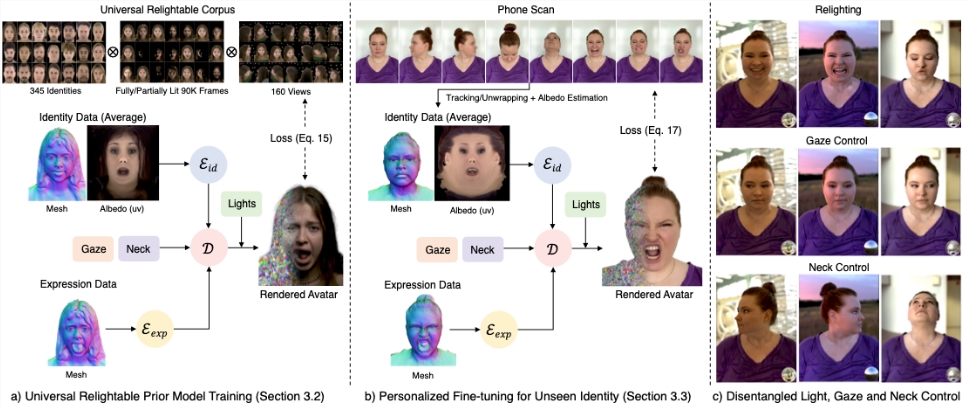

URAvatar is built on a complex light transport model. Unlike previous methods, which estimate reflectance parameters through inverse rendering, it uses a learnable radiance transfer model that makes efficient real-time rendering possible. The main challenge is getting that model to transfer across different identities. To address it, the research team trained a universal relightable avatar model on hundreds of high-quality multi-view face scans captured under controllable point light sources.
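The general idea behind radiance transfer can be illustrated with a small sketch. This is not URAvatar's implementation: it is a generic, textbook-style example that assumes lighting is encoded as four low-order spherical harmonic coefficients and that each surface point stores a transfer vector. Here the transfer vector is hand-built for an unshadowed diffuse surface, standing in for values that a trained model would predict. All function names are hypothetical.

```python
import math

# Real spherical-harmonic constants for bands 0 and 1.
SH_C0 = 0.282095
SH_C1 = 0.488603


def sh_basis(direction):
    """Evaluate the 4 band-0/band-1 SH basis functions for a direction."""
    x, y, z = direction
    norm = math.sqrt(x * x + y * y + z * z)
    x, y, z = x / norm, y / norm, z / norm
    return [SH_C0, SH_C1 * y, SH_C1 * z, SH_C1 * x]


def light_coeffs(direction, intensity=1.0):
    """SH coefficients of a distant point light (assumed lighting encoding)."""
    return [intensity * b for b in sh_basis(direction)]


def diffuse_transfer(normal):
    """Hand-built stand-in for a learned transfer vector.

    Uses the standard clamped-cosine weights (pi for band 0, 2*pi/3 for
    band 1) of an unshadowed diffuse surface with the given normal.
    """
    b = sh_basis(normal)
    a0, a1 = math.pi, 2.0 * math.pi / 3.0
    return [a0 * b[0], a1 * b[1], a1 * b[2], a1 * b[3]]


def shade(transfer, light):
    """Outgoing radiance is a dot product of transfer and light coefficients."""
    return sum(t * l for t, l in zip(transfer, light))


def add_lights(a, b):
    """Lighting environments combine by adding their coefficients."""
    return [x + y for x, y in zip(a, b)]


# One surface point facing +z, lit from the front and then from two lights.
transfer = diffuse_transfer((0.0, 0.0, 1.0))
front = light_coeffs((0.0, 0.0, 1.0))
side = light_coeffs((1.0, 0.0, 0.0), intensity=0.5)

radiance_front = shade(transfer, front)
radiance_both = shade(transfer, add_lights(front, side))
```

Because shading reduces to a dot product, relighting only requires swapping the light coefficients; the transfer vector stays fixed. That linearity is one reason transfer-based approaches suit real-time rendering.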

In practice, a user only needs to take a simple scan with a mobile phone in a natural environment. From that scan the system reconstructs the head's pose, geometry, and reflectance texture, and generates a personalized relightable avatar. After fine-tuning, the avatar can be animated naturally and controlled dynamically, and it stays consistent across different ambient lighting conditions.

URAvatar also lets users control the avatar's gaze direction and neck movement independently, which adds to its expressiveness and interactivity. The technology could open new opportunities in games, social platforms, and similar fields, letting users take part in virtual worlds in a more vivid and personal way.

Key points:

URAvatar generates a personalized virtual avatar from a mobile phone scan, improving the avatar's visual quality.

The technology uses a learnable radiance transfer model to enable real-time rendering and relighting.

Users can independently control the avatar's gaze direction and neck movement, which makes virtual interaction more expressive.

URAvatar marks a milestone in virtual avatar generation. Its simple capture process, realistic results, and fine-grained control could bring the virtual and real worlds closer together and give users a more immersive, personalized experience. In the future, URAvatar may be applied in more fields.