Beijing Zhipu Huazhang Technology Co., Ltd. has released CogVideoX v1.5, an open-source video generation model. Since its first release in early August, the CogVideoX series has drawn industry attention for its technology and developer-friendly design. This update supports longer, higher-resolution video generation and significantly improves the quality and semantic understanding of image-to-video generation. Notably, the new version is also integrated with the Qingying platform and the CogSound sound effect model, further rounding out the video generation ecosystem.

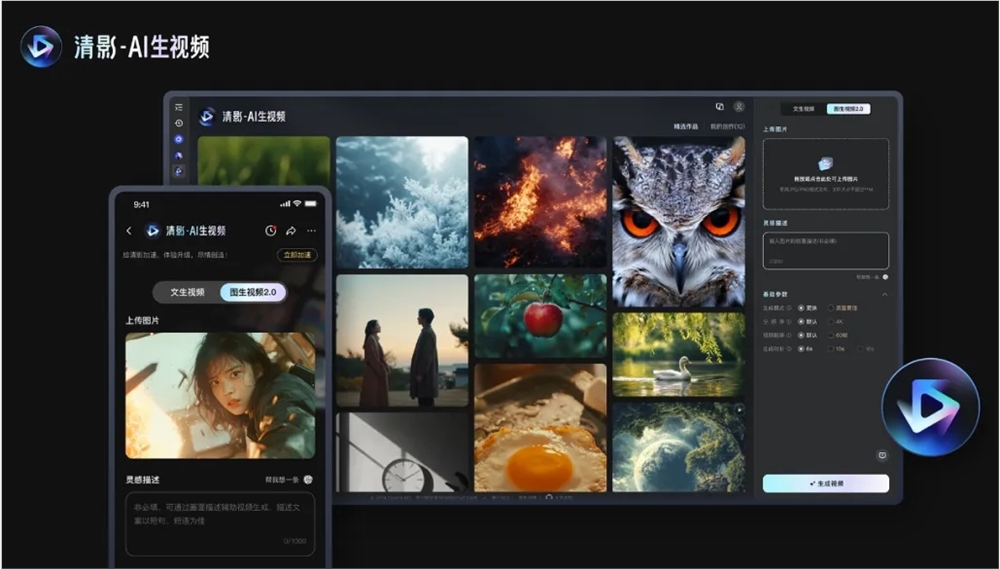

The open-source release includes two models: CogVideoX v1.5-5B and CogVideoX v1.5-5B-I2V. The new version will launch on the Qingying platform at the same time and, combined with the newly released CogSound sound effect model, will offer services with improved quality, ultra-high-definition resolution, variable aspect ratios for different playback scenarios, multi-channel output, and AI video with sound effects.

On the technical side, CogVideoX v1.5 uses an automated filtering framework to remove video data that lacks dynamic connectivity, and uses CogVLM2-caption, an end-to-end video understanding model, to generate accurate descriptions of video content, which improves text understanding and instruction following. The new version also adopts an efficient three-dimensional variational autoencoder (3D VAE) to address content coherence, and uses an in-house Transformer architecture that fuses the text, time, and space dimensions. This architecture drops the traditional cross-attention module and uses expert adaptive layer normalization to make better use of time-step information in the diffusion model.
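To make the idea of expert adaptive layer normalization more concrete, the following is a minimal pure-Python sketch and not Zhipu's implementation. It assumes that each modality (text and video) has its own "expert" that predicts a scale and shift from the time-step embedding, and that the modulated tokens are then concatenated into a single sequence for joint self-attention in place of cross-attention. The function names, the dictionary layout of an expert, and the `1 + scale` form of the modulation are all illustrative assumptions.

```python
import math

def layer_norm(x, eps=1e-6):
    """Normalize one token vector to zero mean and (near) unit variance."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]

def linear(weights, bias, t_emb):
    """Matrix-vector product: one output value per weight row."""
    return [sum(w * t for w, t in zip(row, t_emb)) + b
            for row, b in zip(weights, bias)]

def adaptive_layer_norm(tokens, t_emb, expert):
    """Normalize each token, then scale and shift it with values
    predicted from the time-step embedding (hypothetical expert layout)."""
    scale = linear(expert["scale_w"], expert["scale_b"], t_emb)
    shift = linear(expert["shift_w"], expert["shift_b"], t_emb)
    return [[n * (1.0 + s) + h
             for n, s, h in zip(layer_norm(tok), scale, shift)]
            for tok in tokens]

def modulate_sequence(text_tokens, video_tokens, t_emb,
                      text_expert, video_expert):
    """Apply a separate expert per modality, then concatenate the results
    into one sequence that a joint self-attention layer could consume."""
    text_out = adaptive_layer_norm(text_tokens, t_emb, text_expert)
    video_out = adaptive_layer_norm(video_tokens, t_emb, video_expert)
    return text_out + video_out
```

In this sketch the same time-step embedding conditions both modalities, but each modality gets its own scale and shift, which is one plausible reading of how separate experts could align text and video features before they share a single attention layer.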

For training, CogVideoX v1.5 is built on an efficient diffusion model training framework that trains quickly on long video sequences by using a variety of parallel computing and time optimization techniques. The company said it has verified that scaling laws hold for video generation. It plans to expand data volume and model scale, and to explore new model architectures that compress video information more efficiently and better integrate text and video content.

Code: https://github.com/thudm/cogvideo

Model: https://huggingface.co/THUDM/CogVideoX1.5-5B-SAT

The open-source release of CogVideoX v1.5 gives new momentum to video generation technology and gives developers more powerful tools. Zhipu Huazhang's continued technical innovation and commitment to open source deserve recognition, and the model's future applications are worth watching. More innovative applications built on CogVideoX v1.5 can be expected.