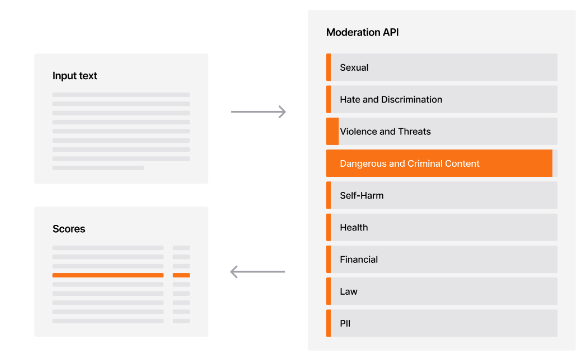

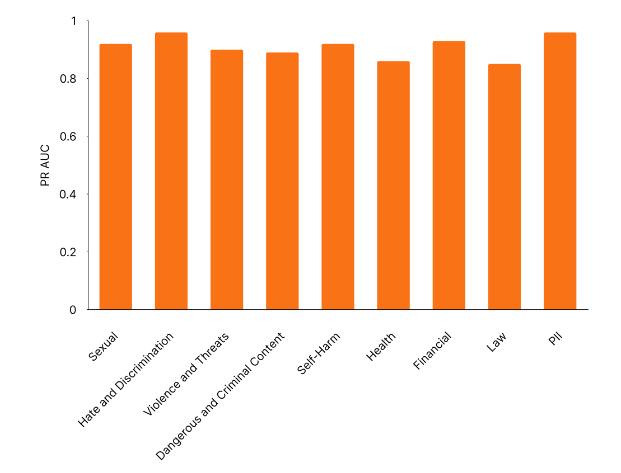

French artificial intelligence startup Mistral AI has announced a new content moderation API, aiming to compete with industry giants such as OpenAI and to address growing challenges in AI safety and content filtering. The API is built on Mistral's Ministral 8B model, which has been fine-tuned to detect nine categories of harmful content, including sexual content and hate speech, and it supports 11 languages, including Chinese and English. Mistral AI emphasizes the key role of safety in AI applications and has established partnerships with Microsoft Azure, Qualcomm, and SAP as it expands into the enterprise AI market.

French artificial intelligence startup Mistral AI recently launched a new content moderation API, aiming to compete with OpenAI and other industry leaders while addressing growing concerns about AI safety and content filtering.

The service is built on Mistral's Ministral 8B model, fine-tuned to detect potentially harmful content across nine categories, including sexual content, hate speech, violence, dangerous activities, and personally identifiable information. The API can analyze both raw text and conversational content.
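As a rough illustration of how a client might use such a service, the Python sketch below builds a request for a raw-text check and reads back the flagged categories. The endpoint URL, the model name, and the response shape (`results` containing a `categories` map) are assumptions made for illustration and should be verified against Mistral's documentation; the helper names are hypothetical.

```python
import json
import urllib.request

# Assumed values; confirm against Mistral's documentation before use.
MODERATION_URL = "https://api.mistral.ai/v1/moderations"
MODEL_NAME = "mistral-moderation-latest"


def build_text_request(texts, api_key):
    """Build (but do not send) an HTTP request asking for raw-text moderation."""
    payload = {"model": MODEL_NAME, "input": list(texts)}
    return urllib.request.Request(
        MODERATION_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def flagged_categories(response_body):
    """Return, for each input, the sorted names of categories marked true.

    Assumes a body shaped like {"results": [{"categories": {name: bool}}]}.
    """
    return [
        sorted(name for name, hit in result.get("categories", {}).items() if hit)
        for result in response_body.get("results", [])
    ]


def moderate(texts, api_key):
    """Send the request and return the flagged categories for each text."""
    request = build_text_request(texts, api_key)
    with urllib.request.urlopen(request, timeout=30) as response:
        return flagged_categories(json.load(response))
```

Calling `moderate(["Some text to check."], api_key)` with a valid key would return one list of category names per input text. Keeping request construction and response parsing in separate functions lets both be checked without network access.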

In announcing the API, Mistral AI emphasized that "safety plays a key role in making AI useful." The company believes that system-level guardrails are crucial to protecting downstream applications.

The release of the content moderation API comes as the AI industry faces mounting pressure to improve the safety of its technology. Last month, Mistral also joined other major AI companies in signing an agreement at the AI Safety Summit, pledging to develop AI technologies responsibly.

The newly launched API is already in use on Mistral's Le Chat platform and supports 11 languages: Arabic, Chinese, English, French, German, Italian, Japanese, Korean, Portuguese, Russian, and Spanish. This multilingual capability sets Mistral apart from some competitors that focus primarily on English content.

Mistral AI has also established partnerships with high-profile companies such as Microsoft Azure, Qualcomm, and SAP, steadily increasing its influence in the enterprise AI market. SAP recently announced that it will host Mistral's models, including Mistral Large 2, on its own infrastructure to provide secure AI solutions that comply with European regulations.

Mistral's technical strategy shows a maturity beyond its years. By training its moderation model to understand the context of a conversation, rather than just analyzing isolated text, Mistral has developed a system that can catch subtler harmful content that more basic filters may miss.
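To make the difference between isolated text and conversational input concrete, here is a minimal Python sketch of how a client might package a whole dialogue so that the final turn is judged in context. The model name, the role names, and the payload shape (a list of conversations, each a list of role/content messages) are assumptions for illustration rather than a confirmed schema, and `build_conversation_payload` is a hypothetical helper.

```python
import json

# Assumed model name; confirm against Mistral's documentation.
MODEL_NAME = "mistral-moderation-latest"
ALLOWED_ROLES = {"system", "user", "assistant"}


def build_conversation_payload(messages):
    """Package a whole dialogue so the last turn can be judged in context."""
    if not messages:
        raise ValueError("conversation must contain at least one message")
    cleaned = []
    for role, content in messages:
        if role not in ALLOWED_ROLES:
            raise ValueError(f"unexpected role: {role!r}")
        cleaned.append({"role": role, "content": content})
    # One conversation per request here; the outer list is an assumed batch dimension.
    return {"model": MODEL_NAME, "input": [cleaned]}


conversation = [
    ("user", "My neighbour keeps parking in my spot."),
    ("assistant", "That sounds frustrating. Have you talked to them?"),
    ("user", "No. How do I make sure they regret it?"),
]
print(json.dumps(build_conversation_payload(conversation), indent=2))
```

Read alone, the last message in this example is ambiguous; sent together with the earlier turns, a context-aware classifier has more to work with than a filter that sees only one string at a time.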

Currently, the moderation API is available through Mistral's cloud platform with usage-based pricing. Mistral said it will continue to improve the system's accuracy and expand its functionality based on customer feedback and evolving safety needs.

Since its inception, Mistral has rapidly grown into an important force in shaping how enterprises think about AI safety. In a field dominated by American tech giants, Mistral's European perspective on privacy and security may prove to be its biggest advantage.

API portal: https://docs.mistral.ai/capabilities/guardrailing/

Key points:

Mistral AI has launched a multilingual content moderation API that supports 11 languages and detects nine categories of harmful content.

The API is already in use on Mistral's Le Chat platform, and Mistral has established partnerships with several enterprises.

Mistral's technology takes conversational context into account, improving its detection of potentially harmful content.

With its capabilities and broad partnerships, Mistral AI's multilingual content moderation API is expected to gain a foothold in the highly competitive AI safety field. Its focus on conversational context also points to a new direction for improving AI content moderation technology. We look forward to Mistral AI bringing more innovations to the field of AI safety.