A research team recently released HelloMeme, an expression transfer framework that carries one person's facial expression over to a character in another image with high fidelity. Through its network structure and a tuned AnimateDiff module, HelloMeme balances smooth motion against image quality in generated video, and it supports ARKit Face Blendshapes, giving users fine control over character expressions. Its hot-swap adapter design keeps it compatible with models based on SD1.5, which widens the range of styles it can work with, and its reference module makes video generation more efficient. This article covers HelloMeme's core functions, its technical features, and how it compares with other methods.

In short, HelloMeme takes the expression of the person in one picture and transfers it to the character in another picture.

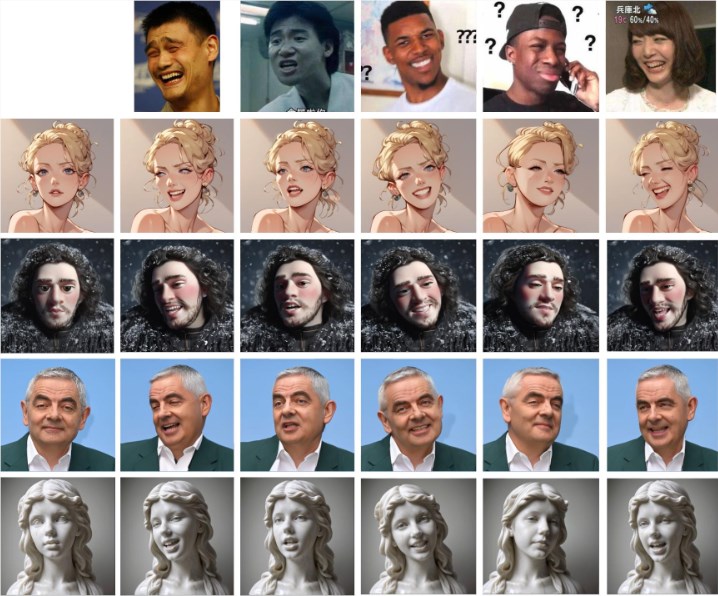

As the figure shows, given a reference expression picture (top row), HelloMeme transfers the details of that expression to the characters in other pictures.

The core of HelloMeme is its network structure. The framework extracts features from each frame of the driving video and feeds them into the HMControlModule, which is how it produces video output. The initial videos, however, flickered between frames, which hurt the viewing experience. To address this, the team introduced the AnimateDiff module, which significantly improved video continuity but also reduced image fidelity to some extent.

To resolve this trade-off, the researchers further optimized and adjusted the AnimateDiff module, ultimately improving video continuity while keeping image quality high.
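The tension between continuity and fidelity can be seen in a toy example. The sketch below is an illustration only: it is not HelloMeme's code and not how AnimateDiff works. It assumes frames are flattened lists of pixel intensities and uses a simple neighbour average as a stand-in for any temporal processing; the names `flicker`, `temporal_smooth` and `fidelity_error` are hypothetical.

```python
from typing import List

Frame = List[float]  # one frame, flattened to a list of pixel intensities


def flicker(frames: List[Frame]) -> float:
    """Mean absolute pixel change between consecutive frames."""
    diffs = [
        abs(a - b)
        for prev, cur in zip(frames, frames[1:])
        for a, b in zip(prev, cur)
    ]
    return sum(diffs) / len(diffs) if diffs else 0.0


def temporal_smooth(frames: List[Frame]) -> List[Frame]:
    """Average each frame with its immediate neighbours.

    A toy stand-in for temporal modelling, NOT the AnimateDiff module.
    """
    smoothed = []
    for i in range(len(frames)):
        window = frames[max(0, i - 1): i + 2]
        # zip(*window) groups the same pixel position across the window
        smoothed.append([sum(px) / len(window) for px in zip(*window)])
    return smoothed


def fidelity_error(original: List[Frame], processed: List[Frame]) -> float:
    """Mean absolute deviation of processed frames from the originals."""
    diffs = [
        abs(a - b)
        for fo, fp in zip(original, processed)
        for a, b in zip(fo, fp)
    ]
    return sum(diffs) / len(diffs) if diffs else 0.0


# A deliberately flickery clip: two pixels that swap values every frame.
clip = [[0.0, 1.0], [1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
smooth_clip = temporal_smooth(clip)
```

On this clip, `flicker(smooth_clip)` is lower than `flicker(clip)`, while `fidelity_error(clip, smooth_clip)` is greater than zero: naive smoothing buys continuity at the cost of detail, which is the kind of trade-off the team reports having tuned away.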

HelloMeme also supports facial expression editing. Because it binds to ARKit Face Blendshapes, users can directly control the facial expressions of characters in the generated video. Creators can therefore produce videos with specific emotions and expressions as needed, which makes the resulting content more expressive.
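To make blendshape control concrete, here is a minimal sketch of building a per-frame expression curve. ARKit blendshapes are named coefficients in the range 0.0 to 1.0; `jawOpen`, `mouthSmileLeft` and `mouthSmileRight` are real ARKit coefficient names. Everything else is an assumption for illustration: the `interpolate` helper is hypothetical, and how HelloMeme actually consumes these values is not described in this article.

```python
from typing import Dict, List

BlendShapes = Dict[str, float]  # ARKit coefficient name -> value in [0.0, 1.0]


def clamp01(x: float) -> float:
    """Keep a coefficient inside the valid ARKit range."""
    return max(0.0, min(1.0, x))


def interpolate(start: BlendShapes, end: BlendShapes,
                num_frames: int) -> List[BlendShapes]:
    """Linearly blend from one expression to another over num_frames frames.

    Hypothetical helper: missing coefficients are treated as 0.0 (neutral).
    """
    if num_frames < 2:
        raise ValueError("num_frames must be at least 2")
    keys = sorted(set(start) | set(end))
    frames = []
    for i in range(num_frames):
        t = i / (num_frames - 1)  # 0.0 at the first frame, 1.0 at the last
        frames.append({
            k: clamp01((1 - t) * start.get(k, 0.0) + t * end.get(k, 0.0))
            for k in keys
        })
    return frames


neutral = {"jawOpen": 0.0, "mouthSmileLeft": 0.0, "mouthSmileRight": 0.0}
smile = {"jawOpen": 0.2, "mouthSmileLeft": 0.8, "mouthSmileRight": 0.8}

# Five frames easing from a neutral face into a smile.
curve = interpolate(neutral, smile, 5)
```

A sequence like `curve` is the sort of per-frame signal that could drive a character's expression over time in place of a driving video.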

In terms of technical compatibility, HelloMeme adopts a hot-swap adapter design based on SD1.5. The biggest advantage of this design is that it does not affect the generalization capability of the T2I (text-to-image) model, so any stylized model developed on SD1.5 can be combined with HelloMeme without retraining the base model. This lets creators reuse existing SD1.5 styles for expression transfer.
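The general idea of a hot-swap adapter can be sketched in a few lines. This is a toy model of the pattern, not HelloMeme's implementation: the classes `BaseLayer` and `AdaptedLayer` are hypothetical, and vectors of floats stand in for real network activations. The point it illustrates is that the base weights are never modified, so detaching the adapter restores the original behaviour and the same adapter can be attached to a different base.

```python
from typing import Callable, List, Optional

Vector = List[float]


class BaseLayer:
    """Stands in for a frozen layer of a pretrained (for example, stylized) model."""

    def __init__(self, scale: float) -> None:
        self.scale = scale  # never changed by the adapter

    def __call__(self, x: Vector) -> Vector:
        return [self.scale * v for v in x]


class AdaptedLayer:
    """Wraps a base layer and optionally adds an adapter's output on top."""

    def __init__(self, base: BaseLayer) -> None:
        self.base = base
        self.adapter: Optional[Callable[[Vector], Vector]] = None

    def attach(self, adapter: Callable[[Vector], Vector]) -> None:
        self.adapter = adapter

    def detach(self) -> None:
        self.adapter = None

    def __call__(self, x: Vector) -> Vector:
        y = self.base(x)
        if self.adapter is None:
            return y  # identical to the untouched base model
        # The adapter contributes a residual; base weights stay as they were.
        return [a + b for a, b in zip(y, self.adapter(x))]


def offset_adapter(x: Vector) -> Vector:
    """Toy adapter that adds a constant offset per element."""
    return [0.5 for _ in x]


layer = AdaptedLayer(BaseLayer(2.0))
plain = layer([1.0, 2.0])      # base behaviour
layer.attach(offset_adapter)
adapted = layer([1.0, 2.0])    # base plus adapter residual
layer.detach()
restored = layer([1.0, 2.0])   # base behaviour again
```

Because the adapter only adds to the base output, swapping `BaseLayer(2.0)` for another base (a different SD1.5-derived style, in the article's terms) requires no change to the adapter itself.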

The research team also found that introducing the HMReferenceModule significantly strengthens the fidelity conditions available during video generation, which means high-quality videos can be produced with fewer sampling steps. This improves generation efficiency and is a step toward real-time video generation.

In side-by-side comparisons with other methods, HelloMeme's transferred expressions look more natural and stay closer to the original expression.

Project page: https://songkey.github.io/hellomeme/

ComfyUI integration: https://github.com/HelloVision/ComfyUI_HelloMeme

Key points:

HelloMeme improves both the smoothness and the image quality of generated video through its network structure and a tuned AnimateDiff module.

The framework supports ARKit Face Blendshapes, allowing users to flexibly control the facial expressions of characters and make video content more expressive.

The hot-swap adapter design keeps HelloMeme compatible with other models based on SD1.5, giving creators more flexibility.

With its efficient expression transfer, smooth video generation and broad compatibility, HelloMeme opens up new possibilities for video creation and is expected to be useful in film and television production, animation, special effects and other fields. Because it is open source, more developers can take part and help develop and improve the technology.