The Shanghai AI Lab team has open-sourced the LLaMA version of the o1 project, an open-source replication of o1, OpenAI's Olympiad problem-solving model. The project uses techniques such as Monte Carlo tree search and reinforcement learning to achieve strong results on mathematical Olympiad problems, and its performance even exceeds that of some commercial closed-source solutions. The release gives developers valuable learning resources and a foundation for research, and it advances the application of artificial intelligence in mathematics. The project includes pre-training datasets, pre-trained models, and reinforcement learning training code, and it uses several optimization techniques, including LoRA and PPO, to improve the model's mathematical reasoning ability.

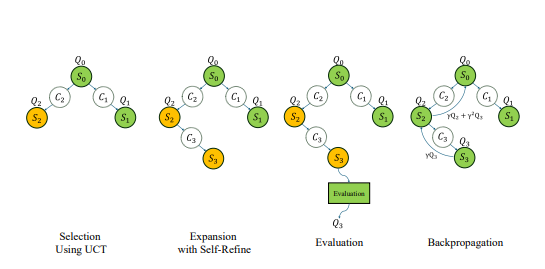

Recently, the Shanghai AI Lab team released the LLaMA version of the o1 project, which aims to replicate OpenAI's Olympiad problem-solving model o1. The project combines several advanced techniques, including Monte Carlo tree search, self-play reinforcement learning, PPO, and AlphaGo Zero's dual-policy paradigm, and it has attracted wide attention from the developer community.

Well before OpenAI released the o1 series, the Shanghai AI Lab team had begun exploring Monte Carlo tree search as a way to improve the mathematical ability of large models. After o1 was released, the team upgraded its algorithm further, focused on math Olympiad problems, and developed the work into an open-source counterpart to OpenAI's Strawberry project.

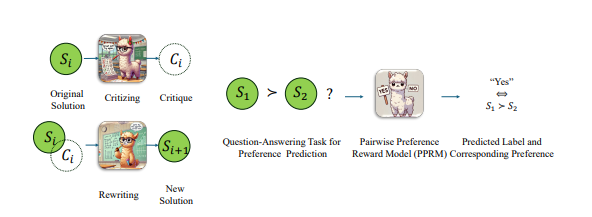

To improve the LLaMA model's performance on mathematical Olympiad problems, the team adopted a pairwise optimization strategy: rather than assigning an absolute score to each answer, it compares the relative strengths and weaknesses of two answers. With this approach, the team made significant progress on AIME2024, one of the most difficult benchmarks. Of its 30 problems, the optimized model solved 8, whereas the original LLaMA-3.1-8B-Instruct model solved only 2. This result outperforms other commercial closed-source solutions apart from o1-preview and o1-mini.
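
The article does not specify how pairwise comparisons become a training signal or a final ranking. As a minimal sketch, assuming a Bradley-Terry style model (a common choice for pairwise preferences, not confirmed as the team's method), each answer receives a scalar score, and the probability that answer A beats answer B is sigmoid(s_A − s_B). The helper names below (`preference_prob`, `pairwise_loss`, `rank_by_pairwise_wins`) are hypothetical.

```python
import math
from itertools import combinations

def stable_sigmoid(x: float) -> float:
    """Logistic function written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def softplus(x: float) -> float:
    """log(1 + exp(x)), computed stably."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def preference_prob(score_a: float, score_b: float) -> float:
    """Bradley-Terry probability that answer A is judged better than answer B (assumed model)."""
    return stable_sigmoid(score_a - score_b)

def pairwise_loss(score_preferred: float, score_rejected: float) -> float:
    """Negative log-likelihood that the preferred answer wins: -log sigmoid(d) = softplus(-d)."""
    return softplus(-(score_preferred - score_rejected))

def rank_by_pairwise_wins(scores: dict[str, float]) -> list[str]:
    """Rank candidate answers by their expected number of pairwise wins."""
    wins = {name: 0.0 for name in scores}
    for a, b in combinations(scores, 2):
        p = preference_prob(scores[a], scores[b])
        wins[a] += p
        wins[b] += 1.0 - p
    return sorted(wins, key=wins.get, reverse=True)
```

For example, `rank_by_pairwise_wins({"answer_1": 1.2, "answer_2": -0.3, "answer_3": 0.5})` returns `["answer_1", "answer_3", "answer_2"]`. Because every comparison depends only on score differences, adding the same constant to all scores leaves every preference unchanged, which is the sense in which the signal is relative rather than absolute.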

At the end of October, the team announced significant progress in replicating OpenAI o1 on the basis of the AlphaGo Zero architecture: the model acquires advanced reasoning ability by interacting with a search tree during learning, without manual annotation. Less than a week later, the project was open-sourced.
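
AlphaGo Zero's tree search decides which branch to explore with the PUCT rule, Q(s,a) + c_puct · P(s,a) · √N(s) / (1 + N(s,a)), which balances the running value estimate Q against the policy prior P. The article does not describe how LLaMA-O1 adapts this rule, so the sketch below assumes that each node is a partial reasoning step, that priors come from a policy model, and that leaf values come from some evaluator. `Node`, `select_child`, `expand`, `backup`, and the `c_puct = 1.5` default are illustrative assumptions.

```python
import math
from dataclasses import dataclass, field

@dataclass
class Node:
    """One search-tree node; here assumed to be a partial reasoning trace (hypothetical)."""
    prior: float                      # P(s, a) from the policy model
    visit_count: int = 0              # N(s, a)
    value_sum: float = 0.0            # W(s, a), sum of backed-up values
    children: dict[str, "Node"] = field(default_factory=dict)

    def q_value(self) -> float:
        """Mean action value Q(s, a); 0 for unvisited nodes."""
        return self.value_sum / self.visit_count if self.visit_count else 0.0

def puct_score(parent: Node, child: Node, c_puct: float = 1.5) -> float:
    """AlphaGo Zero-style PUCT: Q + c_puct * P * sqrt(N_parent) / (1 + N_child)."""
    exploration = c_puct * child.prior * math.sqrt(parent.visit_count) / (1 + child.visit_count)
    return child.q_value() + exploration

def select_child(node: Node, c_puct: float = 1.5) -> tuple[str, Node]:
    """Pick the child (candidate next step) with the highest PUCT score."""
    return max(node.children.items(), key=lambda item: puct_score(node, item[1], c_puct))

def expand(node: Node, priors: dict[str, float]) -> None:
    """Attach one child per candidate next step, using the policy priors."""
    for step, p in priors.items():
        node.children[step] = Node(prior=p)

def backup(path: list[Node], value: float) -> None:
    """Propagate a leaf evaluation up the visited path.

    Reasoning is treated as single-agent here, so the value is not sign-flipped
    between levels as it would be in a two-player game like Go.
    """
    for node in path:
        node.visit_count += 1
        node.value_sum += value
```

Early on, the prior term dominates and the search favors steps the policy already likes; as visits accumulate, the exploration bonus shrinks and branches whose backed-up values are poor lose out to less-visited alternatives.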

The open-source release of LLaMA-version o1 currently includes pre-training datasets, pre-trained models, and reinforcement learning training code. The "OpenLongCoT-Pretrain" dataset contains more than 100,000 long chain-of-thought samples. Each sample records the complete reasoning process for a math problem, including the thinking content, scoring results, problem description, graph coordinates, calculations, and derivation of the conclusion, together with critiques and verification of every reasoning step that evaluate and guide the inference process. After continued pre-training on this dataset, the model can read and produce long chain-of-thought reasoning the way o1 does.
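
The article lists what each OpenLongCoT-Pretrain sample contains but not its actual schema. The sketch below is a hypothetical record type whose class and field names are invented for illustration; it covers only the problem, the per-step reasoning with its critique and score, and the conclusion, and shows one way such a record could be flattened into a single long chain-of-thought string for continued pre-training.

```python
from dataclasses import dataclass, field

@dataclass
class ReasoningStep:
    """One step of a solution plus its critique (hypothetical fields)."""
    content: str     # the reasoning performed in this step
    critique: str    # criticism / verification of this step
    score: float     # evaluation score assigned to this step

@dataclass
class LongCoTSample:
    """Hypothetical shape of one long chain-of-thought training sample."""
    problem: str
    steps: list[ReasoningStep] = field(default_factory=list)
    conclusion: str = ""

    def to_pretraining_text(self) -> str:
        """Flatten the sample into one long chain-of-thought string."""
        lines = [f"Problem: {self.problem}"]
        for i, step in enumerate(self.steps, start=1):
            lines.append(f"Step {i}: {step.content}")
            lines.append(f"Critique {i}: {step.critique} (score: {step.score})")
        lines.append(f"Conclusion: {self.conclusion}")
        return "\n".join(lines)
```

Interleaving each step with its critique keeps the evaluation next to the reasoning it judges, so a model pre-trained on such text sees both how to reason and how to check that reasoning.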

Although the project is called LLaMA-O1, the pre-trained model currently released by the team is based on Google's Gemma 2. Starting from this pre-trained model, developers can continue with reinforcement learning training. The training process consists of running self-play with Monte Carlo tree search to generate experience, storing that experience in a prioritized experience replay buffer, sampling batches from the buffer for training, and updating the model parameters and the experience priorities. The training code also relies on several key techniques: LoRA for parameter-efficient fine-tuning, the PPO algorithm for policy optimization, Generalized Advantage Estimation (GAE) for computing advantage functions, and prioritized experience replay for more efficient training.
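
The store, sample, and update-priority steps of this loop can be sketched with a proportional prioritized replay buffer: item i is sampled with probability p_i^α / Σ_k p_k^α, and importance-sampling weights (N · P(i))^(−β), normalized by the largest weight in the batch, correct the resulting bias. This is a minimal list-based sketch; the class name, the α = 0.6 and β = 0.4 defaults, and the use of an absolute per-sample error as the new priority are assumptions, not details taken from the LLaMA-O1 code.

```python
import random
from dataclasses import dataclass, field
from typing import Any

@dataclass
class PrioritizedReplayBuffer:
    """Proportional prioritized experience replay, list-based for clarity (hypothetical class)."""
    capacity: int
    alpha: float = 0.6   # how strongly priorities skew sampling (0 = uniform)
    beta: float = 0.4    # strength of the importance-sampling correction
    eps: float = 1e-6    # keeps every priority strictly positive
    items: list[Any] = field(default_factory=list)
    priorities: list[float] = field(default_factory=list)
    next_idx: int = 0

    def add(self, experience: Any) -> None:
        """Store experience with the current max priority so it is likely to be replayed soon."""
        priority = max(self.priorities, default=1.0)
        if len(self.items) < self.capacity:
            self.items.append(experience)
            self.priorities.append(priority)
        else:
            # Buffer full: overwrite the oldest entry (ring buffer).
            self.items[self.next_idx] = experience
            self.priorities[self.next_idx] = priority
        self.next_idx = (self.next_idx + 1) % self.capacity

    def sample(self, batch_size: int, rng: random.Random) -> tuple[list[int], list[Any], list[float]]:
        """Sample indices with probability p_i^alpha / sum_k p_k^alpha and return IS weights."""
        if not self.items:
            raise ValueError("cannot sample from an empty buffer")
        scaled = [p ** self.alpha for p in self.priorities]
        total = sum(scaled)
        probs = [s / total for s in scaled]
        indices = rng.choices(range(len(self.items)), weights=probs, k=batch_size)
        n = len(self.items)
        weights = [(n * probs[i]) ** (-self.beta) for i in indices]
        max_w = max(weights)
        return indices, [self.items[i] for i in indices], [w / max_w for w in weights]

    def update_priorities(self, indices: list[int], errors: list[float]) -> None:
        """After a training step, set each sampled item's priority to |error| + eps."""
        for i, err in zip(indices, errors):
            self.priorities[i] = abs(err) + self.eps
```

Each training iteration would call `sample`, weight the per-sample losses by the returned weights, then call `update_priorities` with the new errors. A production buffer would typically use a sum tree so that sampling costs O(log N) rather than the O(N) scan shown here.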

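GAE and PPO, both named in the training code above, fit together: GAE turns rewards and value estimates into advantages, δ_t = r_t + γ·V(s_{t+1}) − V(s_t) and A_t = δ_t + γλ·A_{t+1}, and PPO's clipped surrogate objective uses those advantages to update the policy without moving too far from the old one. The sketch below works on plain Python lists for a single trajectory; it assumes per-step rewards and a learned value estimate, which the article does not specify, and real LLM training would apply the same formulas per token on tensors. The `gamma`, `lam`, and `clip_eps` defaults are common values, not ones taken from the project.

```python
import math
from typing import Sequence

def compute_gae(
    rewards: Sequence[float],
    values: Sequence[float],
    next_value: float,
    dones: Sequence[bool],
    gamma: float = 0.99,
    lam: float = 0.95,
) -> tuple[list[float], list[float]]:
    """Generalized Advantage Estimation over one trajectory.

    delta_t = r_t + gamma * V(s_{t+1}) * (1 - done_t) - V(s_t)
    A_t     = delta_t + gamma * lam * (1 - done_t) * A_{t+1}
    """
    advantages = [0.0] * len(rewards)
    gae = 0.0
    # Walk backwards so each step can reuse the advantage of the step after it.
    for t in reversed(range(len(rewards))):
        next_v = next_value if t == len(rewards) - 1 else values[t + 1]
        not_done = 0.0 if dones[t] else 1.0
        delta = rewards[t] + gamma * next_v * not_done - values[t]
        gae = delta + gamma * lam * not_done * gae
        advantages[t] = gae
    # Value-function targets: advantage plus the baseline it was measured against.
    returns = [a + v for a, v in zip(advantages, values)]
    return advantages, returns

def ppo_clip_loss(
    new_logprobs: Sequence[float],
    old_logprobs: Sequence[float],
    advantages: Sequence[float],
    clip_eps: float = 0.2,
) -> float:
    """Negative PPO clipped surrogate objective (the quantity an optimizer would minimize)."""
    total = 0.0
    for new_lp, old_lp, adv in zip(new_logprobs, old_logprobs, advantages):
        ratio = math.exp(new_lp - old_lp)  # pi_new(a|s) / pi_old(a|s)
        clipped_ratio = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Keep the pessimistic (smaller) of the unclipped and clipped objectives.
        total += min(ratio * adv, clipped_ratio * adv)
    return -total / len(advantages)
```

The `min` in `ppo_clip_loss` means a large probability ratio cannot earn extra credit on a positive advantage, while a harmful update on a negative advantage is still fully penalized, which is what keeps each policy step conservative.
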
Notably, the LLaMA-O1 code was published under a GitHub account called SimpleBerry. The account has no profile description and remains rather mysterious. Other SimpleBerry-related accounts and its official website reveal only that it is a research laboratory; they disclose nothing further about its research direction.

Besides LLaMA-O1, another o1 replication project that has publicly reported progress is O1-Journey from a Shanghai Jiao Tong University team. The team released its first progress report in early October, introducing the Journey Learning paradigm and the first model to successfully integrate search and learning into mathematical reasoning. O1-Journey's core development team consists mainly of junior and senior undergraduates at Shanghai Jiao Tong University, together with first-year doctoral students from the GAIR lab (Generative Artificial Intelligence Research). Its advisors include Liu Pengfei, associate professor at Shanghai Jiao Tong University, and Li Yuanzhi, an alumnus of Tsinghua University's Yao Class and a Sloan Award winner, among others.

Paper addresses:

- https://arxiv.org/pdf/2410.02884
- https://arxiv.org/pdf/2406.07394

The open-sourcing of the LLaMA-version o1 project marks important progress in AI-driven mathematical problem-solving and provides a solid foundation for further research and applications. We look forward to more innovations built on this project.