Research teams from Meta FAIR, UC Berkeley, and New York University have jointly developed a technique called Thought Preference Optimization (TPO), which aims to improve instruction following and response quality in large language models (LLMs). Conventional LLMs answer directly. TPO instead lets a model think and reflect internally before it replies, which produces more accurate and coherent answers. The model learns to optimize this thinking process without showing the intermediate steps to the user, building on an improved form of chain-of-thought (CoT) reasoning.

At the core of TPO is an improved chain-of-thought (CoT) reasoning method. During training, the model is encouraged to “think, then answer”, which helps it build a more organized internal thought process before it gives its final answer. Conventional CoT prompting can sometimes reduce accuracy, and it is hard to train for because there is little training data containing explicit thinking steps. TPO addresses these challenges by letting the model optimize and streamline its own thinking without exposing the intermediate steps to users.
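To make “think, then answer” concrete, here is a minimal sketch of a thought prompt and a parser that separates the hidden thoughts from the visible answer. The prompt wording, the `RESPONSE_MARKER` separator, and the function names are all hypothetical illustrations, not TPO's actual prompt or code. The key idea is that the model writes its thoughts first and only the text after the marker is shown to the user.

```python
from typing import Tuple

# Hypothetical separator between hidden thoughts and the visible answer (an assumption).
RESPONSE_MARKER = "Here is my response:"


def build_thought_prompt(query: str) -> str:
    """Wrap a user query in a hypothetical 'think, then answer' instruction."""
    return (
        "Respond to the following user query. First write down your internal "
        "thoughts, including a draft response and an evaluation of it. Then write "
        f"your final response after '{RESPONSE_MARKER}'.\n\n"
        f"User query: {query}"
    )


def split_thought_and_response(output: str) -> Tuple[str, str]:
    """Split a model output into (hidden_thought, visible_response).

    Hypothetical convention: if the marker is missing, the whole output is
    treated as thought and the visible response is empty.
    """
    head, sep, tail = output.partition(RESPONSE_MARKER)
    if not sep:
        return output.strip(), ""
    return head.strip(), tail.strip()


if __name__ == "__main__":
    sample = "Draft: Paris. Check: correct.\nHere is my response: The capital of France is Paris."
    thought, response = split_thought_and_response(sample)
    print(response)  # only this part would be shown to the user
```

Only the parsed response reaches the user. The thought text is still kept, because it remains part of what the model is trained on.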

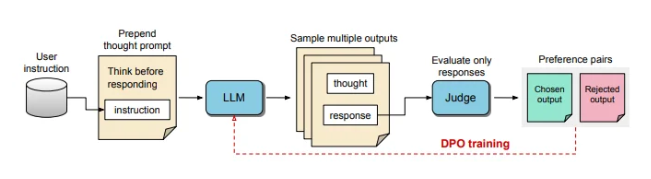

In TPO training, the model is first prompted to generate several outputs, each consisting of its thoughts followed by a final answer. A separate "judge" model then evaluates these outputs and picks the best- and worst-performing ones. These become the "chosen" and "rejected" pairs for Direct Preference Optimization (DPO), which iteratively improves the model's response quality.
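The pairing step can be sketched as follows. In this sketch, the judge is any callable that scores a response, and `Sample`, `PreferencePair`, and `build_preference_pair` are hypothetical names. Two behaviours are my own assumptions rather than TPO's documented behaviour: skipping prompts where every sample gets the same score, and joining thought and response with a marker string. The judge sees only the final response, but the stored chosen and rejected texts include the thoughts.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

# Hypothetical separator used to rebuild the full output (an assumption).
RESPONSE_MARKER = "Here is my response:"


@dataclass
class Sample:
    thought: str   # hidden thinking generated before the answer
    response: str  # final answer shown to the user


@dataclass
class PreferencePair:
    prompt: str
    chosen: str    # full output (thought + response) of the best-scored sample
    rejected: str  # full output (thought + response) of the worst-scored sample


def build_preference_pair(
    prompt: str,
    samples: List[Sample],
    judge: Callable[[str, str], float],
) -> Optional[PreferencePair]:
    """Score each sample's response with the judge and pair the best with the worst."""
    if len(samples) < 2:
        return None
    # The judge only sees the final response, never the hidden thought.
    scores = [judge(prompt, s.response) for s in samples]
    best = max(range(len(samples)), key=scores.__getitem__)
    worst = min(range(len(samples)), key=scores.__getitem__)
    if scores[best] == scores[worst]:
        return None  # assumption: no preference signal, so skip this prompt

    def full_output(s: Sample) -> str:
        # The training pair keeps the thought so DPO also shapes the thinking.
        return f"{s.thought}\n{RESPONSE_MARKER} {s.response}"

    return PreferencePair(prompt, full_output(samples[best]), full_output(samples[worst]))


if __name__ == "__main__":
    toy_judge = lambda prompt, response: float(len(response))  # stand-in for an LLM judge
    outs = [Sample("t1", "short"), Sample("t2", "a much longer answer"), Sample("t3", "mid length")]
    print(build_preference_pair("q", outs, toy_judge))
```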

TPO uses its training prompt to encourage the model to think internally before answering, which guides the model to refine its answers so they are clearer and more relevant. Evaluation is performed by an LLM-based judge that scores only the final answer. As a result, the model learns to improve answer quality without the hidden thinking steps being graded directly. The chosen and rejected examples used for DPO still include the hidden thoughts, and multiple rounds of training further refine the model's internal thinking process.
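For reference, the standard DPO objective for a single pair can be written as a small function. The inputs are summed log-probabilities of the full chosen and rejected outputs, which in TPO's setting include the hidden thoughts, under the model being trained and under a frozen reference model. The default `beta=0.1` is an illustrative assumption, not the setting reported for TPO. This is the generic DPO loss and is not taken from TPO's own code.

```python
import math


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,  # assumed value for illustration
) -> float:
    """Standard DPO loss for one (chosen, rejected) pair.

    loss = -log sigmoid(beta * [(pi_w - ref_w) - (pi_l - ref_l)])
    where each term is a summed log-probability of a full output.
    """
    margin = (policy_chosen_logp - ref_chosen_logp) - (policy_rejected_logp - ref_rejected_logp)
    z = beta * margin
    # -log(sigmoid(z)) == softplus(-z), computed in a numerically stable way
    if z >= 0:
        return math.log1p(math.exp(-z))
    return -z + math.log1p(math.exp(z))


if __name__ == "__main__":
    # Policy prefers the chosen output more than the reference does: loss below log(2).
    print(dpo_loss(-1.0, -5.0, -3.0, -3.0))
```

When the policy and the reference agree exactly, the loss equals log(2). It falls as the policy assigns relatively more probability to the chosen output than the reference model does.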

On the AlpacaEval and Arena-Hard benchmarks, TPO outperformed the direct-response baseline and also beat Llama-3-8B-Instruct prompted to think before answering. Iterative training steadily improved the model's thought generation until it surpassed several baseline models. Notably, TPO is not limited to logic and math tasks: it also improves instruction following in creative and general domains such as marketing and health.

AI and robotics expert Karan Verma shared his views on the "thinking LLM" concept on the social platform X. He said he was excited about it and looked forward to its potential in medical applications, where it could bring better treatment outcomes to patients.

This structured internal thinking lets the model handle complex instructions more effectively. It extends the model's use to areas that require multi-step reasoning and nuanced understanding, and it does so without humans having to provide dedicated thought data. The study suggests that TPO could make large language models more flexible and efficient across diverse contexts, especially where response generation must be both flexible and deep.

By giving large language models stronger reasoning and comprehension, TPO opens up new possibilities for applying them across many fields. Its advantages are greatest in tasks that involve complex thinking, and its future development is worth watching.