OpenAI has released SimpleQA, a new benchmark for evaluating the factual accuracy of answers generated by large language models. As AI technology develops rapidly, ensuring that model output is truthful is crucial, and "hallucination," in which a model generates information that seems credible but is actually wrong, has become an increasingly serious challenge. SimpleQA offers a new method and standard for addressing this problem.

As large language models develop rapidly, keeping generated content accurate poses many challenges, especially "hallucinations," where the model generates information that sounds confident but is actually wrong or unverifiable. The problem matters all the more as a growing number of people rely on AI to obtain information.

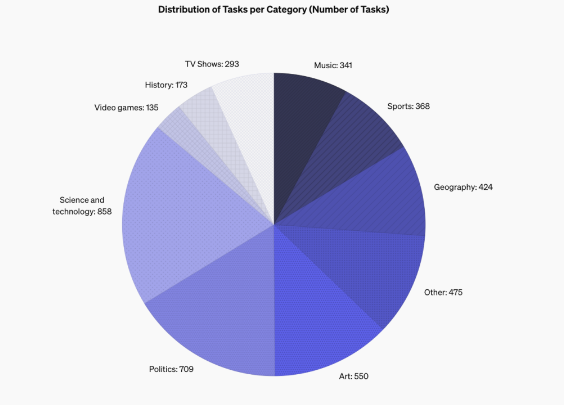

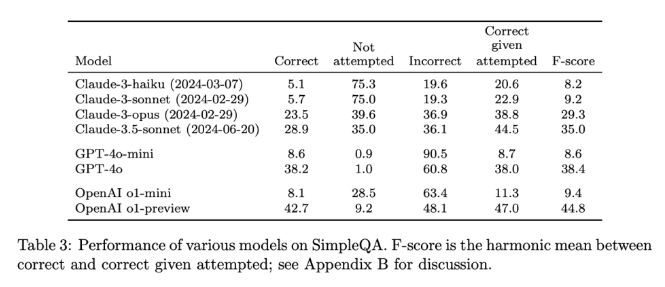

SimpleQA focuses on short, clear questions that have a single definite answer, which makes it easier to judge whether a model's answer is correct. Unlike other benchmarks, SimpleQA's questions are deliberately designed to be challenging even for state-of-the-art models such as GPT-4. The benchmark contains 4,326 questions covering fields such as history, science, technology, art, and entertainment, with particular emphasis on measuring model accuracy and calibration.
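Calibration here means that a model's confidence should match how often it is actually right. A minimal sketch of one common way to check this is shown below, assuming each answer comes with a self-reported confidence between 0 and 1 and a correctness flag. The function name `calibration_table`, the record format, and the number of bins are illustrative assumptions, not part of the SimpleQA release.

```python
def calibration_table(records, n_bins=5):
    """Group answers by stated confidence and compare with observed accuracy.

    records: iterable of (confidence, is_correct) pairs, where confidence is
    a float in [0, 1] and is_correct is a bool. Both are hypothetical inputs
    chosen for this sketch.
    """
    bins = [[] for _ in range(n_bins)]
    for confidence, is_correct in records:
        if not 0.0 <= confidence <= 1.0:
            raise ValueError(f"confidence out of range: {confidence}")
        # A confidence of exactly 1.0 falls into the last bin.
        index = min(int(confidence * n_bins), n_bins - 1)
        bins[index].append((confidence, is_correct))

    table = []
    for index, items in enumerate(bins):
        if not items:
            continue  # skip empty bins instead of reporting a fake accuracy
        mean_confidence = sum(c for c, _ in items) / len(items)
        accuracy = sum(1 for _, ok in items if ok) / len(items)
        table.append({
            "bin": index,
            "count": len(items),
            "mean_confidence": mean_confidence,
            "accuracy": accuracy,
        })
    return table


if __name__ == "__main__":
    sample = [(0.9, True), (0.9, False), (0.1, False)]
    for row in calibration_table(sample):
        print(row)
```

For a well-calibrated model, `mean_confidence` and `accuracy` would be close in every bin; a bin with high confidence but low accuracy indicates overconfidence.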

SimpleQA's design follows several key principles. First, each question has a reference answer that was determined independently by two AI trainers, which helps ensure the answer is correct.

Second, the questions are written to avoid ambiguity, and each can be answered with a short, clear answer, which makes grading relatively easy. In addition, SimpleQA uses a ChatGPT classifier for grading, explicitly labeling each answer as "correct," "incorrect," or "not attempted."
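Once every answer carries one of the three grades, summarizing a run is simple counting. The sketch below assumes the grades are already available as plain strings. The label spellings, the function name `summarize_grades`, and the extra "correct given attempted" rate are illustrative choices for this example, not the code from the simple-evals repository.

```python
from collections import Counter

# The three grade labels described above; the exact strings are assumed here.
GRADES = ("correct", "incorrect", "not_attempted")


def summarize_grades(grades):
    """Tally grade labels and turn them into simple rates."""
    counts = Counter(grades)
    unknown = set(counts) - set(GRADES)
    if unknown:
        raise ValueError(f"unexpected grade labels: {sorted(unknown)}")

    total = len(grades)
    attempted = counts["correct"] + counts["incorrect"]

    def rate(numerator, denominator):
        # Avoid division by zero on empty input.
        return numerator / denominator if denominator else 0.0

    return {
        "correct": rate(counts["correct"], total),
        "incorrect": rate(counts["incorrect"], total),
        "not_attempted": rate(counts["not_attempted"], total),
        # Accuracy over only the questions the model chose to answer.
        "correct_given_attempted": rate(counts["correct"], attempted),
    }


if __name__ == "__main__":
    sample = ["correct", "correct", "incorrect", "not_attempted"]
    print(summarize_grades(sample))
```

Keeping "not attempted" separate from "incorrect" matters: a model that declines to answer when unsure is not penalized as if it had produced a wrong fact.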

Another advantage of SimpleQA is that its questions are diverse, which guards against over-specialized models and makes the assessment comprehensive. The dataset is simple to use: because the questions and answers are short, tests run quickly and results vary little between runs. SimpleQA also takes the long-term validity of information into account. Its questions are chosen so that their answers do not change over time, making it an "evergreen" benchmark.

The release of SimpleQA is an important step toward more reliable AI-generated information. It provides an easy-to-use benchmark and also sets a high standard for researchers and developers, encouraging them to build models that not only generate language but are also truthful and accurate. By being open source, SimpleQA gives the AI community a valuable tool for improving the factual accuracy of language models, so that future AI systems are both informative and trustworthy.

Project page: https://github.com/openai/simple-evals

Details: https://openai.com/index/introducing-simpleqa/

Key points:

SimpleQA is a new benchmark launched by OpenAI, focusing on evaluating the factual accuracy of language models.

The benchmark consists of 4,326 short, clear questions covering multiple fields to ensure a comprehensive assessment.

SimpleQA helps researchers identify and improve language models' ability to generate accurate content.

In summary, SimpleQA provides a reliable tool for evaluating the accuracy of large language models, and its openness and ease of use should help move the AI field in a more truthful and trustworthy direction. We look forward to SimpleQA helping bring about more reliable and trustworthy AI systems.