Rakuten Group has released Rakuten AI 2.0, its first Japanese Large Language Model (LLM) based on a Mixture of Experts (MoE) architecture, and Rakuten AI 2.0 mini, its first Small Language Model (SLM), with the aim of advancing artificial intelligence in Japan. Rakuten AI 2.0 is an 8x7B MoE model made up of eight experts of 7 billion parameters each, trained on Japanese and English data for efficient text processing. Rakuten AI 2.0 mini is a compact model with 1.5 billion parameters, designed for cost-effective and easy deployment on edge devices. Both models are open source and are offered in instruction fine-tuned, preference-optimized versions to support a range of text generation tasks.

Rakuten Group has announced the release of Rakuten AI 2.0, its first Japanese Large Language Model (LLM) based on a Mixture of Experts (MoE) architecture, and Rakuten AI 2.0 mini, its first Small Language Model (SLM).

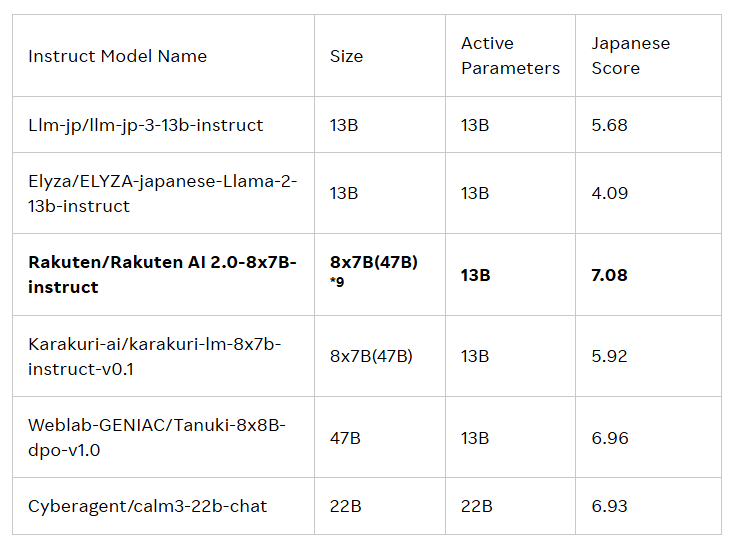

The release of these two models is intended to advance the development of artificial intelligence (AI) in Japan. Rakuten AI 2.0 is based on the Mixture of Experts (MoE) architecture: it is an 8x7B model made up of eight models with 7 billion parameters, each serving as an expert. Each input token is sent to the two most relevant experts, which are selected by a router. The experts and the router are trained jointly on a large volume of high-quality Japanese and English data.
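The routing step described above can be sketched in a few lines. This is a toy illustration of top-2 expert routing in general, not Rakuten's implementation: the hidden size, the random weights, the single-matrix "experts", and the choice to apply softmax only over the two selected scores are all assumptions made for the example.

```python
import math
import random

NUM_EXPERTS = 8   # from the article: eight experts
TOP_K = 2         # from the article: two experts per token
HIDDEN = 4        # toy hidden size, chosen only for illustration


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vector):
    """Multiply a row-major matrix by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def route_token(token, router_weights, experts, top_k=TOP_K):
    """Send one token vector to its top_k experts and mix their outputs."""
    logits = matvec(router_weights, token)  # one router score per expert
    ranked = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)
    chosen = ranked[:top_k]
    # Assumed gating: renormalise over the chosen experts only.
    gates = softmax([logits[i] for i in chosen])
    output = [0.0] * len(token)
    for gate, idx in zip(gates, chosen):
        expert_out = matvec(experts[idx], token)  # toy expert: one linear map
        output = [o + gate * e for o, e in zip(output, expert_out)]
    return output, chosen, gates


rng = random.Random(0)  # fixed seed so the toy weights are reproducible
router_weights = [[rng.gauss(0, 1) for _ in range(HIDDEN)]
                  for _ in range(NUM_EXPERTS)]
experts = [[[rng.gauss(0, 1) for _ in range(HIDDEN)] for _ in range(HIDDEN)]
           for _ in range(NUM_EXPERTS)]
token = [rng.gauss(0, 1) for _ in range(HIDDEN)]

mixed, chosen, gates = route_token(token, router_weights, experts)
print("experts used:", chosen)
print("gate weights:", [round(g, 3) for g in gates])
```

Only two of the eight experts run for any given token, which is why an MoE model can hold many parameters while keeping the per-token compute closer to that of a much smaller dense model.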

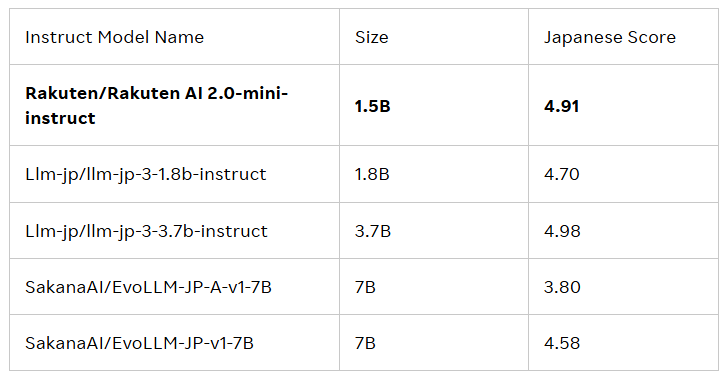

Rakuten AI 2.0 mini is a new dense model with 1.5 billion parameters, designed for cost-effective deployment on edge devices and suited to specific application scenarios. It is also trained on a mix of Japanese and English data, with the goal of providing a convenient solution. Both models have been instruction fine-tuned and preference-optimized, and both base and instruct versions have been released to support enterprises and professionals in developing AI applications.
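To give a rough sense of why a model of this size suits edge devices, the sketch below estimates the storage needed for the weights alone. Only the 1.5 billion parameter count comes from the article; the numeric formats and their byte sizes are general assumptions, and real memory use is higher once activations and runtime overhead are included.

```python
PARAMS = 1.5e9  # parameter count stated in the article

# Bytes per parameter for common weight formats (assumed, not from the article).
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params, bytes_per_param):
    """Approximate weight storage in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


estimates = {fmt: weight_memory_gb(PARAMS, size)
             for fmt, size in BYTES_PER_PARAM.items()}
for fmt, gb in estimates.items():
    print(f"{fmt}: about {gb:.2f} GB of weights")
```

Under these assumptions the weights take about 3 GB at 16-bit precision and under 1 GB at 4-bit, which is within reach of many laptops and some mobile devices.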

All models are released under the Apache 2.0 license and are available from Rakuten Group's official Hugging Face repository. They can be used commercially for text generation, content summarization, question answering, text understanding, and building dialogue systems. The models can also serve as a base for building other models, which facilitates further development and application.
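As a minimal sketch of one such use, the snippet below wraps a summarization-style prompt around the Hugging Face `transformers` text-generation pipeline. The repository name in `MODEL_ID` is an assumption and should be checked against Rakuten's Hugging Face page; `build_prompt` and `generate` are hypothetical helpers written for this example, and the plain-text prompt does not use any chat format the instruct model may expect.

```python
# Assumed repository name; verify it on Rakuten's Hugging Face page before use.
MODEL_ID = "Rakuten/RakutenAI-2.0-mini-instruct"


def build_prompt(task, text):
    """Combine a task instruction and the input text into one plain prompt."""
    return f"{task.strip()}\n\n{text.strip()}\n"


def generate(prompt, model_id=MODEL_ID, max_new_tokens=128):
    """Run the prompt through a Hugging Face text-generation pipeline."""
    # Imported lazily so build_prompt works without transformers installed.
    from transformers import pipeline

    generator = pipeline("text-generation", model=model_id)
    result = generator(prompt, max_new_tokens=max_new_tokens)
    # By default the returned text includes the prompt followed by the completion.
    return result[0]["generated_text"]
```

Calling `generate(build_prompt("Summarize the following text in one sentence.", article_text))` would download the weights on first use, so it needs network access and enough memory for the chosen model.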

Ting Cai, Chief AI and Data Officer at Rakuten Group, said: “I am extremely proud of how our team has combined data, engineering and science to launch Rakuten AI 2.0. Our new AI models provide powerful and cost-effective solutions that help enterprises make intelligent decisions, accelerate value realization, and open up new possibilities. Through open models, we hope to accelerate the development of AI in Japan, encourage all Japanese companies to build, experiment and grow, and foster a win-win, collaborative community.”

Official blog: https://global.rakuten.com/corp/news/press/2025/0212_02.html

Key points:

Rakuten Group has released Rakuten AI 2.0, its first Japanese Large Language Model (LLM) based on a Mixture of Experts (MoE) architecture, and Rakuten AI 2.0 mini, its first Small Language Model (SLM).

Rakuten AI 2.0 uses a Mixture of Experts architecture with eight experts of 7 billion parameters each, and is trained on Japanese and English data for efficient text processing.

All models are available from Rakuten's official Hugging Face repository. They are suitable for a variety of text generation tasks and can serve as a base for other models.

In short, the launch of Rakuten AI 2.0 and Rakuten AI 2.0 mini marks another important step for Japan in the field of large language models. Their open source nature should help build and grow Japan's AI ecosystem, and their future applications and impact are worth watching.